Zero And Few Shot Text Retrieval And Ranking Using Large Language

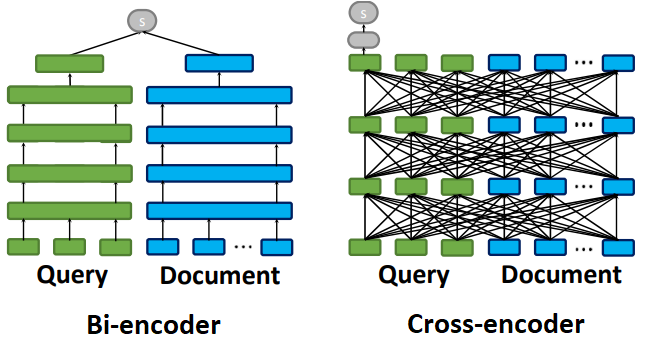

This article gives a brief overview of a standard text ranking workflow and then introduces several recently proposed ideas for using large language models (LLMs) to improve text ranking. One such proposal is RefRank, a simple and effective comparative ranking method based on a fixed reference document: instead of comparing all document pairs, RefRank prompts the LLM to evaluate each candidate relative to a shared reference anchor.
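The reference-anchor idea can be illustrated with a minimal sketch. It is not the RefRank authors' implementation: the names `Judge`, `toy_judge`, and `rank_against_reference` are hypothetical, and the judge is a simple term-overlap stand-in for the LLM call. The point it shows is that each candidate is compared once against the shared reference, so the number of comparisons grows linearly with the candidate count rather than with the number of document pairs.

```python
from typing import Callable, List, Tuple

# Hypothetical judge signature: (query, candidate, reference) -> preference score.
# A positive score means the candidate is preferred over the reference.
Judge = Callable[[str, str, str], float]


def toy_judge(query: str, candidate: str, reference: str) -> float:
    """Stand-in for an LLM comparison (assumption): difference in query-term overlap."""
    query_terms = set(query.lower().split())
    cand_overlap = len(query_terms & set(candidate.lower().split()))
    ref_overlap = len(query_terms & set(reference.lower().split()))
    return float(cand_overlap - ref_overlap)


def rank_against_reference(
    query: str,
    candidates: List[str],
    reference: str,
    judge: Judge = toy_judge,
) -> List[Tuple[str, float]]:
    """Score every candidate against the same reference, then sort by score."""
    # One judge call per candidate: len(candidates) comparisons in total.
    scored = [(doc, judge(query, doc, reference)) for doc in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    ranked = rank_against_reference(
        query="zero shot ranking",
        candidates=[
            "cooking pasta at home",
            "zero shot ranking with llms",
            "shot ranking basics",
        ],
        reference="ranking with models",
    )
    for doc, score in ranked:
        print(f"{score:+.1f}  {doc}")
```

In a real setup the judge would wrap a prompt that shows the query, the candidate, and the reference document to the model; how the reference is chosen is left open here.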

LLMs can also be used as zero-shot or few-shot classifiers through a simple natural language prompt interface, which is handy for downstream text analysis tasks such as classification, sentiment analysis, and topic labeling. A separate line of work explores to what extent the findings on zero-shot LLM rerankers established on plain-text corpora hold for datasets containing predominantly or exclusively precomputed ranking features. This question is examined through an empirical study on one public learning-to-rank dataset (MSLR-WEB10K) and two datasets collected from an audio streaming platform's search logs. Another study systematically compares the performance of large language models in zero-shot and few-shot text classification tasks. Experiments were conducted on a ...
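The difference between zero-shot and few-shot prompting comes down to whether labeled examples are placed in the prompt. The sketch below only builds the prompt string; `build_prompt` and its wording are assumptions for illustration and do not reproduce the interface of any particular library.

```python
from typing import List, Sequence, Tuple


def build_prompt(
    text: str,
    labels: List[str],
    examples: Sequence[Tuple[str, str]] = (),
) -> str:
    """Build a classification prompt; with no examples it is a zero-shot prompt."""
    blocks = [f"Classify the text into one of: {', '.join(labels)}."]
    # Few-shot: each (text, label) pair becomes a worked example in the prompt.
    for example_text, example_label in examples:
        blocks.append(f"Text: {example_text}\nLabel: {example_label}")
    # The final block leaves the label open for the model to complete.
    blocks.append(f"Text: {text}\nLabel:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    labels = ["positive", "negative"]
    print(build_prompt("great album", labels))
    print("---")
    print(build_prompt("great album", labels, examples=[("awful mix", "negative")]))
```

The returned string would then be sent to whichever model is in use, and the completion parsed back into one of the allowed labels.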

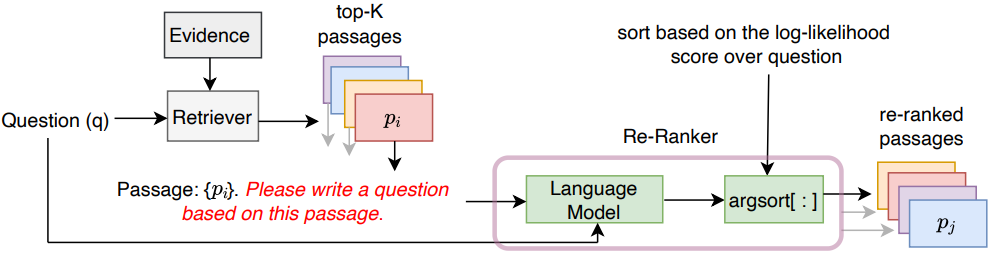

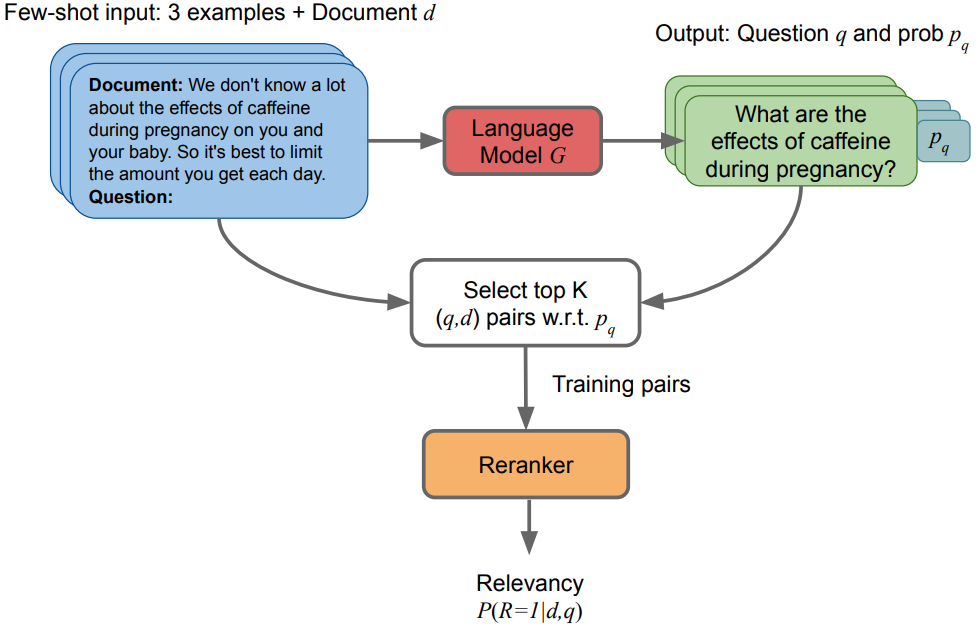

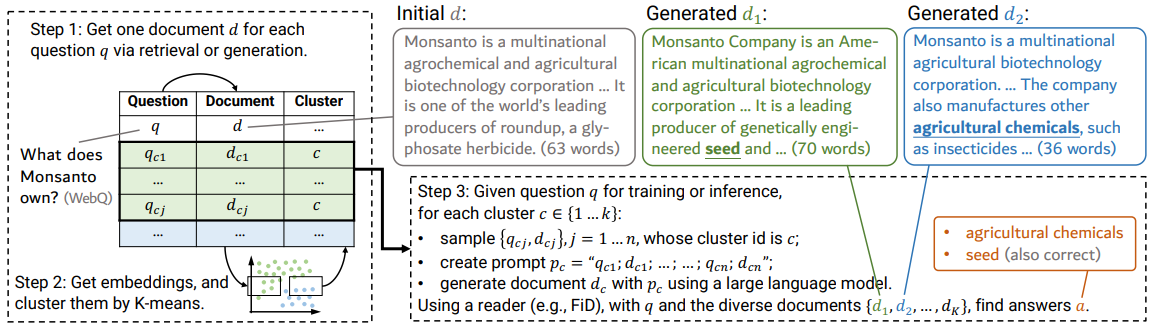

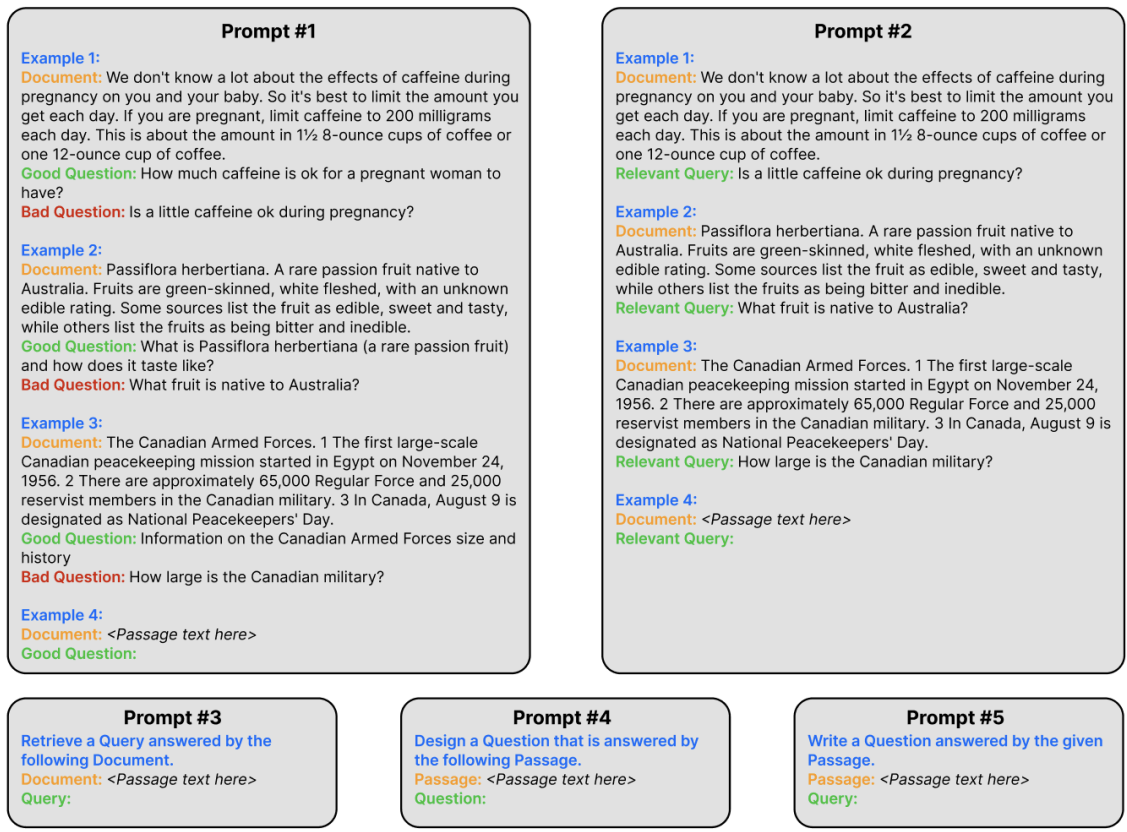

Recently, there has been growing interest in applying LLMs to zero-shot text ranking. A recent paradigm uses prompting-based approaches to employ LLMs directly as rerankers in a multi-stage ranking pipeline. Work on LLM-based recommendation reports that the issues zero-shot LLM rankers run into can be alleviated with specially designed prompting and bootstrapping strategies; equipped with these insights, zero-shot LLMs can even challenge conventional recommendation models when the ranking candidates are retrieved by multiple candidate generators. Other work analyzes and compares the effectiveness of monolingual reranking using either query or document translations, and also evaluates the effectiveness of LLMs when they leverage their own generated translations.
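A multi-stage pipeline of this kind can be sketched as a cheap first-stage retriever followed by a more expensive reranker that only sees the top candidates. Everything below is a toy under stated assumptions: `first_stage` uses plain term overlap instead of a real retriever, and `toy_scorer` is a hypothetical placeholder for the relevance score an LLM prompt would produce.

```python
from typing import Callable, List

# Hypothetical scorer signature: (query, document) -> relevance score.
Scorer = Callable[[str, str], float]


def first_stage(query: str, corpus: List[str], k: int) -> List[str]:
    """Cheap candidate generation: keep the k documents with the most query-term overlap."""
    query_terms = set(query.lower().split())
    by_overlap = sorted(
        corpus,
        key=lambda doc: len(query_terms & set(doc.lower().split())),
        reverse=True,
    )
    return by_overlap[:k]


def toy_scorer(query: str, doc: str) -> float:
    """Placeholder for an LLM relevance judgment (assumption): query-term density."""
    query_terms = set(query.lower().split())
    tokens = doc.lower().split()
    return sum(token in query_terms for token in tokens) / len(tokens) if tokens else 0.0


def rerank(query: str, candidates: List[str], scorer: Scorer = toy_scorer) -> List[str]:
    """Second stage: reorder only the retrieved candidates with the costlier scorer."""
    return sorted(candidates, key=lambda doc: scorer(query, doc), reverse=True)


if __name__ == "__main__":
    corpus = [
        "llm reranking in a long multi stage search pipeline",
        "llm reranking",
        "cooking pasta",
        "reranking notes for search",
    ]
    candidates = first_stage("llm reranking", corpus, k=2)
    for doc in rerank("llm reranking", candidates):
        print(doc)
```

Restricting the second stage to `k` candidates is what keeps the number of model calls bounded regardless of corpus size.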


