XGBoost Fun Tutorial: Beginner to Advanced (Boosted Trees, Distributed Training, Advanced Features)

System Architecture of an XGBoosted Tree

I suspect the problem could be a compatibility issue between scikit-learn and XGBoost, or with the Python version. I am using Python 3.12, and both scikit-learn and XGBoost are installed at their latest versions. I attempted to tune the hyperparameters of an `XGBRegressor` using `RandomizedSearchCV` from scikit-learn. Once a model is trained, we can plot the feature importances with XGBoost's built-in function: call `plot_importance(model, max_num_features=15)` and then `pyplot.show()`. Use `max_num_features` in `plot_importance` to limit the number of features shown.

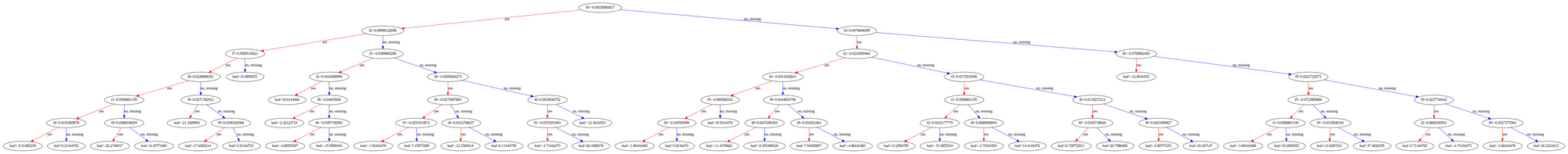

Visualizing XGBoost Trees

"When using XGBoost we need to convert categorical variables into numeric." Not always. If `booster='gbtree'` (the default), XGBoost can use categorical variables that are already encoded as numbers directly, without one-hot encoding (dummifying) them; the trees simply split on the integer codes. If the label is a string rather than an integer, however, then yes, we need to convert it.

The `sample_weight` parameter lets you specify a different weight for each training example. The `scale_pos_weight` parameter lets you provide one weight for an entire class of examples (the "positive" class). These correspond to two different approaches to cost-sensitive learning. If you believe that the cost of misclassifying a positive example (missing a cancer patient) is the same for all positive examples, a single class-level weight (`scale_pos_weight`) is enough; if that cost varies from example to example, per-example weights (`sample_weight`) are the more natural choice.

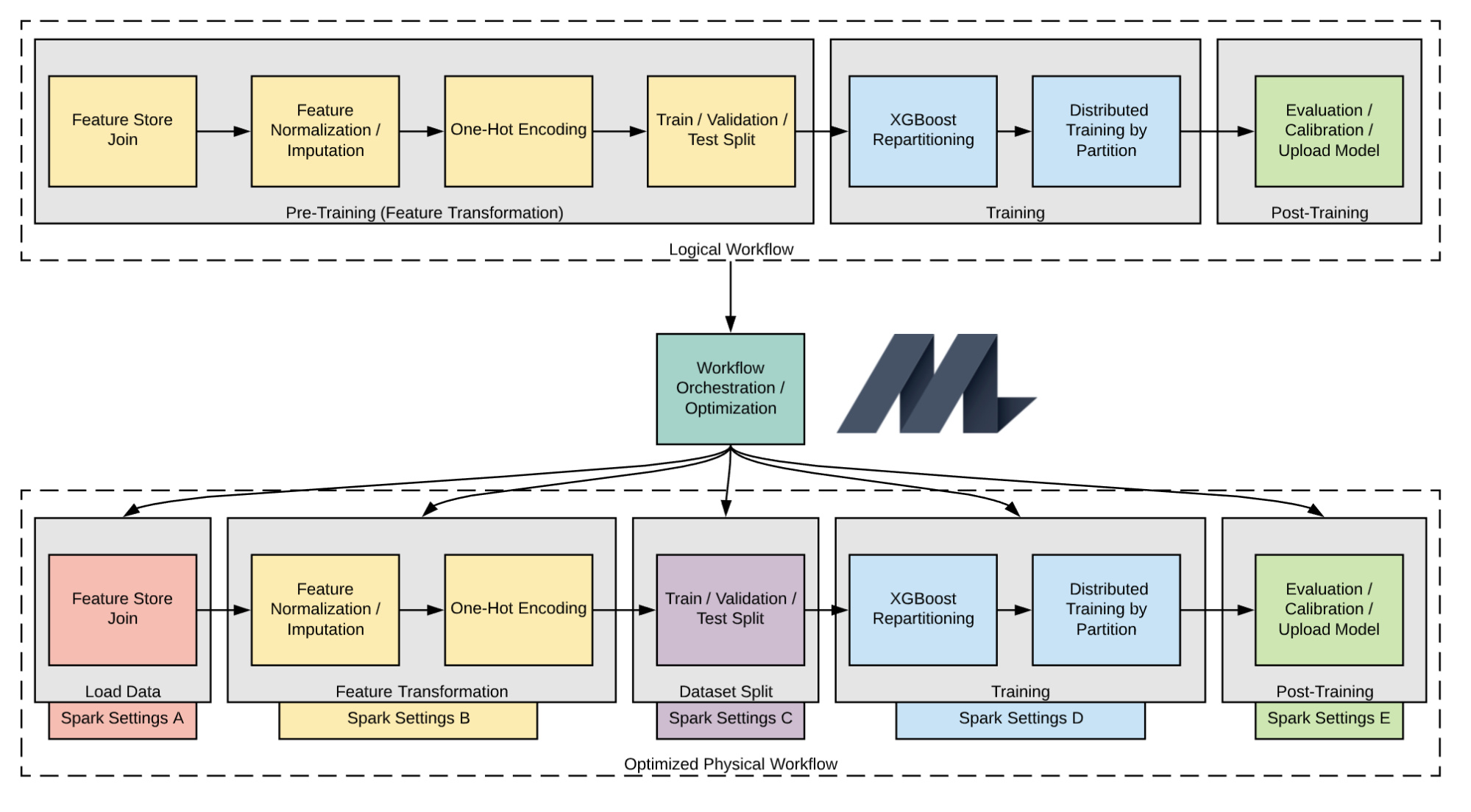

I use the Python implementation of XGBoost. One of its objectives is `rank:pairwise`, which minimizes a pairwise loss (see the documentation). However, the documentation does not say anything about the range of the output. I see numbers between -10 and 10, but can the output, in principle, range from -inf to inf?

Multiclass classification with the XGBoost classifier?

XGBoost categorical issues during time-series prediction: I observed that categorical variables were being converted back to strings, causing failures. The issue arises during model…

At least with the `glm` function in R, modeling `count ~ x1 + x2 + offset(log(exposure))` with `family=poisson(link='log')` is equivalent to modeling `I(count/exposure) ~ x1 + x2` with `family=poisson(link='log')` and `weights=exposure`. That is, normalize your count by exposure to get a frequency, and model the frequency with exposure as the weight. Your estimated coefficients should be the same in both cases.

Is it possible to train an XGBoost model that has multiple continuous outputs (multi-output regression)? What would be the objective for training such a model? Thanks in advance for any suggestions.

My code is as follows:

```python
from sklearn.model_selection import train_test_split
from xgboost import XGBClassifier
import pandas as pd

random_state = 55  # you will pass it to every sklearn call so we e…
```


