Workaround OpenAI's Token Limit With Chain Types

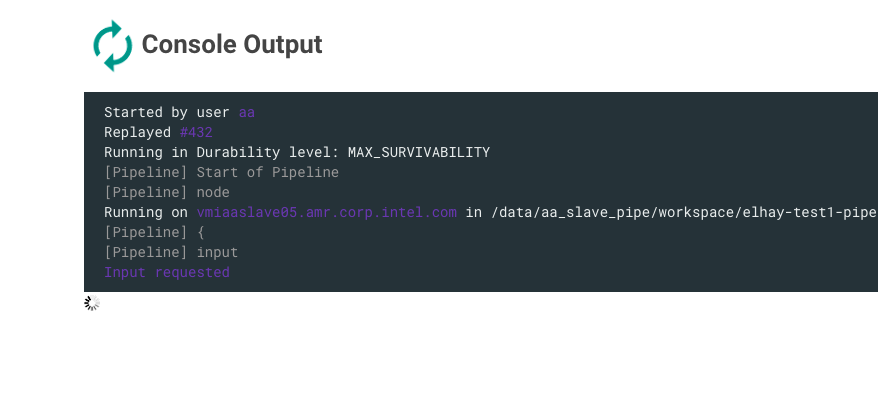

Workaround OpenAI's Token Limit With Chain Types, by Elhay Efrat (Medium)

We will need to convert the text tokenized by NLTK back into plain, untokenized text, because the OpenAI GPT-3 API does not handle pre-tokenized text very well, which can result in a higher token count.

The same topic is covered in the video "Workaround OpenAI's Token Limit With Chain Types" by Greg Kamradt.
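The conversion step can be sketched with the standard library alone. The `detokenize` helper below is hypothetical and deliberately simplified: it only reattaches common punctuation, brackets and English contractions that a Treebank-style tokenizer splits off. NLTK itself ships `TreebankWordDetokenizer` (in `nltk.tokenize.treebank`) for the same job, which handles more cases.

```python
import re


def detokenize(tokens: list[str]) -> str:
    """Rejoin word tokens into plain text (hypothetical, simplified helper)."""
    text = " ".join(tokens)
    # Remove the space the join puts before closing punctuation: "world ." -> "world."
    text = re.sub(r" ([.,!?;:%)\]}])", r"\1", text)
    # Remove the space after opening brackets: "( really" -> "(really"
    text = re.sub(r"([(\[{]) ", r"\1", text)
    # Reattach contractions split off by Treebank-style tokenizers: "It 's" -> "It's"
    text = re.sub(r" (n't|'s|'re|'ve|'ll|'d|'m)\b", r"\1", text)
    return text


tokens = ["Hello", ",", "world", "!", "It", "'s", "a", "test", "(", "really", ")", "."]
print(detokenize(tokens))
```

Sending the rejoined string rather than the space-separated token list avoids the stray spaces around punctuation that the tokenizer introduced.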

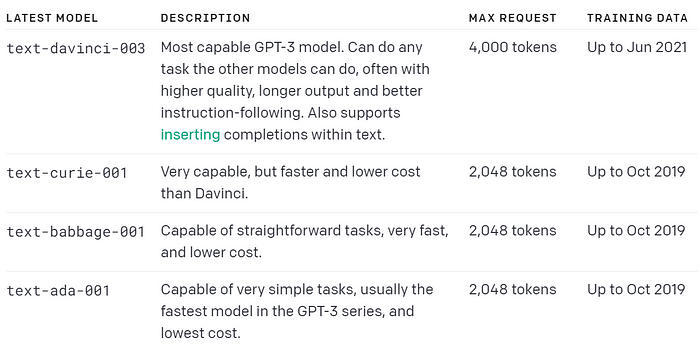

Overcoming OpenAI's Token Limit: Leveraging Chain Types in LangChain

Learn how to use the OpenAI API to hold a conversation with GPT-4 and how to work around its token limits. Right now, it's possible to harness the power of LLMs (large language models) through several public APIs, giving us access to the likes of ChatGPT, Claude and Gemini for use in our own applications.

I'm using LangChain and OpenAI to implement a natural-language-to-SQL query tool. It works okay for schemas with a small number of simple tables. However, when I try to use it for schemas that have …

Learn how to overcome OpenAI's token limit in this video tutorial using chain types.

Hi all, I'm facing a persistent issue with OpenAI's 8192-token limit (using GPT-4o mini), and I want to proactively handle the token-limit error before it happens, not just catch it after it throws.
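One way to handle the limit proactively, rather than catching the error, is to estimate the prompt size before every request and drop the oldest conversation turns until it fits. The sketch below makes several labeled assumptions: `fit_messages` and `estimate_tokens` are hypothetical helpers, the 4-characters-per-token figure is only a rough rule of thumb for English text (an exact tokenizer such as OpenAI's `tiktoken` would be used in practice), and the default budget and per-message overhead are illustrative values, not documented ones.

```python
# Assumption: roughly 4 characters per token for English text (a common
# rule of thumb). Replace estimate_tokens with an exact tokenizer such as
# tiktoken when the budget really matters.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Crude token estimate, rounded up so the check errs on the safe side."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fit_messages(messages, limit=8192, reserve_for_reply=1024, per_message_overhead=4):
    """Drop the oldest non-system messages until the prompt fits the budget.

    Hypothetical helper: limit, reserve_for_reply and per_message_overhead are
    illustrative defaults, not values from OpenAI documentation.
    Raises ValueError instead of sending a request that would fail.
    """
    budget = limit - reserve_for_reply

    def cost(msgs):
        return sum(estimate_tokens(m["content"]) + per_message_overhead for m in msgs)

    system = [m for m in messages if m["role"] == "system"]
    history = [m for m in messages if m["role"] != "system"]
    # Always keep the newest message; discard the oldest turns first.
    while len(history) > 1 and cost(system + history) > budget:
        history.pop(0)
    if cost(system + history) > budget:
        raise ValueError("prompt exceeds the token budget even after trimming history")
    return system + history


messages = [
    {"role": "system", "content": "You translate questions into SQL."},
    {"role": "user", "content": "x" * 40000},  # an old, very long turn
    {"role": "assistant", "content": "SELECT 1;"},
    {"role": "user", "content": "How many orders were placed today?"},
]
print([m["role"] for m in fit_messages(messages)])  # ['system', 'assistant', 'user']
```

Reserving part of the limit for the reply matters because the model's output also counts against the same context window.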

For documents or prompts that are over the limit, you can use map-reduce: split the document or prompt into smaller sections, summarize each section, and consolidate those summaries into a single summary that fits within the 4k-token limit.

This limit is separate from the TPM (tokens-per-minute) limits and represents overall usage across all deployments. It cannot be increased beyond the specified limit, but it allows for tracking and managing usage across multiple deployments.

So under either scenario, you'd have to shorten your examples to fit those limits. There is no real workaround: if the examples are too large, they will get truncated, which risks negatively impacting your fine-tuning results.

OpenAI's models, such as GPT-3.5 and GPT-4, have a token limit for each request. This limit means that t…
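The map-reduce approach can be sketched in plain Python. This is an illustration of the pattern, not LangChain's implementation: in LangChain the same idea corresponds to the `map_reduce` chain type (for example in `load_summarize_chain`). Two assumptions are worth flagging: `toy_summarize` is a hypothetical stand-in for a real model call, and the budget is measured in characters rather than tokens to keep the example self-contained.

```python
from typing import Callable


def split_into_chunks(text: str, max_chars: int) -> list[str]:
    """Greedily pack whitespace-separated words into chunks of at most max_chars."""
    chunks: list[str] = []
    current = ""
    for word in text.split():
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = word  # an over-long single word becomes its own chunk
    if current:
        chunks.append(current)
    return chunks


def map_reduce_summarize(text: str, summarize: Callable[[str], str], max_chars: int = 2000) -> str:
    """Map: summarize each chunk. Reduce: join the partial summaries and
    repeat until the result fits in a single call."""
    if len(text) <= max_chars:
        return summarize(text)
    partials = [summarize(chunk) for chunk in split_into_chunks(text, max_chars)]
    combined = "\n".join(partials)
    if len(combined) >= len(text):
        # Guard against infinite recursion when summaries do not shrink the text.
        raise ValueError("partial summaries are not shorter than the input")
    return map_reduce_summarize(combined, summarize, max_chars)


def toy_summarize(chunk: str) -> str:
    """Hypothetical stand-in for an LLM call: keep the first 40 characters."""
    return chunk[:40]


long_text = " ".join(f"word{i}" for i in range(1000))
print(map_reduce_summarize(long_text, toy_summarize, max_chars=200))
```

The recursive reduce step is what lets the final summary fit a fixed limit regardless of the input length, at the cost of one model call per chunk per level.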
