What Is Ollama? Running Local LLMs Made Simple

Get up and running with large language models. Ollama’s new app supports file drag and drop, making it easier to reason over text files or PDFs. For processing large documents, Ollama’s context length can be increased in the settings.
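When Ollama is driven through its local HTTP API rather than the app, the context length is typically set per request with the `num_ctx` option. The following is a minimal sketch, assuming a server at the default address `http://localhost:11434` and an already pulled model named `llama3.2`; the helper names `build_generate_request` and `generate` are hypothetical and not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, num_ctx: int) -> dict:
    """Build a non-streaming /api/generate payload with a custom context length."""
    if num_ctx <= 0:
        raise ValueError("num_ctx must be a positive number of tokens")
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a stream of chunks
        "options": {"num_ctx": num_ctx},
    }


def generate(payload: dict, url: str = GENERATE_URL) -> str:
    """POST the payload to a running Ollama server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


# Building the payload needs no server; calling generate(payload) does.
payload = build_generate_request("llama3.2", "Summarize the attached report.", num_ctx=8192)
print(json.dumps(payload, indent=2))
```

A larger `num_ctx` lets the model see more of a long document at once, at the cost of more memory.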

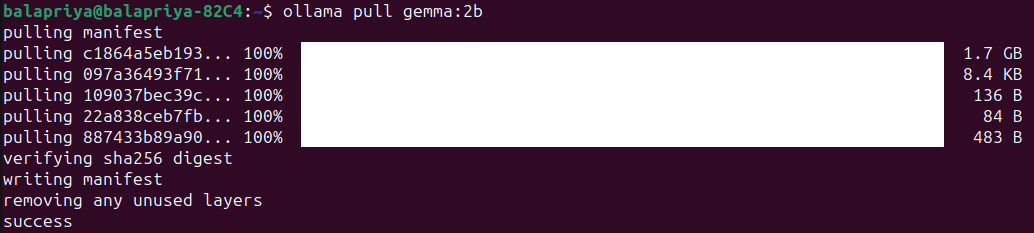

Ollama is now available as an official Docker-sponsored open-source image, making it simpler to get up and running with large language models using Docker containers. Ollama can be downloaded for macOS, Linux, and Windows; the Windows download requires Windows 10 or later. Ollama is now available on Windows in preview, making it possible to pull, run, and create large language models in a new native Windows experience. Ollama on Windows includes built-in GPU acceleration, access to the full model library, and serves the Ollama API, including OpenAI compatibility.
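Because the server exposes an OpenAI-compatible API, requests in the OpenAI chat-completions format can be sent to the local machine. The sketch below uses only the Python standard library and assumes the default base URL `http://localhost:11434/v1` and a pulled model named `llama3.2`; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: default local Ollama address with the OpenAI-compatible prefix.
BASE_URL = "http://localhost:11434/v1"


def build_chat_payload(model: str, user_text: str) -> dict:
    """Build a request body in the OpenAI chat-completions format."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }


def extract_reply(response_body: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat-completions response."""
    return response_body["choices"][0]["message"]["content"]


def chat(payload: dict, base_url: str = BASE_URL) -> str:
    """POST the payload to the local server; requires Ollama to be running."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return extract_reply(json.loads(response.read()))
```

With a server running, `chat(build_chat_payload("llama3.2", "Why is the sky blue?"))` would return the model's answer as a string.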

Ollama now supports structured outputs, making it possible to constrain a model’s output to a specific format defined by a JSON schema. The Ollama Python and JavaScript libraries have been updated to support structured outputs.

Llama 3.2 Vision can be started with `ollama run llama3.2-vision:90b`. To add an image to the prompt, drag and drop it into the terminal, or add a path to the image to the prompt on Linux. Note: Llama 3.2 Vision 11B requires at least 8 GB of VRAM, and the 90B model requires at least 64 GB of VRAM. Example uses include handwriting recognition, optical character recognition (OCR), charts and tables, and image Q&A.

Llama 3 is now available to run on Ollama. This model is the next generation of Meta's state-of-the-art large language model, and was described at release as the most capable openly available LLM to date.

OLMo 2 (olmo2) is a new family of 7B and 13B models trained on up to 5T tokens. These models are on par with or better than equivalently sized fully open models, and competitive with open-weight models such as Llama 3.1 on English academic benchmarks.
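To make the structured outputs feature mentioned above more concrete, here is a minimal sketch of a chat request whose `format` field carries a JSON schema, plus a small check on the reply. It assumes the default endpoint `http://localhost:11434/api/chat` and a pulled model named `llama3.2`; the schema `COUNTRY_SCHEMA` and the helper names are hypothetical examples, and the check only verifies required keys rather than performing full JSON Schema validation.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
CHAT_URL = "http://localhost:11434/api/chat"

# Hypothetical schema describing the shape the reply should have.
COUNTRY_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "capital": {"type": "string"},
        "languages": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["name", "capital", "languages"],
}


def build_structured_request(model: str, question: str, schema: dict) -> dict:
    """Build a non-streaming /api/chat payload constrained by a JSON schema."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "format": schema,
        "stream": False,
    }


def parse_structured_reply(response_body: dict, schema: dict) -> dict:
    """Decode the JSON text in the reply and check that required keys are present."""
    data = json.loads(response_body["message"]["content"])
    missing = [key for key in schema.get("required", []) if key not in data]
    if missing:
        raise ValueError(f"reply is missing required keys: {missing}")
    return data


def ask(payload: dict, url: str = CHAT_URL) -> dict:
    """POST the payload to a running Ollama server and return the parsed reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return parse_structured_reply(json.loads(response.read()), payload["format"])
```

With a server running, `ask(build_structured_request("llama3.2", "Tell me about Canada.", COUNTRY_SCHEMA))` would return a dictionary with the three required keys.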

