What Are LLM Benchmarks? Types, Challenges, and Evaluators

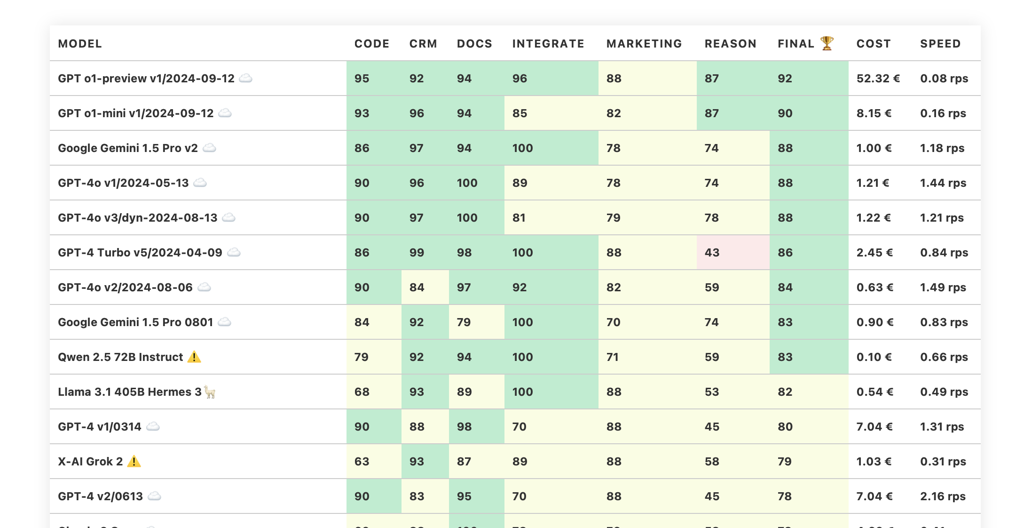

Explore LLM benchmarks, their importance in evaluating language model performance, and their impact on AI advancements. In this post, we'll walk through some tried-and-true best practices, common pitfalls, and handy tips to help you benchmark your LLM's performance. Whether you're just starting out or looking for a quick refresher, these guidelines will keep your evaluation strategy on solid ground.

Evaluation is crucial for identifying a model's strengths and weaknesses, offering insights for improvement, and guiding the fine-tuning process. When evaluating LLMs, it's important to distinguish between two primary types: model evaluation and system evaluation.

There are several types of benchmarks used to evaluate LLMs, each focusing on a different aspect of their functionality. One of the most widely recognized categories is natural language understanding (NLU), whose purpose is to assess how well an LLM understands and interprets human language.

LLM benchmarks are standardized frameworks for assessing the performance of large language models (LLMs). They consist of sample data, a set of questions or tasks that test LLMs on specific skills, metrics for evaluating performance, and a scoring mechanism. The tasks range from simple sentence understanding to more complex activities like reasoning, code generation, and even ethical decision-making.
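The four components named above (sample data, tasks, a metric, and a scoring mechanism) can be sketched in a few lines of Python. This is a minimal illustration, not how any particular benchmark is implemented: the `Example` class, `exact_match`, `run_benchmark`, the sample questions, and `toy_model` are all hypothetical names and data made up for this sketch, and the "model" is just a lookup table standing in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Example:
    """One benchmark item: a task prompt plus its expected answer."""
    prompt: str
    reference: str


def exact_match(prediction: str, reference: str) -> float:
    """Metric: 1.0 if the strings match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


def run_benchmark(
    model: Callable[[str], str],
    examples: list[Example],
    metric: Callable[[str, str], float] = exact_match,
) -> float:
    """Scoring mechanism: the mean metric value over all examples."""
    if not examples:
        raise ValueError("benchmark needs at least one example")
    scores = [metric(model(ex.prompt), ex.reference) for ex in examples]
    return sum(scores) / len(scores)


# Hypothetical sample data; a real benchmark ships a much larger curated set.
SAMPLE_DATA = [
    Example("What is 2 + 2?", "4"),
    Example("What is the capital of France?", "Paris"),
    Example("What is the opposite of 'hot'?", "cold"),
    Example("How many days are in a week?", "7"),
]


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM call: a fixed lookup table (assumption for the demo)."""
    answers = {
        "What is 2 + 2?": "4",
        "What is the capital of France?": "paris ",
        "How many days are in a week?": "seven",
    }
    return answers.get(prompt, "unknown")


score = run_benchmark(toy_model, SAMPLE_DATA)
print(f"exact-match accuracy: {score:.2f}")
```

Note that the toy model answers "seven" where the reference is "7" and is scored as wrong. Exact match is easy to compute but strict, which is one reason the choice of metric matters as much as the choice of tasks.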

So, let's cut to the chase: LLM benchmarks are basically the report cards for large language models. They tell us how well these models are doing their jobs, whether that's generating text, understanding context, or even creating poetry. But why should you care? The rise of large language models has become a crucial factor in creating and advancing intelligent business operations, so evaluation needs to cover accuracy, safety, and real-world model performance.

To address these challenges, the AI community has developed specialized benchmarking categories with different methodologies. LLM benchmarks can be categorized by the specific capabilities they measure, and understanding these types helps in selecting the right benchmark for a particular model or task.
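To see why grouping benchmarks by capability helps, here is a small sketch that breaks one overall score down by category. The category names follow the capabilities mentioned in this post; the `RESULTS` data and the `scores_by_category` helper are hypothetical and exist only for illustration.

```python
from collections import defaultdict

# Hypothetical per-example results: (category, 1.0 if correct else 0.0).
RESULTS = [
    ("language understanding", 1.0),
    ("language understanding", 1.0),
    ("reasoning", 1.0),
    ("reasoning", 0.0),
    ("code generation", 0.0),
    ("code generation", 0.0),
    ("code generation", 1.0),
    ("code generation", 1.0),
]


def scores_by_category(results: list[tuple[str, float]]) -> dict[str, float]:
    """Return the mean score for each capability category."""
    totals: dict[str, list[float]] = defaultdict(lambda: [0.0, 0.0])
    for category, score in results:
        totals[category][0] += score  # running sum of scores
        totals[category][1] += 1      # number of examples seen
    return {category: s / n for category, (s, n) in totals.items()}


category_scores = scores_by_category(RESULTS)
overall = sum(score for _, score in RESULTS) / len(RESULTS)

print(f"overall: {overall:.3f}")
for category, score in category_scores.items():
    print(f"{category}: {score:.2f}")
```

With this made-up data, a single overall number hides that the model is much stronger on language understanding than on reasoning or code generation, which is the kind of difference a capability-specific benchmark is meant to expose.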

