What Do LLM Benchmarks Actually Tell Us? How to Run Your Own

GitHub Stardog Union LLM Benchmarks

Interpreting and running standardized language model benchmarks and evaluation datasets for both generalized and task-specific performance assessments. This post breaks down how LLMs are tested, which benchmarks matter, what the scores mean, and how you can use all this to figure out which model fits your needs.

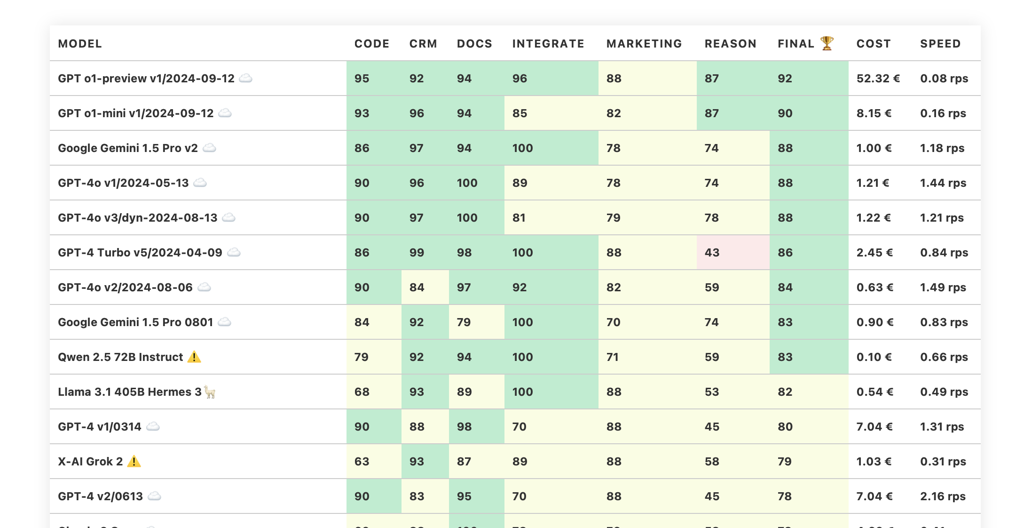

Benchmarking LLM for Business Workloads

What metrics should I use to benchmark my LLM workflows and ensure they align with my business goals? To effectively assess your LLM workflows, start by pinpointing the performance metrics that align most closely with your business objectives.

Large language models (LLMs) are an incredible tool for developers and business leaders to create new value for consumers. They make personal recommendations, translate between unstructured and …

Learn how to build an LLM evaluation framework and explore 20 benchmarks to assess AI model performance effectively.

LLM benchmarks are standardized frameworks that assess LLM performance. They provide a set of tasks for the LLM to accomplish, rate the LLM's ability to complete each task against specific metrics, then produce a score based on those metrics.
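The task, metric, and score loop described above can be sketched in a few lines of Python. This is a minimal illustration, not any particular benchmark's implementation: `Task`, `exact_match`, `run_benchmark`, and `toy_model` are hypothetical names, and `toy_model` is a canned stand-in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    """One benchmark item: a prompt and the answer we expect."""
    prompt: str
    expected: str


def exact_match(prediction: str, expected: str) -> float:
    """Metric: 1.0 if the answers match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == expected.strip().lower())


def run_benchmark(
    model: Callable[[str], str],
    tasks: list[Task],
    metric: Callable[[str, str], float] = exact_match,
) -> float:
    """Run every task through the model and average the metric into one score."""
    if not tasks:
        raise ValueError("a benchmark needs at least one task")
    scores = [metric(model(task.prompt), task.expected) for task in tasks]
    return sum(scores) / len(scores)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; answers from a canned table."""
    canned = {"What is 2 + 2?": "4", "Capital of France?": "Paris"}
    return canned.get(prompt, "I don't know")


# Illustrative tasks only; a real benchmark would load a curated dataset.
TASKS = [
    Task("What is 2 + 2?", "4"),
    Task("Capital of France?", "paris"),
    Task("Largest planet?", "Jupiter"),
]

if __name__ == "__main__":
    print(f"accuracy: {run_benchmark(toy_model, TASKS):.2f}")
```

Here the toy model answers two of the three tasks correctly, so the score is about 0.67. Swapping `exact_match` for another metric function changes what the score means without touching the rest of the loop, which is why the choice of metric matters as much as the choice of tasks.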

GitHub Llmonitor LLM Benchmarks

Understand LLM evaluation with our comprehensive guide. Learn how to define benchmarks and metrics, and measure progress for optimizing your LLM performance.

In this post, we'll walk through some tried and true best practices, common pitfalls, and handy tips to help you benchmark your LLM's performance. Whether you're just starting out or looking for a quick refresher, these guidelines will keep your evaluation strategy on solid ground.

Wondering which LLM is best for your custom application? The principled approach is to create an application-specific benchmark! I explain how, using yourbench to create Q&As from your documents, lighteval to evaluate the performance of different LLMs, and Trelis Advanced Evals for data inspection. Cheers, Ronan.

In this article, you'll learn how to evaluate LLM systems using LLM evaluation metrics and benchmark datasets.
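To make the idea of an application-specific benchmark concrete, here is a rough plain-Python sketch that scores several candidate models on the same Q&A pairs and ranks them. It does not use yourbench or lighteval and is not their API; the Q&A pairs, `model_a`, `model_b`, and `rank_models` are all hypothetical, and token-level F1 is just one reasonable metric choice for short free-text answers.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted answer and a reference answer."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def rank_models(
    models: Dict[str, Callable[[str], str]],
    qa_pairs: List[Tuple[str, str]],
) -> List[Tuple[str, float]]:
    """Score each model on the same Q&A pairs and sort best-first by mean F1."""
    if not qa_pairs:
        raise ValueError("need at least one Q&A pair")
    results = []
    for name, model in models.items():
        scores = [token_f1(model(question), answer) for question, answer in qa_pairs]
        results.append((name, sum(scores) / len(scores)))
    return sorted(results, key=lambda item: item[1], reverse=True)


# Hypothetical Q&A pairs, standing in for questions generated from your own documents.
QA_PAIRS = [
    ("What is the refund window?", "30 days"),
    ("Which plan includes SSO?", "the enterprise plan"),
]


def model_a(question: str) -> str:
    """Hypothetical candidate model with canned answers."""
    return {"What is the refund window?": "30 days"}.get(question, "enterprise plan")


def model_b(question: str) -> str:
    """Hypothetical candidate model with canned answers."""
    return {"What is the refund window?": "14 days"}.get(question, "the starter plan")


if __name__ == "__main__":
    for name, score in rank_models({"model_a": model_a, "model_b": model_b}, QA_PAIRS):
        print(f"{name}: mean F1 = {score:.2f}")
```

Because every candidate sees the same questions and the same metric, the ranking reflects differences between the models rather than differences in the test. Inspecting the individual low-scoring answers, not just the mean, is usually where the useful information is.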

Unify Static LLM Benchmarks Are Not Enough

LLM Performance Benchmarks
