What Are Large Language Model (LLM) Benchmarks?

What are LLM benchmarks? LLM benchmarks are standardized frameworks for assessing the performance of large language models (LLMs). Each benchmark consists of sample data, a set of questions or tasks that test LLMs on specific skills, metrics for evaluating performance, and a scoring mechanism. Together these are designed to assess a model's capabilities, limitations, and overall performance.
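The four components named above (sample data, tasks, a metric, and a scoring mechanism) can be sketched in a few lines of Python. This is a minimal illustration, not any real benchmark's implementation: `BenchmarkItem`, `exact_match`, `run_benchmark`, the sample questions, and `toy_model` (a lookup table standing in for an LLM) are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class BenchmarkItem:
    prompt: str      # the question or task shown to the model
    reference: str   # the expected answer used for scoring


def exact_match(prediction: str, reference: str) -> bool:
    """Metric: case- and whitespace-insensitive string equality."""
    return prediction.strip().lower() == reference.strip().lower()


def run_benchmark(model: Callable[[str], str], items: List[BenchmarkItem]) -> float:
    """Scoring mechanism: fraction of items the model answers correctly."""
    if not items:
        raise ValueError("benchmark needs at least one item")
    correct = sum(exact_match(model(item.prompt), item.reference) for item in items)
    return correct / len(items)


# Hypothetical sample data; real benchmarks ship far larger, curated sets.
SAMPLE_ITEMS = [
    BenchmarkItem("What is 2 + 2?", "4"),
    BenchmarkItem("What is the capital of France?", "Paris"),
    BenchmarkItem("What is the chemical symbol for gold?", "Au"),
    BenchmarkItem("How many days are in a week?", "7"),
]


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM call (assumption: a real model would go here)."""
    canned = {
        "What is 2 + 2?": "4",
        "What is the capital of France?": " paris ",
        "What is the chemical symbol for gold?": "Ag",
    }
    return canned.get(prompt, "I don't know")


score = run_benchmark(toy_model, SAMPLE_ITEMS)
print(f"exact-match accuracy: {score:.2f}")
```

Exact match is only one possible metric; it suits short factual answers but would be too strict for open-ended generation, where other metrics are typically used.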

In practice, a benchmark provides a set of tasks for the LLM to accomplish, rates the LLM's ability to complete each task against specific metrics, and then produces a score based on those metrics. The tasks, questions, and datasets are carefully designed so that every model is tested through the same standardized process.

Why are benchmarks so important? Benchmarks give us metrics to compare different LLMs fairly. They show which model performs better on the tasks being measured.

Not every benchmark measures answer quality. The 🤗 LLM-Perf Leaderboard 🏋️ aims to benchmark the performance (latency, throughput, and memory) of LLMs across different hardware, backends, and optimizations using Optimum-Benchmark and Optimum flavors.
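The latency and throughput figures that a performance leaderboard reports can be illustrated with a small timing loop. The sketch below is an assumption-laden stand-in: it does not use Optimum-Benchmark or reproduce the leaderboard's methodology, it does not measure memory, and `measure_performance` and `fake_generate` are hypothetical names (the latter sleeps briefly and splits the prompt into words instead of calling a real model).

```python
import time
from statistics import mean
from typing import Callable, Dict, List


def measure_performance(
    generate: Callable[[str], List[str]], prompts: List[str]
) -> Dict[str, float]:
    """Time each generation call and derive mean latency and token throughput."""
    if not prompts:
        raise ValueError("need at least one prompt")
    latencies = []
    total_tokens = 0
    for prompt in prompts:
        start = time.perf_counter()
        tokens = generate(prompt)
        latencies.append(time.perf_counter() - start)
        total_tokens += len(tokens)
    total_time = sum(latencies)
    return {
        "mean_latency_s": mean(latencies),
        "throughput_tokens_per_s": (
            total_tokens / total_time if total_time > 0 else float("inf")
        ),
        "total_tokens": float(total_tokens),
    }


def fake_generate(prompt: str) -> List[str]:
    """Hypothetical model: waits 10 ms, then returns the prompt's words as tokens."""
    time.sleep(0.01)
    return prompt.split()


stats = measure_performance(
    fake_generate, ["hello world", "benchmarks compare models fairly"]
)
print(stats)
```

Real performance benchmarks usually add warm-up runs, repeat each measurement several times, and fix the hardware and backend, since all of these affect the numbers.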

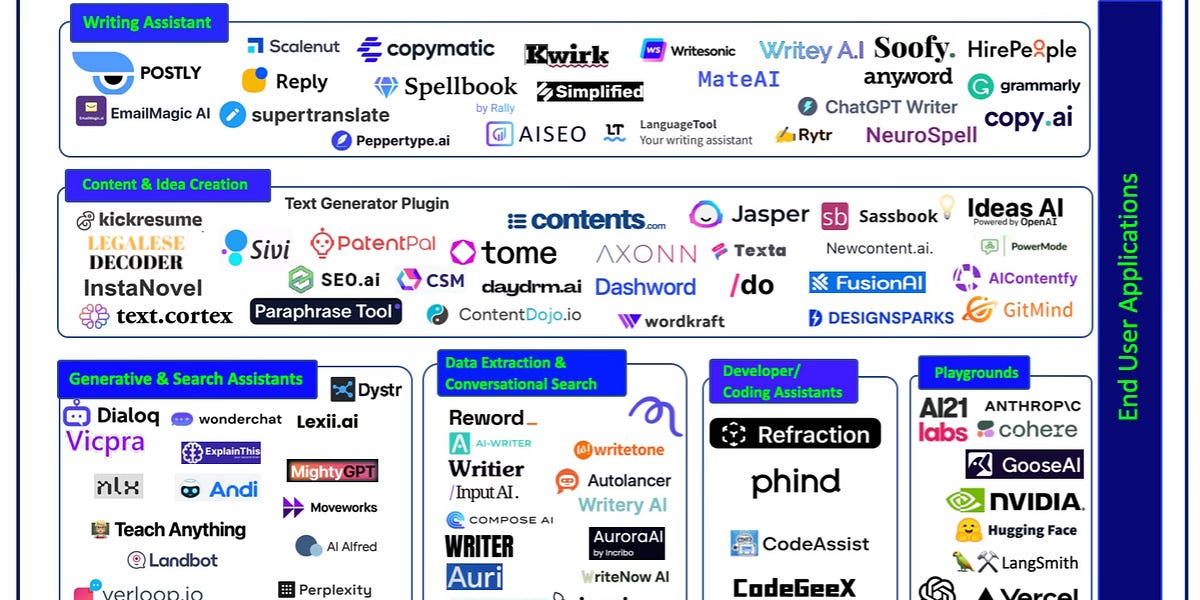

Benchmarks provide insights into areas where a model excels and tasks where it struggles. With the increasing use of LLMs in various sectors, from customer service to code generation, the need for clear, understandable performance metrics is paramount.

Large language model evaluation (i.e., LLM eval) refers to the multidimensional assessment of large language models. Effective evaluation is crucial for selecting and optimizing LLMs. Enterprises have a range of base models and their variations to choose from, but success is uncertain without precise performance measurement.

In this blog post, I will cover a range of methods by which LLMs and downstream applications can be evaluated. The goal is not to cover specific benchmarks or metrics but to discuss the common methods underlying them, and not so much to draw conclusions as to provide the information needed to make an informed decision.

As you work on your generative AI product, you will likely encounter various large language models, each with its own strengths and weaknesses. You will need to evaluate these models against specific benchmarks to find the right fit for your goals.
