Vision Language Models (VLMs) Explained | DataCamp

Vision language models (VLMs) have dramatically improved how models understand both images and language. Early examples used simpler approaches, combining CNNs and RNNs for tasks like ... These models are designed to understand and generate language based on visual inputs, which lets them perform a range of tasks such as describing images, answering questions about them, and even creating images from textual descriptions.

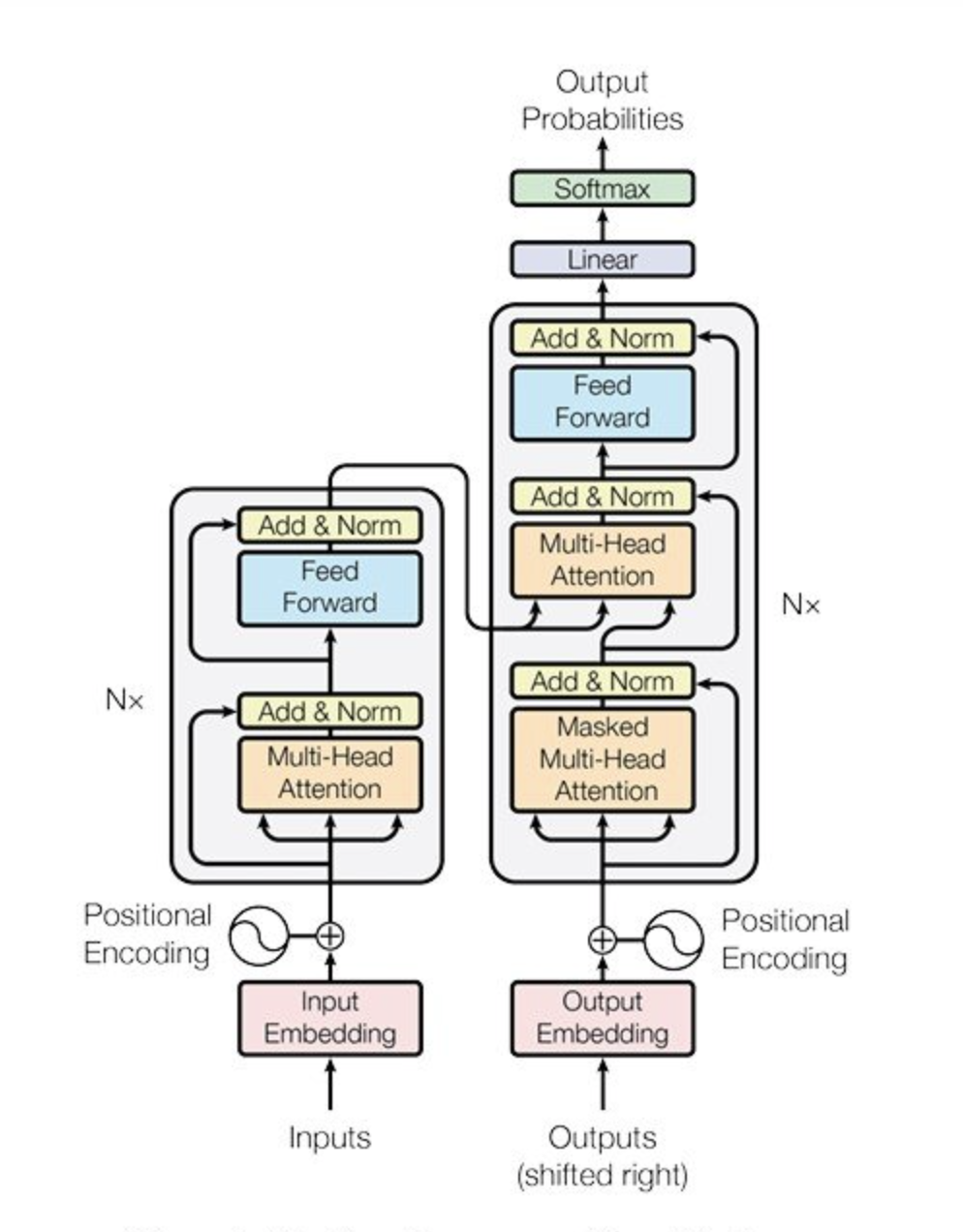

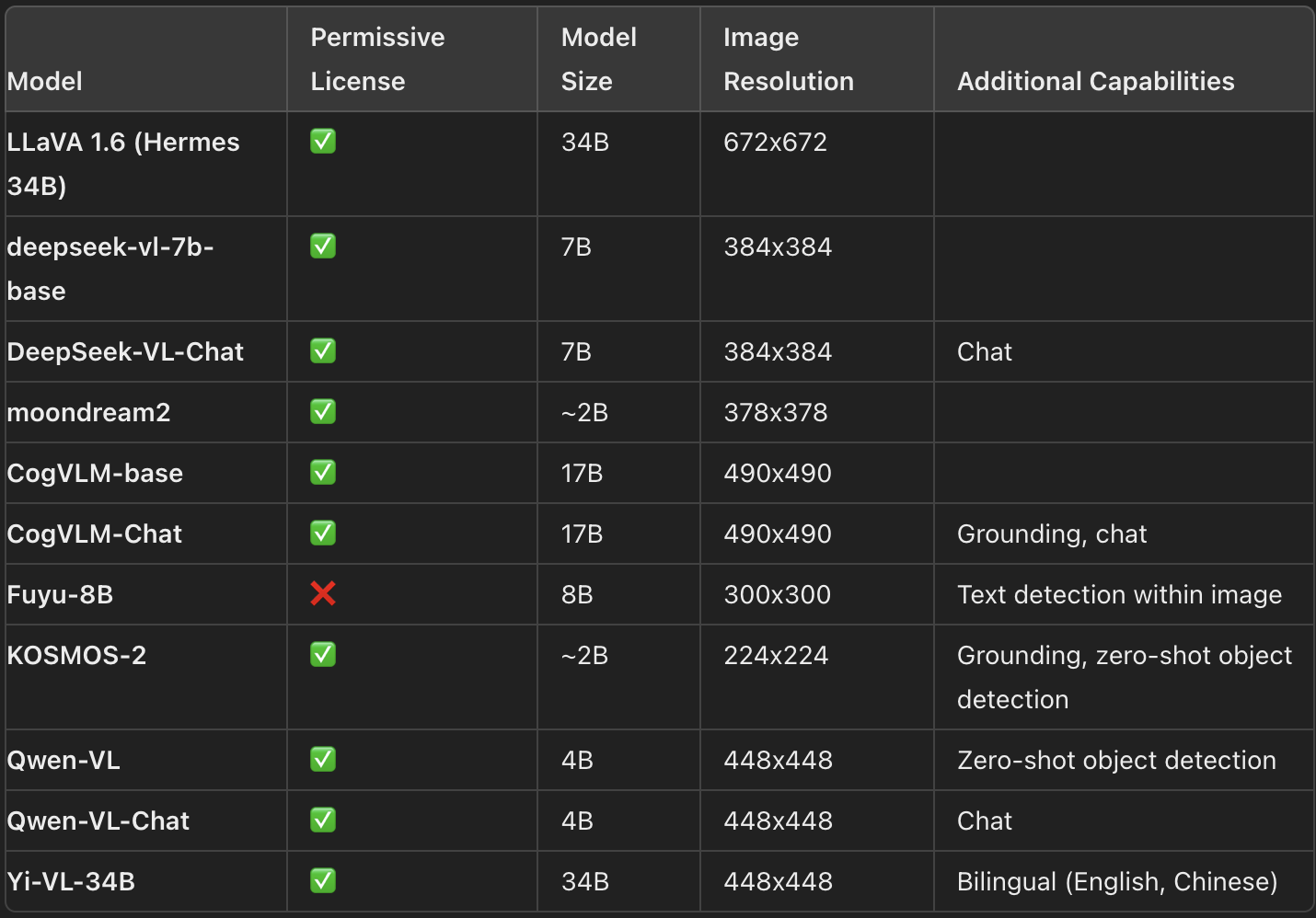

What are vision language models (VLMs)? VLMs are artificial intelligence (AI) models that blend computer vision and natural language processing (NLP) capabilities. By integrating image understanding with language processing through multimodal fusion, they enable applications like image captioning and visual question answering. VLM architectures primarily fall into three groups: dual-encoder models, fusion-encoder models, and hybrid models, each offering its own strengths in processing and interpreting visual and textual data.

In 2025, VLMs are redefining the parameters for new types of artificial intelligence. These models combine visual understanding with language reasoning, linking the two modes of understanding. Before we look at where VLMs can be used, let's understand what they are and how they work. VLMs are advanced AI models that combine the abilities of vision models and language models to handle both images and text.
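To make the dual-encoder idea mentioned above more concrete, here is a minimal sketch in plain Python. It assumes that an image encoder and a text encoder have already mapped their inputs into a shared embedding space; the hand-written vectors, the prompt strings, the function names, and the `temperature` value are all illustrative assumptions, not the output or API of any real model.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def cosine_similarity(a, b):
    """Cosine similarity between two embeddings of equal length."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_embedding, text_embeddings, temperature=0.07):
    """Score one image embedding against several text-prompt embeddings.

    In a dual-encoder setup the two encoders run independently, so the only
    interaction between image and text is this similarity computation.
    The temperature value is an assumed, illustrative constant.
    """
    labels = list(text_embeddings)
    logits = [
        cosine_similarity(image_embedding, text_embeddings[label]) / temperature
        for label in labels
    ]
    return dict(zip(labels, softmax(logits)))


# Toy vectors standing in for real encoder outputs (hypothetical values).
image_embedding = [0.9, 0.1, 0.2]
text_embeddings = {
    "a photo of a cat": [0.8, 0.2, 0.1],
    "a photo of a dog": [0.1, 0.9, 0.3],
}

probs = zero_shot_classify(image_embedding, text_embeddings)
best_label = max(probs, key=probs.get)
```

Because the image and text are encoded separately, text embeddings for a fixed label set can be computed once and reused across many images. A fusion-encoder model would instead process both inputs jointly, which this sketch does not attempt to show.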

Bringing all these points together, the advancement of VLMs marks a pivotal moment in AI, merging computer vision and natural language understanding to unlock new capabilities in processing multimodal data. In this blog, we will delve into the world of VLMs, explaining the underlying technology, its applications, and the benefits it offers in simple terms, so that it is accessible to both technical and non-technical readers. VLMs are deep neural architectures that jointly learn from visual and linguistic modalities using large-scale image–text pairs. They leverage dual encoders and pre-training objectives (contrastive, generative, and alignment) to achieve zero-shot performance in tasks like image classification, detection, and ... In this article, we explore the architectures, evaluation strategies, and mainstream datasets used in developing VLMs, as well as the key challenges and future trends in the field.
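As an illustration of the contrastive pre-training objective named above, the following sketch computes a symmetric cross-entropy loss over a small batch of image–text pairs, in the style commonly used for dual-encoder training. The text does not specify an exact formulation, so treat this as one plausible version under stated assumptions: the function names, the toy embeddings, and the `temperature` value are hypothetical.

```python
import math


def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross_entropy_on_diagonal(logits):
    """Mean cross-entropy where row i's correct class is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        highest = max(row)
        # log-sum-exp, shifted by the row maximum for numerical stability
        log_sum_exp = highest + math.log(sum(math.exp(x - highest) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(image_embeddings, text_embeddings, temperature=0.07):
    """Symmetric contrastive loss for a batch of matched image-text pairs.

    Pair i (image i, text i) is the positive; every other combination in the
    batch acts as a negative. The loss averages the image-to-text and
    text-to-image directions.
    """
    images = [normalize(v) for v in image_embeddings]
    texts = [normalize(v) for v in text_embeddings]
    logits = [[dot(img, txt) / temperature for txt in texts] for img in images]
    logits_transposed = [list(column) for column in zip(*logits)]
    return 0.5 * (
        cross_entropy_on_diagonal(logits)
        + cross_entropy_on_diagonal(logits_transposed)
    )


# Toy batch: in `aligned`, each image embedding matches its paired text.
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
# In `shuffled`, the pairs are swapped, so the loss should be much higher.
shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

Minimizing this loss pulls matched image and text embeddings together and pushes mismatched ones apart, which is what makes similarity-based zero-shot classification possible afterward.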
