Vision Language Models: Multimodality, Image Captioning, Text-to-Image, and Advantages of VLMs

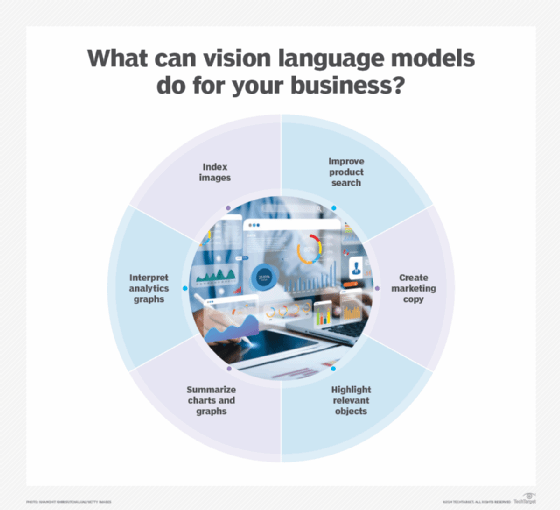

Vision Language Models (VLMs) Explained | DataCamp

Join us in this episode as we explore the world of vision language models (VLMs) and their diverse applications. Because of their multimodal capacity, VLMs are crucial for bridging the gap between textual and visual data, opening up a large range of use cases that text-only models can't address.

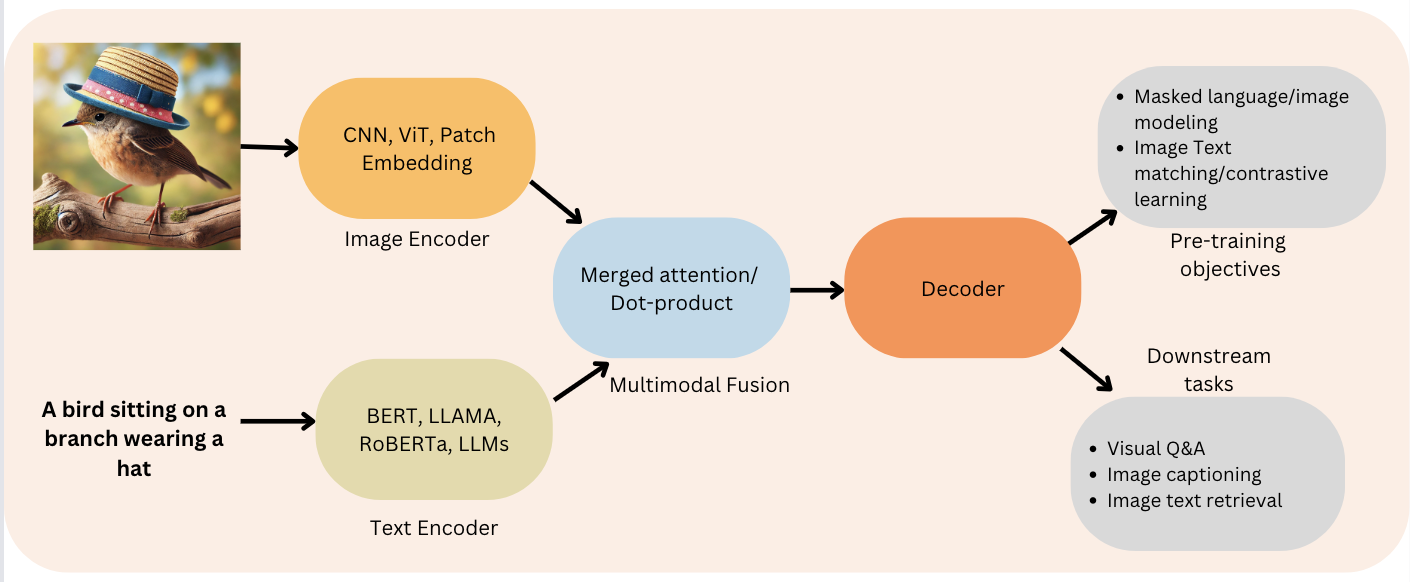

These models are designed to understand and generate language based on visual inputs, which lets them perform a range of tasks such as describing images, answering questions about them, and even creating images from textual descriptions. They have shown significant promise across vision-and-language tasks such as visual question answering, as well as computer vision applications including image captioning and image-text retrieval, highlighting their adaptability to complex multimodal datasets.

MMRL projects the space tokens to text and image representation tokens, facilitating more effective multimodal interactions.

Vision language models (VLMs) integrate image understanding and natural language processing, enabling advanced applications like image captioning and visual question answering through multimodal fusion. The architecture of VLMs primarily includes dual-encoder models, fusion-encoder models, and hybrid models, each offering distinct strengths in processing and interpreting visual and textual data.
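To make the dual-encoder idea concrete, here is a minimal sketch of how image-text retrieval can work once an image encoder and a text encoder have each produced an embedding in a shared space. The embeddings below are hand-written toy vectors, not outputs of a real model, and the function names `cosine_similarity` and `rank_captions` are hypothetical helpers introduced for illustration only.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_captions(image_embedding, caption_embeddings):
    """Sort candidate captions by similarity to the image, best first."""
    scores = {
        caption: cosine_similarity(image_embedding, embedding)
        for caption, embedding in caption_embeddings.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Toy embeddings (assumed values standing in for real encoder outputs).
image_embedding = [0.9, 0.1, 0.0]
caption_embeddings = {
    "a dog on a beach": [0.8, 0.2, 0.1],
    "a plate of pasta": [0.0, 0.1, 0.9],
}

ranked = rank_captions(image_embedding, caption_embeddings)
best_caption = ranked[0][0]
print(best_caption)
```

In a dual-encoder design the two encoders run independently, so caption embeddings can be computed once and reused across many image queries. Fusion-encoder models instead process both modalities together, which this sketch does not attempt to show.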

What Are Vision Language Models (VLMs)? Definition from TechTarget

Communication between two people is often awkward in text alone, improves slightly when voices are involved, and improves greatly when you can also see body language and facial expressions. Vision language models (VLMs) bridge the gap between visual and linguistic understanding in AI. They consist of a multimodal architecture that learns to associate information from the image and text modalities. In simple terms, a VLM can understand images and text jointly and relate them.

VLMs have dramatically improved how models understand both images and language. Early examples used simpler approaches, combining CNNs and RNNs for tasks like ...

Training VLMs for captioning requires large datasets of images paired with human-written descriptions, such as COCO or Flickr30k. The model learns by minimizing the difference between its generated captions and the ground-truth text.
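One common way to measure "the difference between generated captions and the ground-truth text" is token-level cross-entropy, sketched below. This is an assumption about the loss rather than something the text specifies; the per-step probability tables are toy values, and `caption_cross_entropy` is a hypothetical helper, not a library function.

```python
import math

def caption_cross_entropy(predicted_distributions, target_tokens):
    """Average negative log-probability assigned to each ground-truth token.

    predicted_distributions: one dict per caption position, mapping
        token -> probability (toy stand-in for a model's softmax output).
    target_tokens: the human-written caption, split into tokens.
    """
    total = 0.0
    for distribution, token in zip(predicted_distributions, target_tokens):
        # A tiny floor avoids log(0) when the model gives a token no mass.
        probability = max(distribution.get(token, 0.0), 1e-12)
        total += -math.log(probability)
    return total / len(target_tokens)

target = ["a", "dog", "runs"]

# A model that puts most probability on the correct tokens.
good_predictions = [
    {"a": 0.9, "the": 0.1},
    {"dog": 0.8, "cat": 0.2},
    {"runs": 0.7, "sits": 0.3},
]

# A model that mostly prefers the wrong tokens.
poor_predictions = [
    {"a": 0.2, "the": 0.8},
    {"dog": 0.1, "cat": 0.9},
    {"runs": 0.3, "sits": 0.7},
]

good_loss = caption_cross_entropy(good_predictions, target)
poor_loss = caption_cross_entropy(poor_predictions, target)
print(good_loss < poor_loss)
```

Training lowers this loss by adjusting model weights so that, for each image in a paired dataset, the ground-truth caption tokens receive higher probability.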
