Using Large Language Models For Recommendation Systems

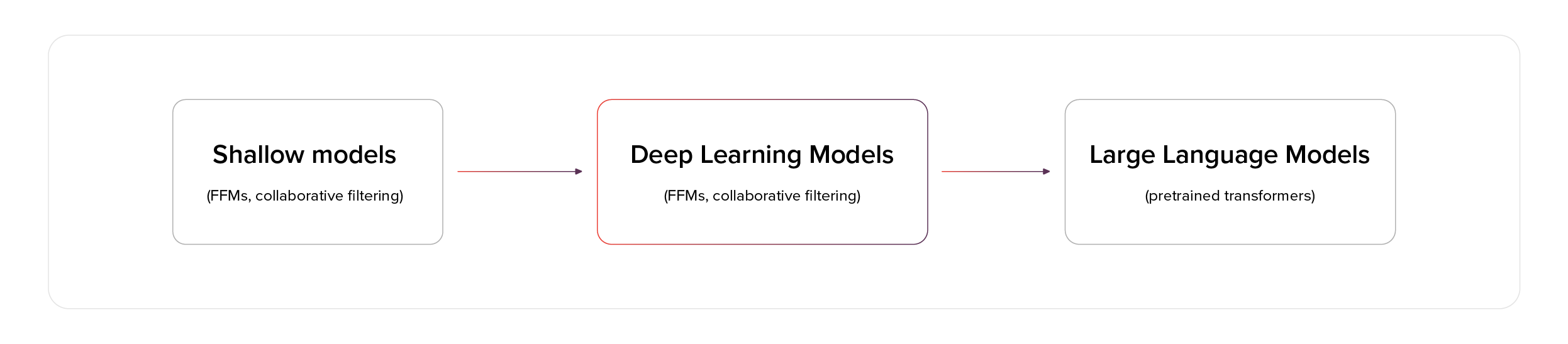

I decided to leverage an open-source T5 model from Hugging Face (T5-large) and built my own custom dataset to fine-tune it to produce recommendations. The dataset consisted of over 100 examples of sports equipment purchases, each paired with the next item to be purchased. We introduce how recommender systems advanced from shallow models to deep models and then to large models, how LLMs enable generative recommendation in contrast to traditional discriminative recommendation, and how to build LLM-based recommender systems.
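
To make the fine-tuning setup more concrete, here is a minimal sketch of how purchase records could be turned into the (source, target) text pairs that a text-to-text model such as T5 trains on. The `PurchaseExample` class, the `recommend next item:` prompt prefix, and the sample records are all assumptions for illustration; the original post does not show its dataset format.

```python
from dataclasses import dataclass


@dataclass
class PurchaseExample:
    history: list[str]  # items bought so far, oldest first
    next_item: str      # the item purchased next (the training target)


def to_text_pair(example: PurchaseExample) -> tuple[str, str]:
    """Turn one purchase record into a (source, target) text pair."""
    # Hypothetical prompt template, not the author's actual format.
    source = "recommend next item: " + ", ".join(example.history)
    return source, example.next_item


# Made-up records for illustration only; not the author's dataset.
examples = [
    PurchaseExample(["tennis racket", "tennis balls"], "racket grip tape"),
    PurchaseExample(["running shoes"], "running socks"),
]

pairs = [to_text_pair(e) for e in examples]
for source, target in pairs:
    print(f"{source!r} -> {target!r}")
```

Each pair would then be tokenized and passed to whatever fine-tuning loop is in use, with the source as model input and the target as the label sequence.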

Large Language Models In Recommendation Systems Techblog

Large language models should be integrated into recommendation systems by designing a robust framework that manages data preprocessing, candidate generation, personalized ranking, and multimodal fusion. The rise of large language models (LLMs), such as Llama and ChatGPT, has opened new opportunities for enhancing recommender systems through improved explainability. This paper provides a systematic literature review focused on leveraging LLMs to...

Integrating multiple types of data, such as images or videos, into recommendation systems using LLMs is not only possible but increasingly beneficial. By equipping LLMs with encoders that translate these diverse data formats into a common token space, we can significantly enhance the system's understanding of and responsiveness to user preferences. LLMs transform recommendation systems by addressing challenges like domain-specific limitations, cold-start issues, and explainability gaps. They enable personalized, explainable, and conversational recommendations through zero-shot learning and open-domain knowledge.
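
The candidate generation and personalized ranking stages of such a framework can be sketched as a two-step pipeline: a cheap filter narrows the catalog, then an LLM is prompted zero-shot to order the survivors. Everything named here is an assumption for illustration: the category-based filter, the prompt wording, and `fake_llm`, which stands in for a real model call so the sketch runs on its own.

```python
from typing import Callable


def generate_candidates(history: list[str], catalog: dict[str, str],
                        limit: int = 5) -> list[str]:
    """First stage: unseen items from categories the user already bought in."""
    seen = set(history)
    categories = {catalog[item] for item in history if item in catalog}
    matches = [item for item, category in catalog.items()
               if category in categories and item not in seen]
    return matches[:limit]


def build_ranking_prompt(history: list[str], candidates: list[str]) -> str:
    """Zero-shot prompt asking the model to order the candidates."""
    lines = [
        "A user bought these items, oldest first: " + ", ".join(history) + ".",
        "Rank the candidates below from most to least likely next purchase.",
        "Answer with one candidate name per line.",
        "Candidates:",
    ]
    lines += [f"- {name}" for name in candidates]
    return "\n".join(lines)


def rank(history: list[str], catalog: dict[str, str],
         llm: Callable[[str], str]) -> list[str]:
    """Second stage: let the LLM order candidates, then validate its reply."""
    candidates = generate_candidates(history, catalog)
    reply = llm(build_ranking_prompt(history, candidates))
    ranked: list[str] = []
    for line in reply.splitlines():
        name = line.strip().lstrip("-").strip()
        # Drop names that are not real candidates (e.g. hallucinated items).
        if name in candidates and name not in ranked:
            ranked.append(name)
    # Append anything the model omitted so no candidate is lost.
    return ranked + [c for c in candidates if c not in ranked]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns a canned reply."""
    return "- racket grip tape\n- golf umbrella\n- tennis balls"


catalog = {
    "tennis racket": "tennis",
    "tennis balls": "tennis",
    "racket grip tape": "tennis",
    "running shoes": "running",
}
result = rank(["tennis racket"], catalog, fake_llm)
print(result)
```

Validating the reply against the candidate list is a deliberate design choice: a generative model can return items that are not in the catalog, so the pipeline keeps only names it recognizes.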

Using Large Language Models As Recommendation Systems By Mohamad

Despite significant advances in existing recommendation approaches based on large language models, they still exhibit notable limitations in multimodal feature recognition and dynamic preference. Structured for progressive learning, the book covers foundational LLM concepts, the evolution from classic to LLM-powered recommendation systems, and advanced topics including end-to-end LLM recommenders, conversational agents, and multimodal integration. In a recently published paper titled "How Can Recommender Systems Benefit from Large Language Models: A Survey", a research team attempted to codify and summarize this potential, as well as the challenges that arise when running LLMs as recommendation systems. In particular, we will clarify how recommender systems benefit from LLMs from a variety of perspectives, including the model architecture, the learning paradigm, and the strong abilities of LLMs such as chatting, generalization, planning, and generation.
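
The idea mentioned earlier, equipping an LLM with encoders that map images or videos into a common token space, can be illustrated with a toy projection step. This is only a sketch of one common approach (a linear map from encoder features to the model's embedding width), not a method described by any of the sources above. The dimensions, the random weights, and the random "features" are placeholders; in a real system the projection would be learned and the features would come from an actual image encoder.

```python
import random


def project(features: list[float], weights: list[list[float]]) -> list[float]:
    """Linear map from encoder feature space to the LLM embedding space."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


EMBED_DIM = 4  # hypothetical LLM embedding width
IMAGE_DIM = 6  # hypothetical image-encoder output width

rng = random.Random(0)

# Randomly initialised projection matrix (EMBED_DIM x IMAGE_DIM).
projection = [[rng.uniform(-1, 1) for _ in range(IMAGE_DIM)]
              for _ in range(EMBED_DIM)]

# Stand-ins for real encoder outputs and real text token embeddings.
image_patches = [[rng.random() for _ in range(IMAGE_DIM)] for _ in range(2)]
text_tokens = [[rng.random() for _ in range(EMBED_DIM)] for _ in range(3)]

# After projection, image patches have the same width as text tokens,
# so both can be placed in a single input sequence.
image_tokens = [project(patch, projection) for patch in image_patches]
sequence = image_tokens + text_tokens
print(len(sequence), "tokens of width", len(sequence[0]))
```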

