Using Conversational Pipeline With TGI · Issue 796 · Huggingface Text

Also, I wonder if you have any suggestions on how, in the current TGI workflow, we can send the type of input I mentioned above: basically prompt context (such as a few previous chats with the assistant).

If you want to, instead of hitting models on the Hugging Face Inference API, you can run your own models locally. A good option is to hit a Text Generation Inference endpoint.
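One way to send previous chats as context is through TGI's OpenAI-compatible Messages API (the `/v1/chat/completions` route). That route takes a list of role/content messages, so earlier exchanges are replayed in order before the new user turn. The sketch below only builds the request body. The helper name `build_chat_payload`, the default `max_tokens`, and the sample conversation are illustrative assumptions. The `"tgi"` model string follows the placeholder used in TGI's own examples.

```python
from typing import Dict, List, Optional, Tuple

Message = Dict[str, str]


def build_chat_payload(
    history: List[Tuple[str, str]],
    user_input: str,
    system_prompt: Optional[str] = None,
    max_tokens: int = 256,
) -> dict:
    """Build a JSON-ready body for TGI's /v1/chat/completions route.

    `history` holds earlier (user, assistant) exchanges. They are replayed in
    order so the model sees the previous chats as context. (Hypothetical helper.)
    """
    messages: List[Message] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for user_turn, assistant_turn in history:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    # The new question always comes last.
    messages.append({"role": "user", "content": user_input})
    return {"model": "tgi", "messages": messages, "max_tokens": max_tokens}


payload = build_chat_payload(
    history=[("What is TGI?", "A server for running LLMs.")],
    user_input="Which languages is it written in?",
    system_prompt="You are a helpful assistant.",
)
```

With this route, the server turns the messages into a prompt using the model's chat template. That assumes the deployed model ships one, so there is no need to hand-format the turns.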

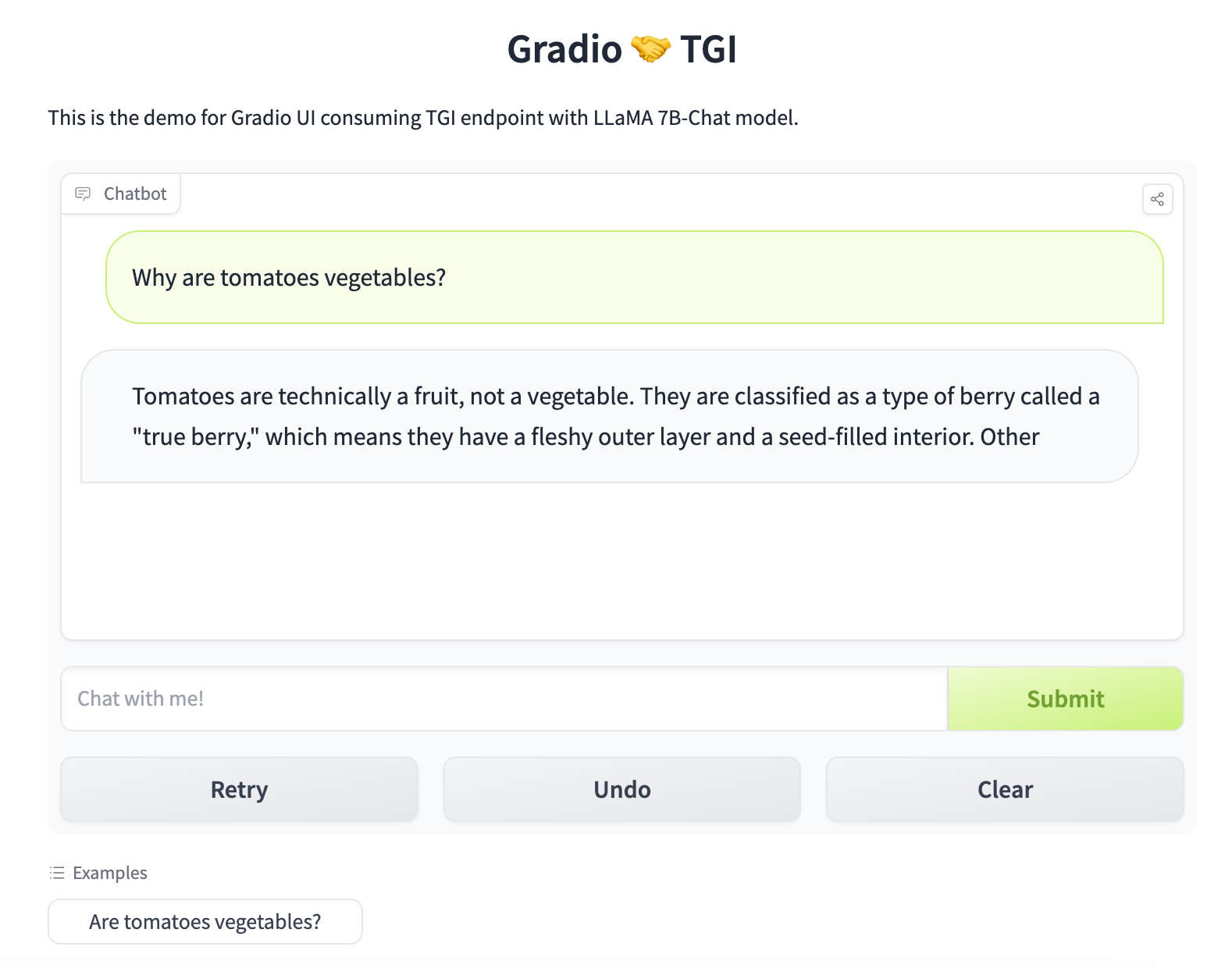

Consuming Text Generation Inference

I'm trying to build a chatbot using a pipeline with a text generation model. So far, I have been able to get a successful response from the LLM using the following snippet:

ChatUI can automatically consume the TGI server and even provides an option to switch between different TGI endpoints. You can try it out at Hugging Chat, or use the ChatUI Docker Space to deploy your own Hugging Chat to Spaces.

Thanks for the suggestions, I'm running some tests! As a side comment: this is true for newer versions of the transformers library. The old one I've been using (4.36) has a dedicated pipeline for chat ("conversational"). It took some time to realize this :).
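Without the Messages API, chat context has to be packed into a single prompt and sent to TGI's `/generate` route. That route takes an `inputs` string plus generation `parameters`. The sketch below assumes a plain `User:`/`Assistant:` layout, which is a hypothetical placeholder: a real model should be prompted in the format it was trained on. The helper names are also illustrative.

```python
import json
from typing import List, Tuple


def flatten_history(history: List[Tuple[str, str]], user_input: str) -> str:
    """Render earlier turns plus the new question as one prompt string.

    The "User:/Assistant:" layout is a hypothetical placeholder template.
    """
    lines = []
    for user_turn, assistant_turn in history:
        lines.append(f"User: {user_turn}")
        lines.append(f"Assistant: {assistant_turn}")
    lines.append(f"User: {user_input}")
    lines.append("Assistant:")  # cue the model to produce the next reply
    return "\n".join(lines)


def build_generate_body(prompt: str, max_new_tokens: int = 64) -> bytes:
    """JSON body for TGI's /generate route: `inputs` plus generation `parameters`."""
    return json.dumps(
        {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    ).encode("utf-8")


prompt = flatten_history([("Hi!", "Hello! How can I help?")], "What is TGI?")
body = build_generate_body(prompt)
```

The body would then be POSTed with `Content-Type: application/json` to the server's `/generate` route. The JSON reply carries the completion under `generated_text`.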

Text Generation Inference Docs Source: Basic Tutorials, Consuming TGI

You can use OpenAI's client libraries, or third-party libraries that expect the OpenAI schema, to interact with TGI's Messages API. Below are some examples of how to utilize this compatibility.

The conversational task was deprecated because it can be confusing: many models can be used for conversation, depending on your specific needs. I'd recommend checking out the text generation task instead.

I decided that I wanted to test its deployment using TGI. I managed to deploy the base FLAN-T5 Large model from Google using TGI, as it was pretty straightforward.

TGI is a production-grade inference engine built in Rust and Python, designed for high-performance serving of open-source LLMs (e.g. Llama, Falcon, StarCoder, BLOOM and many more).
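Because the Messages API follows the OpenAI chat-completions schema, any HTTP client can talk to it. With the official `openai` package, the usual pattern is to point `OpenAI(base_url=".../v1", api_key="-")` at the TGI server. The following is a standard-library-only sketch of the same exchange. The function names, the default `max_tokens`, and the sample response are assumptions for illustration. Only the parsing step is exercised offline; `chat` needs a running server.

```python
import json
import urllib.request
from typing import Dict, List


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-schema chat completion."""
    return response["choices"][0]["message"]["content"]


def chat(base_url: str, messages: List[Dict[str, str]], max_tokens: int = 128) -> str:
    """POST to TGI's OpenAI-compatible /v1/chat/completions route (hypothetical helper)."""
    body = json.dumps(
        {"model": "tgi", "messages": messages, "max_tokens": max_tokens}
    ).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url.rstrip('/')}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        return extract_reply(json.loads(resp.read()))


# Offline check of the parsing step with a hand-written, illustrative response.
sample = {"choices": [{"index": 0, "message": {"role": "assistant", "content": "Hello!"}}]}
reply = extract_reply(sample)
```

Against a live server (the host and port here are assumptions), a call would look like `chat("http://localhost:8080", [{"role": "user", "content": "What is deep learning?"}])`.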
