Understanding Gradient Descent And Backward Propagation In Course Hero

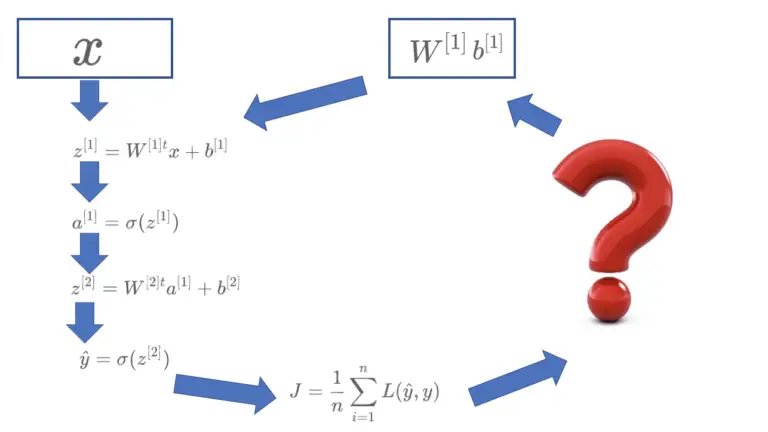

I discuss two topics in machine learning: gradient descent and backward propagation (backpropagation). Both are core concepts in deep learning, and together they enable efficient learning through the application of calculus. In the backward pass, the error between the predicted and actual outputs is computed first. The gradients are then calculated using the derivative of the activation function (here, the sigmoid), and the weights and biases are updated accordingly.
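
As a rough illustration of the backward pass described above, here is a minimal sketch for a single sigmoid neuron. The squared-error loss, the learning rate, the starting values, and the helper names (`sigmoid_derivative`, `train_step`) are assumptions chosen for the example, not something the text specifies.

```python
import math


def sigmoid(z):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_derivative(a):
    """Derivative of the sigmoid, written in terms of its output a = sigmoid(z)."""
    return a * (1.0 - a)


def train_step(w, b, x, y, lr=0.5):
    """One forward pass, one backward pass, and one parameter update."""
    # Forward pass: compute the prediction.
    a = sigmoid(w * x + b)

    # Backward pass: error between the predicted and actual output.
    error = a - y                          # dL/da for L = 0.5 * (a - y)^2 (assumed loss)
    delta = error * sigmoid_derivative(a)  # dL/dz via the chain rule

    # Gradients for the weight and the bias.
    grad_w = delta * x
    grad_b = delta

    # Update step: move against the gradient.
    return w - lr * grad_w, b - lr * grad_b, 0.5 * error ** 2


w, b = 0.0, 0.0
losses = []
for _ in range(200):
    w, b, loss = train_step(w, b, x=1.0, y=1.0)
    losses.append(loss)

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

The loss printed for the last step should be smaller than the first, since every update nudges the prediction toward the target.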

The article covers the basics of gradient descent and backpropagation and the points of difference between the two terms.

Lecture 8: Backpropagation and Gradient Descent, Ivan Titov (with slides from Edoardo Ponti).

We want to compute the cost gradient $\partial E / \partial \mathbf{w}$, which is the vector of partial derivatives. This is the average of $\partial L / \partial \mathbf{w}$ over all the training examples, so in this lecture we focus on computing $\partial L / \partial \mathbf{w}$. We've already been using the univariate chain rule. Recall: if $f(x)$ and $x(t)$ are univariate functions, then $\frac{d}{dt} f(x(t)) = \frac{df}{dx} \frac{dx}{dt}$.

By the time you finish reading this post, you’ll have a clear, detailed understanding of backpropagation and gradient descent. We’ll break down their roles and highlight their differences.
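
To make the relationship between the per-example gradient and the cost gradient concrete, the sketch below applies the chain rule to a one-weight linear model and averages the result over a toy dataset. The model `y = w * x`, the squared-error loss, and the data values are assumptions made for illustration; a finite-difference estimate is included only as a sanity check.

```python
def loss(w, x, t):
    """Per-example loss L for an assumed linear model y = w * x."""
    y = w * x
    return 0.5 * (y - t) ** 2


def dloss_dw(w, x, t):
    """Per-example gradient dL/dw via the chain rule: dL/dw = dL/dy * dy/dw."""
    y = w * x
    dL_dy = y - t
    dy_dw = x
    return dL_dy * dy_dw


def cost(w, data):
    """Cost E: the average of L over all training examples."""
    return sum(loss(w, x, t) for x, t in data) / len(data)


def dcost_dw(w, data):
    """Cost gradient dE/dw: the average of dL/dw over all training examples."""
    return sum(dloss_dw(w, x, t) for x, t in data) / len(data)


# Hypothetical toy data: (input, target) pairs.
data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9)]
w = 0.5

# Central finite difference as an independent check on the analytic gradient.
eps = 1e-6
numeric = (cost(w + eps, data) - cost(w - eps, data)) / (2 * eps)
analytic = dcost_dw(w, data)

print(f"analytic: {analytic:.6f}, numeric: {numeric:.6f}")
```

The two values should agree closely, which is a common way to check a hand-derived gradient before trusting it in training.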

In this assignment, we will demonstrate that the backpropagation algorithm has a solid mathematical basis in gradient descent, while also being relatively simple to implement. We also put together a sheet of exercises (and their solutions) to help you test your understanding of gradient descent and backpropagation, as well as provide useful practice for the exam.

Gradient descent and backpropagation are essential components of training neural networks. Here is how they work together: gradient descent is an optimization algorithm that minimizes the loss function of a neural network by iteratively updating the model parameters, and backpropagation supplies the gradients those updates require.

Learners explore the chain rule, implement activation functions and their derivatives, and see how the forward and backward passes work together to enable learning through gradient descent.
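
The division of labor between the two can be sketched with a tiny network that has one sigmoid hidden unit and a linear output. Everything specific here (the architecture, the squared-error loss, the initial parameters, the learning rate, and the two-point dataset) is an assumption chosen to keep the example small; `backward` plays the role of backpropagation and `gradient_descent` performs the iterative updates.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: hidden activation h and prediction y."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = w2 * h + b2
    return h, y


def backward(params, x, t):
    """Backward pass: propagate the error from the output back to each parameter."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - t                 # dL/dy for L = 0.5 * (y - t)^2 (assumed loss)
    dw2 = dy * h               # output-layer gradients
    db2 = dy
    dh = dy * w2               # error flowing into the hidden unit
    dz = dh * h * (1.0 - h)    # through the sigmoid derivative
    dw1 = dz * x               # hidden-layer gradients
    db1 = dz
    return [dw1, db1, dw2, db2], 0.5 * dy ** 2


def gradient_descent(params, data, lr=0.1, steps=1000):
    """Iteratively update the parameters using gradients averaged over the data."""
    history = []
    n = len(data)
    for _ in range(steps):
        total = [0.0] * len(params)
        total_loss = 0.0
        for x, t in data:
            grads, example_loss = backward(params, x, t)
            total = [acc + g for acc, g in zip(total, grads)]
            total_loss += example_loss
        params = [p - lr * g / n for p, g in zip(params, total)]
        history.append(total_loss / n)
    return params, history


# Hypothetical toy data: (input, target) pairs.
data = [(0.0, 0.0), (1.0, 1.0)]
params, history = gradient_descent([0.5, 0.0, 0.5, 0.0], data)

print(f"initial cost: {history[0]:.4f}, final cost: {history[-1]:.4f}")
```

Keeping `backward` separate from `gradient_descent` mirrors the distinction the text draws: one computes gradients, the other decides how to use them.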
