Understanding Backpropagation With Gradient Descent Programmathically

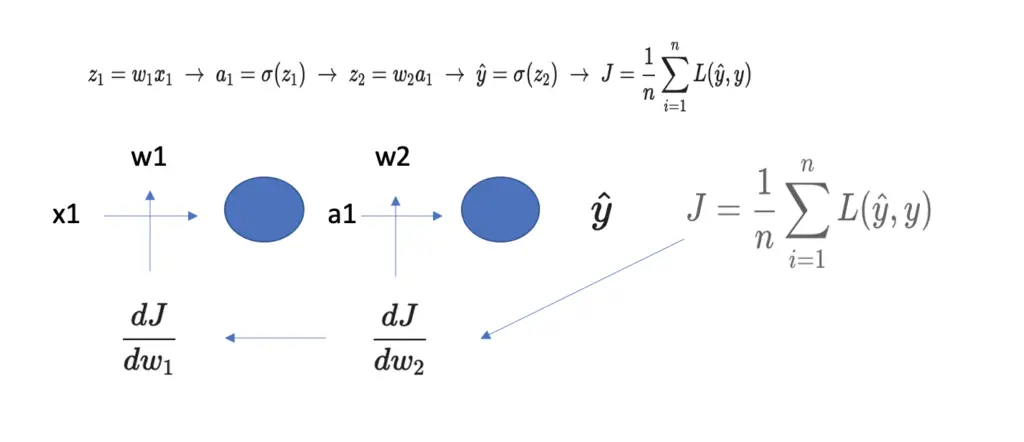

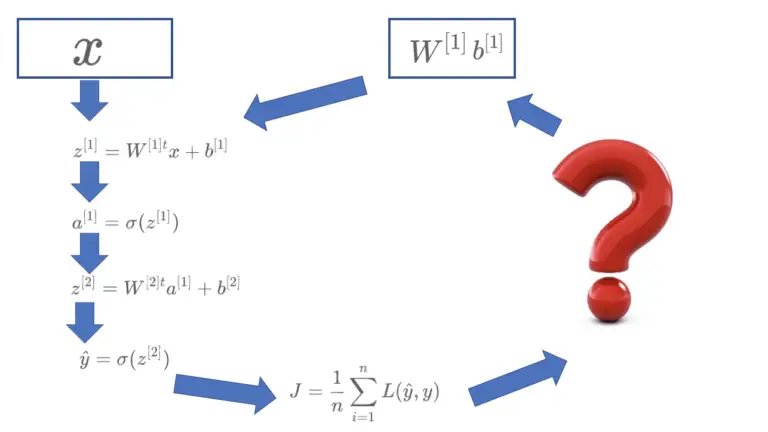

Next, we perform a step-by-step walkthrough of backpropagation using an example and see how backpropagation and gradient descent work together to help a neural network learn. Together, they form the backbone of training neural networks, allowing models to learn from data and improve their performance over time.
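As a rough illustration of how the two pieces fit together, here is a minimal sketch for a single sigmoid neuron with a squared-error loss. The neuron, the loss, and all names and starting values (`forward`, `backward`, `gradient_step`, `w = 0.5`, `lr = 0.5`, and so on) are assumptions made for this example rather than anything taken from the walkthrough itself. Backpropagation applies the chain rule to get the gradients, and gradient descent then uses them to update the weight and the bias.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w, b, x):
    """Forward pass: pre-activation z and activation a of one neuron."""
    z = w * x + b
    a = sigmoid(z)
    return z, a


def loss_fn(a, y):
    """Squared-error loss for a single example."""
    return 0.5 * (a - y) ** 2


def backward(w, b, x, y):
    """Backward pass: chain rule from the loss back to w and b."""
    _, a = forward(w, b, x)
    dL_da = a - y              # derivative of the loss w.r.t. the activation
    da_dz = a * (1.0 - a)      # derivative of the sigmoid
    dL_dz = dL_da * da_dz      # chain rule
    dL_dw = dL_dz * x          # because z = w * x + b
    dL_db = dL_dz
    return dL_dw, dL_db


def gradient_step(w, b, x, y, lr):
    """One gradient descent update using the backpropagated gradients."""
    dw, db = backward(w, b, x, y)
    return w - lr * dw, b - lr * db


# Hypothetical starting values, chosen only for illustration.
w, b, x, y, lr = 0.5, 0.0, 2.0, 1.0, 0.5

_, a_before = forward(w, b, x)
loss_before = loss_fn(a_before, y)

w_new, b_new = gradient_step(w, b, x, y, lr)
_, a_after = forward(w_new, b_new, x)
loss_after = loss_fn(a_after, y)

print("loss before:", loss_before)
print("loss after: ", loss_after)
```

With these values the loss after the update should be lower than before, because the step moves both parameters against their gradients.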

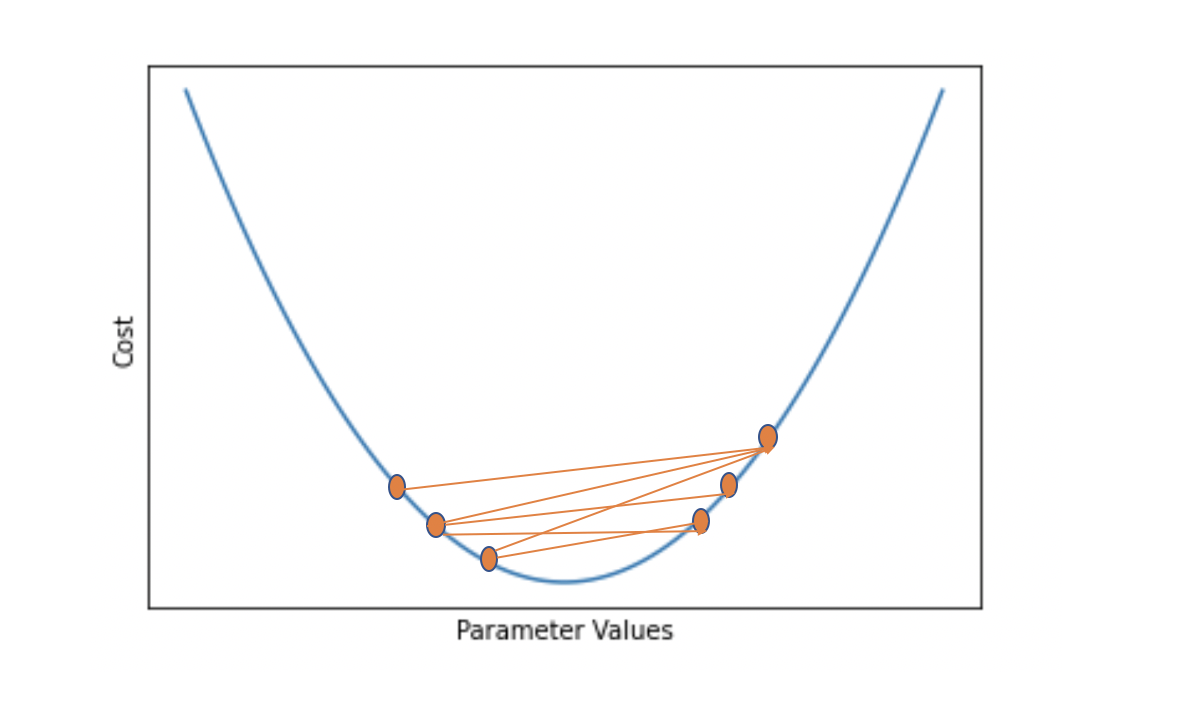

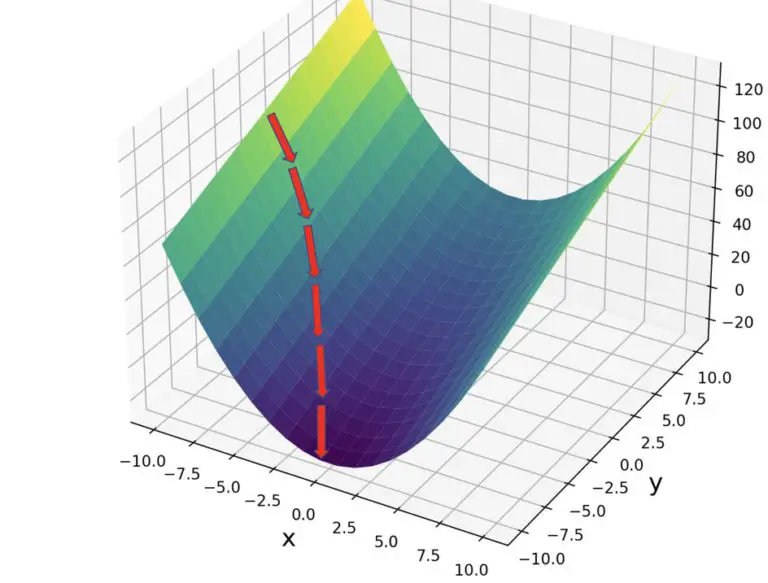

Summary so far: stochastic gradient descent is an optimiser that iteratively updates the parameter estimate with the (scaled) gradient computed from mini-batches of data. Backpropagation is an algorithm that efficiently calculates the gradient with respect to the parameters of deep networks.

In this article you will learn how a neural network can be trained using backpropagation and stochastic gradient descent. The theory is described thoroughly, and a detailed example calculation is included in which both weights and biases are updated.

Before vs. after optimization:

📍 Before gradient descent: you're sitting at a random point on the loss curve.

📍 After one update: you've moved closer to a local minimum.

This is the power of gradient descent: each update nudges the parameters toward lower loss, which is how the model learns.

Backpropagation, short for "backward propagation of errors," is an algorithm used for training neural networks. It calculates the gradient of the loss function with respect to each weight.
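The mini-batch update summarised above can be sketched on a problem small enough to follow by hand. This is an assumed toy setup, not the article's own example: a linear model `w * x + b` is fitted to noise-free data generated from `y = 3x + 1`, and the helper names (`make_data`, `batch_gradient`, `sgd`), the learning rate, and the batch size are all chosen for illustration.

```python
import random


def make_data(n=20):
    """Noise-free toy data from y = 3x + 1 on an even grid in [-1, 1]."""
    xs = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    ys = [3.0 * x + 1.0 for x in xs]
    return xs, ys


def batch_gradient(w, b, batch):
    """Gradient of the mean squared error over one mini-batch."""
    m = len(batch)
    dw = sum(2.0 * (w * x + b - y) * x for x, y in batch) / m
    db = sum(2.0 * (w * x + b - y) for x, y in batch) / m
    return dw, db


def sgd(xs, ys, lr=0.1, batch_size=4, epochs=200, seed=0):
    """Mini-batch SGD: shuffle each epoch, then update once per batch."""
    rng = random.Random(seed)
    data = list(zip(xs, ys))
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            dw, db = batch_gradient(w, b, batch)
            # The scaled gradient is subtracted from the current estimate.
            w -= lr * dw
            b -= lr * db
    return w, b


xs, ys = make_data()
w, b = sgd(xs, ys)
print("w:", w, "b:", b)
```

Because the data contain no noise, every mini-batch agrees on the same minimum, so the estimates should settle very close to `w = 3` and `b = 1`. With noisy data the estimates would instead fluctuate around the minimum, with a spread that depends on the learning rate.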

Backpropagation is the workhorse behind training neural networks, and understanding what happens inside this algorithm is of utmost importance for efficient learning. This post gives an in-depth explanation of backpropagation for training neural networks. Below are the contents: 1. Notation to represent a neural network.

If you're diving into the world of deep learning, you've likely encountered the terms "backpropagation" and "gradient descent." These two techniques form the backbone of training neural networks, allowing them to learn and improve their performance over time.

The notebook includes the creation and training of a basic neural network from scratch, aiming to deepen the understanding of backpropagation and gradient computation. Its sections cover defining and plotting a simple function for intuitive understanding.

In this seventh installment of my deep learning fundamentals series, let's explore further and finally understand backpropagation and gradient descent. These two concepts are the dynamic duo that makes neural networks learn and improve, kind of like a brain gaining superpowers!
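To make the idea of building and training a basic network from scratch more concrete, the following is a minimal sketch under several assumptions: one input, a single hidden layer of three `tanh` units, a linear output, a mean squared-error loss, and a toy target `y = x^2`. None of these choices, nor the names `init_params`, `backprop`, and `train`, come from the notebook mentioned above. They are illustrative only.

```python
import math
import random


def init_params(hidden, seed=0):
    """Small random weights and zero biases for a 1-hidden-layer network."""
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Hidden activations h (tanh) and the linear output y_hat."""
    h = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
    y_hat = sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"]
    return h, y_hat


def mean_loss(p, xs, ys):
    """Mean of 0.5 * (prediction - target)^2 over the dataset."""
    return sum(0.5 * (forward(p, x)[1] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def backprop(p, xs, ys):
    """Gradients of mean_loss w.r.t. every weight and bias (chain rule)."""
    n, hidden = len(xs), len(p["w1"])
    g = {"w1": [0.0] * hidden, "b1": [0.0] * hidden, "w2": [0.0] * hidden, "b2": 0.0}
    for x, y in zip(xs, ys):
        h, y_hat = forward(p, x)
        d_out = (y_hat - y) / n                   # dLoss/dy_hat
        g["b2"] += d_out
        for j in range(hidden):
            g["w2"][j] += d_out * h[j]
            # Propagate the error back through the tanh unit.
            d_pre = d_out * p["w2"][j] * (1.0 - h[j] ** 2)
            g["w1"][j] += d_pre * x
            g["b1"][j] += d_pre
    return g


def train(p, xs, ys, lr=0.1, steps=500):
    """Full-batch gradient descent; returns the loss after every step."""
    history = [mean_loss(p, xs, ys)]
    for _ in range(steps):
        g = backprop(p, xs, ys)
        for j in range(len(p["w1"])):
            p["w1"][j] -= lr * g["w1"][j]
            p["b1"][j] -= lr * g["b1"][j]
            p["w2"][j] -= lr * g["w2"][j]
        p["b2"] -= lr * g["b2"]
        history.append(mean_loss(p, xs, ys))
    return history


xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [x * x for x in xs]
params = init_params(hidden=3)
history = train(params, xs, ys)
print("initial loss:", history[0])
print("final loss:  ", history[-1])
```

A useful sanity check for any hand-written backward pass is to compare one entry of `backprop`'s output with a finite-difference estimate of the same derivative. If the two disagree by more than a small tolerance, the chain-rule code likely contains a mistake.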
