Unable To Install Tgi Locally Issue 1264 Huggingface Text

You need to create a virtual environment for text-generation-inference. It also doesn't look like you changed into the text-generation-inference directory before running the commands. You're going to need network access if you want to install the dependencies. I'm guessing you could prepare everything you need outside of your air-gapped environment and bring it in afterwards.
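One way to prepare dependencies outside an offline (air-gapped) machine is sketched below. It only covers Python packages, using the standard `venv` module and pip's `download`, `--no-index`, and `--find-links` options. The file name `requirements.txt` and the `wheels` directory are placeholders, not paths taken from the TGI repository, and a source build of TGI needs more than Python wheels.

```python
import sys
from pathlib import Path


def offline_install_commands(requirements: str, wheel_dir: str) -> dict[str, list[str]]:
    """Build (but do not run) the commands for an offline Python install.

    `requirements` and `wheel_dir` are placeholder names chosen for this sketch.
    """
    wheels = str(Path(wheel_dir))
    return {
        # Run on any machine: create an isolated environment.
        "venv": [sys.executable, "-m", "venv", ".venv"],
        # Run on a machine WITH network access: fetch packages into wheel_dir.
        "download": [sys.executable, "-m", "pip", "download",
                     "-r", requirements, "-d", wheels],
        # Run on the offline machine after copying wheel_dir across:
        # --no-index stops pip from contacting any package index.
        "install": [sys.executable, "-m", "pip", "install", "--no-index",
                    "--find-links", wheels, "-r", requirements],
    }


if __name__ == "__main__":
    for step, argv in offline_install_commands("requirements.txt", "wheels").items():
        print(f"{step}: {' '.join(argv)}")
```

Each list can be passed to `subprocess.run(argv, check=True)`. The download step should run on a machine with the same operating system, CPU architecture, and Python version as the offline target, otherwise the fetched wheels may not install.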

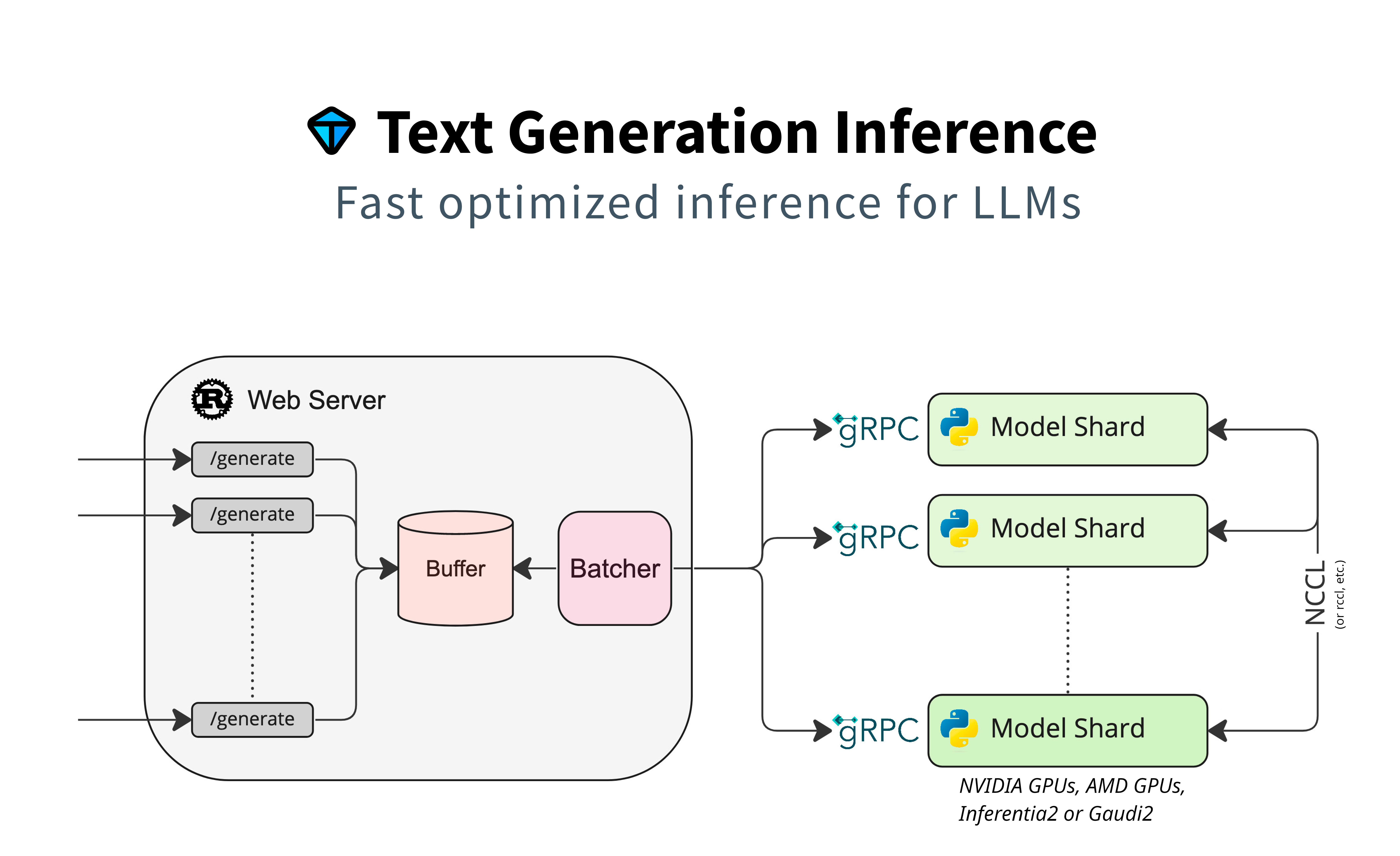

Huggingface Text Generation Inference (TGI) Notes, Clay Technology World

TGI should have loaded the local model for serving, but it failed to recognize it. It seems you passed the path to the model on your instance as the model id (i.e. home ubuntu model 10e ). The TGI server runs inside a Docker container, which doesn't have access to that folder by default.

We're ditching vLLM as a dependency anyway, so it should be gone soon (it has too many hard requirements, and faster kernels exist out there). I see you are running within Anaconda; the issue is most likely coming from there, but I'm not sure how to fix it for you.

I can see that you cloned the repo; however, you'll need to build and install the project locally to run the command above. Please refer to the local install section if you'd like to run locally.
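For the local-model case above, where the container cannot see a folder on the host, the usual remedy is to mount the folder as a volume and pass the in-container path as the model id. The sketch below builds such a `docker run` command. The `/data` mount point, port mapping, and `--shm-size` value follow the pattern commonly shown for the TGI image, but treat them as assumptions to check against the version you run; the helper name and the example path are hypothetical.

```python
from pathlib import Path

# Image name as published on GitHub Container Registry.
TGI_IMAGE = "ghcr.io/huggingface/text-generation-inference:latest"


def tgi_docker_command(host_model_dir: str, port: int = 8080) -> list[str]:
    """Build a `docker run` command that exposes a host model folder to TGI.

    The parent of `host_model_dir` is mounted at /data inside the container,
    so the model is visible there as /data/<folder name>.
    """
    model_dir = Path(host_model_dir).expanduser().absolute()
    return [
        "docker", "run", "--gpus", "all", "--shm-size", "1g",
        "-p", f"{port}:80",                  # host port -> container port 80
        "-v", f"{model_dir.parent}:/data",   # make the host folder visible
        TGI_IMAGE,
        "--model-id", f"/data/{model_dir.name}",  # path as seen in the container
    ]


if __name__ == "__main__":
    # "/models/my-model" is a made-up example path.
    print(" ".join(tgi_docker_command("/models/my-model")))
```

The key point is that `--model-id` must be the path as the container sees it, not the path on the host.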

Help With Debugging Locally Issue 1366 Huggingface Text Generation

In the inference optimisation chapter, there is no image suitable for Apple Silicon for ghcr.io/huggingface/text-generation-inference:latest, and the amd version does not work since it searches for an NVIDIA GPU.

This section explains how to install the CLI tool as well as how to install TGI from source. The strongly recommended approach is to use Docker, as it does not require much setup. Check the quick tour to learn how to run TGI with Docker.

I am hitting a wall here, and am currently trying to clone TGI and use the serving functionality. This is proving to be pretty hard because of the way the project is built: it is more focused on being run as a containerized application.
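Before choosing between Docker and a source install, it can help to check the host for the blockers mentioned above. The following is a rough preflight sketch using only the Python standard library. Looking for `nvidia-smi` and `docker` on `PATH` is a heuristic assumed here, not a check that TGI itself performs, and the function names are made up for illustration.

```python
import platform
import shutil


def preflight() -> dict:
    """Collect basic facts about the host (heuristics only)."""
    return {
        "system": platform.system(),      # e.g. "Linux", "Darwin"
        "machine": platform.machine(),    # e.g. "x86_64", "arm64"
        "docker": shutil.which("docker") is not None,
        "nvidia_smi": shutil.which("nvidia-smi") is not None,
    }


def likely_blockers(report: dict) -> list[str]:
    """Turn a preflight report into human-readable warnings."""
    problems = []
    if report["system"] == "Darwin" and report["machine"] == "arm64":
        problems.append("Apple Silicon host: no suitable image was reported for it.")
    if not report["nvidia_smi"]:
        problems.append("nvidia-smi not found: the image may fail while looking for an NVIDIA GPU.")
    if not report["docker"]:
        problems.append("docker not found: the recommended Docker route is unavailable.")
    return problems


if __name__ == "__main__":
    for line in likely_blockers(preflight()) or ["No obvious blockers found."]:
        print(line)
```

An empty result does not guarantee that TGI will start; it only rules out the specific problems described in this thread.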
