Tutorial 43: Understand What Is ConversationBufferWindowMemory (AI, LangChain, LLM, Machine Learning)

ConversationBufferWindowMemory keeps a list of the interactions in a conversation over time, but it only uses the last k interactions. This is useful for keeping a sliding window of the most recent interactions, so the buffer does not grow too large. Let's first explore the basic functionality of this type of memory.
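To make the sliding-window idea concrete, here is a minimal plain-Python sketch. It does not use LangChain: the class name SlidingWindowMemory and its add and window methods are hypothetical, chosen only for illustration. It assumes one interaction is a (human, AI) pair of strings and relies on collections.deque with maxlen=k, which discards the oldest entry automatically.

```python
from collections import deque


# Hypothetical stand-in for a windowed memory. This is NOT LangChain's class,
# only an illustration of "keep just the last k interactions".
class SlidingWindowMemory:
    def __init__(self, k: int) -> None:
        # A deque with maxlen=k silently drops its oldest entry
        # whenever a (k+1)-th interaction is appended.
        self.interactions: deque[tuple[str, str]] = deque(maxlen=k)

    def add(self, human: str, ai: str) -> None:
        """Record one human/AI exchange."""
        self.interactions.append((human, ai))

    def window(self) -> list[tuple[str, str]]:
        """Return the most recent interactions, oldest first."""
        return list(self.interactions)


memory = SlidingWindowMemory(k=2)
memory.add("Hi, what's up?", "Not much, just hanging.")
memory.add("Nice. What are you up to?", "Reading about memory.")
memory.add("Cool! Any favourites?", "Sliding windows, obviously.")
# Only the last two exchanges remain; the greeting has been dropped.
print(memory.window())
```

Because the deque is bounded, the stored history stays the same size no matter how long the conversation runs; the trade-off is that anything older than k interactions is forgotten entirely.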

Here is the prompt after formatting, as printed in a conversation chain's verbose output: "The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know. Current conversation: Human: Hi, what's up? AI:" Once the chain finishes, the model replies: "Hi there! I'm doing great."

This blog post explores the ConversationBufferWindowMemory technique, an effective way to manage memory in LLM applications built with LangChain. Picking the right type of LangChain memory ensures that the AI responds with clarity and relevance. Let's go through the best practices for managing memory effectively and see when to use which type. Follow this guide if you're trying to migrate off one of the old memory classes, such as ConversationBufferWindowMemory, which keeps the last n messages of the conversation and drops the oldest messages when there are more than n.
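The formatted prompt quoted above can be approximated in a few lines. This sketch assumes a template modeled on that text, with {history} and {input} placeholders; the function name format_prompt and the sample turns are hypothetical, and this is not LangChain's own prompt-building code. Only the last k turns are rendered into the history section.

```python
# Hypothetical sketch: a prompt template modeled on the formatted prompt
# quoted above. This is not LangChain's own prompt-building code.
TEMPLATE = (
    "The following is a friendly conversation between a human and an AI. "
    "The AI is talkative and provides lots of specific details from its context. "
    "If the AI does not know the answer to a question, "
    "it truthfully says it does not know.\n\n"
    "Current conversation:\n"
    "{history}\n"
    "Human: {input}\n"
    "AI:"
)


def format_prompt(turns: list[tuple[str, str]], user_input: str, k: int) -> str:
    """Render only the last k (human, ai) turns into the prompt."""
    # Guard k == 0: turns[-0:] would return the whole list, not an empty one.
    recent = turns[-k:] if k > 0 else []
    history = "\n".join(f"Human: {h}\nAI: {a}" for h, a in recent)
    return TEMPLATE.format(history=history, input=user_input)


turns = [
    ("Hi, what's up?", "Hi there! I'm doing great."),
    ("What's your favourite colour?", "Blue."),
    ("And your favourite number?", "Seven."),
]
# With k=2, the opening "Hi, what's up?" exchange falls out of the window.
print(format_prompt(turns, "Do you remember my first question?", k=2))
```

Note the consequence in the usage example: the model cannot answer "Do you remember my first question?" correctly, because that question is no longer in the window it sees.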

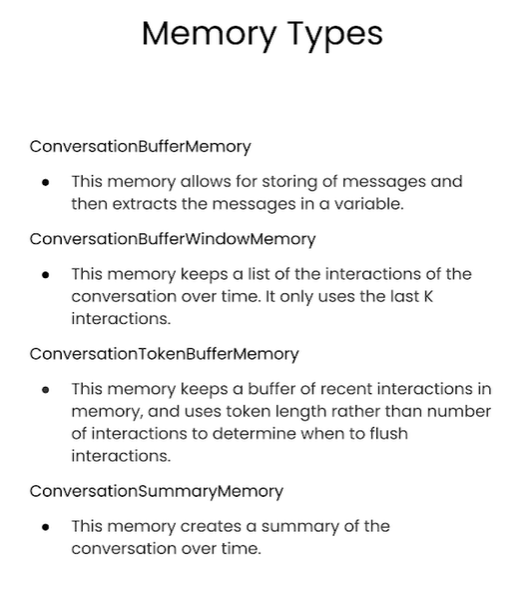

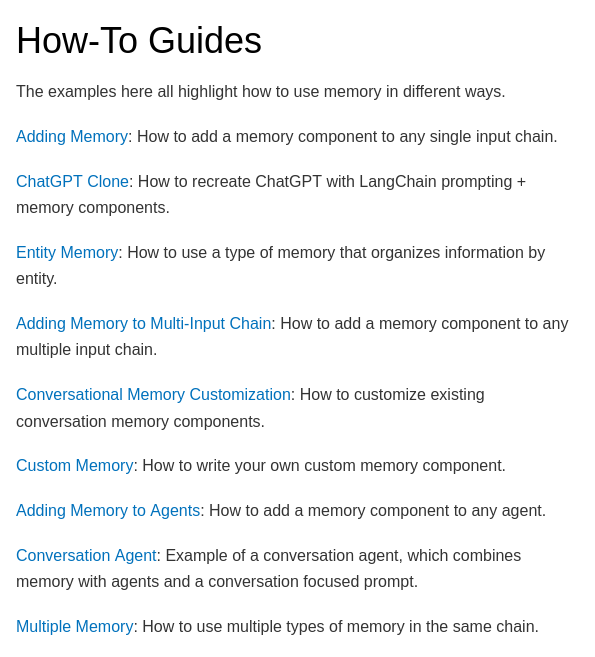

Memory enables a large language model (LLM) to recall previous interactions with the user. By default, LLMs are stateless, meaning each query is processed independently of the others. ConversationBufferWindowMemory keeps track of the last k turns of a conversation: if the number of messages in the conversation exceeds the maximum number of messages to keep, the oldest messages are dropped. Its k parameter sets the number of messages to store in the buffer, clear() clears the memory contents, and the async aload_memory_variables() returns key-value pairs given the text input to the chain.

We delve into LangChain's memory capabilities through three building blocks, LLMChain, ConversationBufferMemory, and ConversationBufferWindowMemory, to help you build more dynamic conversational applications. As for customizing the language of the conversation summary, you can change the AI and human prefixes. By default these are set to "AI" and "Human", but you can set them to anything you want.
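The behaviour described above (a window of the last k turns, a clear operation, key-value pairs returned for the prompt, and configurable Human/AI prefixes) can be mimicked in plain Python. This is a sketch under stated assumptions, not LangChain's implementation: the class name WindowBufferMemory is hypothetical, the method names save_context, load_memory_variables, and clear are borrowed from LangChain's memory interface only for familiarity, the "history" key is an assumption, and in this sketch k counts turns, so up to 2k messages are exposed.

```python
# Plain-Python sketch of a window buffer memory. The class is hypothetical;
# method names echo LangChain's memory interface but this is not library code.
class WindowBufferMemory:
    def __init__(self, k: int = 5, human_prefix: str = "Human",
                 ai_prefix: str = "AI", memory_key: str = "history") -> None:
        self.k = k                      # number of recent turns to expose
        self.human_prefix = human_prefix
        self.ai_prefix = ai_prefix
        self.memory_key = memory_key
        # Full log of (speaker, text) messages; only the window is exposed.
        self.messages: list[tuple[str, str]] = []

    def save_context(self, inputs: dict, outputs: dict) -> None:
        """Append one turn: the user's input and the model's output."""
        self.messages.append((self.human_prefix, inputs["input"]))
        self.messages.append((self.ai_prefix, outputs["output"]))

    def load_memory_variables(self, inputs: dict) -> dict:
        """Return key-value pairs for the prompt, covering only the last k turns."""
        # One turn = two messages, so the window spans 2 * k messages.
        window = self.messages[-2 * self.k:] if self.k > 0 else []
        history = "\n".join(f"{speaker}: {text}" for speaker, text in window)
        return {self.memory_key: history}

    def clear(self) -> None:
        """Clear memory contents."""
        self.messages.clear()


memory = WindowBufferMemory(k=1, human_prefix="User", ai_prefix="Assistant")
memory.save_context({"input": "Hi"}, {"output": "Hello!"})
memory.save_context({"input": "What's 2 + 2?"}, {"output": "4"})
print(memory.load_memory_variables({}))
# {'history': "User: What's 2 + 2?\nAssistant: 4"}
memory.clear()
print(memory.load_memory_variables({}))
# {'history': ''}
```

Keeping the full log and slicing it on read keeps the sketch simple; if storage itself should be capped, a bounded collections.deque would work instead.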
