Tracking: Batching Support (Issue #1118, mlc-ai/mlc-llm, GitHub)

Any updates on this?

We have updated the REST API documentation with the latest MLC Serve instructions (llm.mlc.ai docs deploy rest). Closing this issue now that the work is complete.

mlc-ai/mlc-llm on GitHub: Enable everyone to develop, optimize and ...

This issue tracks the migration of the current REST API backend to MLC Serve. MLC Serve implements a paged KV cache interface and supports batched processing of prompts.

As the SLM rollout is almost complete (#1420), a few steps remain to clear up the confusion.
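To make the paged KV cache idea mentioned above more concrete, here is a minimal sketch of the page-table bookkeeping such a cache typically needs. It is not MLC Serve's implementation: the class `PagedKVCache` and its methods `add_sequence`, `append_tokens`, and `remove_sequence` are hypothetical names chosen for illustration, and the sketch tracks only page ownership, not the key/value tensors themselves.

```python
from dataclasses import dataclass, field


@dataclass
class PagedKVCache:
    """Toy page-table bookkeeping for a paged KV cache (illustrative only)."""

    num_pages: int   # total number of fixed-size pages in the pool
    page_size: int   # number of tokens each page can hold
    free_pages: list = field(init=False)
    page_table: dict = field(default_factory=dict)  # seq_id -> list of page ids
    seq_len: dict = field(default_factory=dict)     # seq_id -> tokens stored

    def __post_init__(self):
        # Every page starts out free.
        self.free_pages = list(range(self.num_pages))

    def add_sequence(self, seq_id):
        """Register a new sequence that owns no pages yet."""
        if seq_id in self.page_table:
            raise ValueError(f"sequence {seq_id!r} already exists")
        self.page_table[seq_id] = []
        self.seq_len[seq_id] = 0

    def append_tokens(self, seq_id, n):
        """Reserve room for n more tokens, allocating pages only when needed."""
        total_tokens = self.seq_len[seq_id] + n
        pages_needed = -(-total_tokens // self.page_size)  # ceiling division
        extra = pages_needed - len(self.page_table[seq_id])
        if extra > len(self.free_pages):
            raise MemoryError("out of KV cache pages")
        for _ in range(extra):
            self.page_table[seq_id].append(self.free_pages.pop())
        self.seq_len[seq_id] = total_tokens

    def remove_sequence(self, seq_id):
        """Return all pages owned by a finished sequence to the free pool."""
        self.free_pages.extend(self.page_table.pop(seq_id))
        del self.seq_len[seq_id]


# Two prompts of different lengths share one page pool, as in a batch.
cache = PagedKVCache(num_pages=8, page_size=4)
cache.add_sequence("a")
cache.add_sequence("b")
cache.append_tokens("a", 5)  # 5 tokens span two pages
cache.append_tokens("b", 3)  # 3 tokens fit in one page
print(len(cache.page_table["a"]), len(cache.page_table["b"]), len(cache.free_pages))
```

Because pages are handed out on demand and returned when a sequence finishes, sequences of very different lengths can share the same pool, which is what makes batching several prompts together practical.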

Feature Request: NPU Support (Issue #1361, mlc-ai/mlc-llm, GitHub)

As SLM's development is wrapping up, this issue tracks the steps for rolling out SLM, including documentation, tutorials, the release of prebuilt model weights, and more.

This is a pinned issue that gives an overview of MLC LLM project tracking. The project tracking board contains the list of tracking issues. We are an open source community and we love contributions.

The current mlc llm chat is still backed by ChatModule. We are working on a JSONFFIEngine, with pure JSON string input and output, so that we can expose a broader set of interfaces (as in OpenAI) to a broader set of backends. The transition will happen once JSONFFIEngine lands.

I see that MLC doesn't currently support batched inference. Is there any work being done there? I'd love to pick it up otherwise.

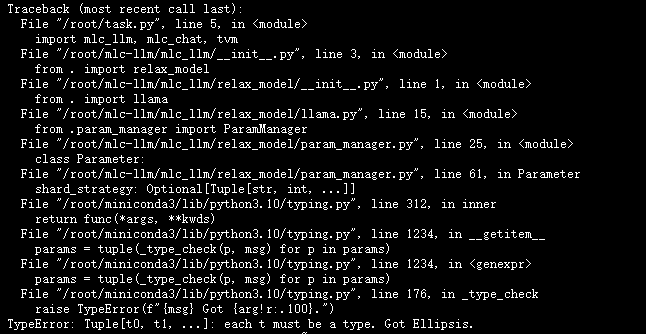

