Tokenization For Improved Data Security Overview Of Security

Security-sensitive applications use tokenization to replace sensitive data, such as personally identifiable information (PII) or protected health information (PHI), with tokens to reduce security risks. By replacing sensitive data with non-sensitive tokens, tokenization offers a more secure way to store, process, and transmit data, reducing the risk of data breaches and helping organizations meet industry standards.

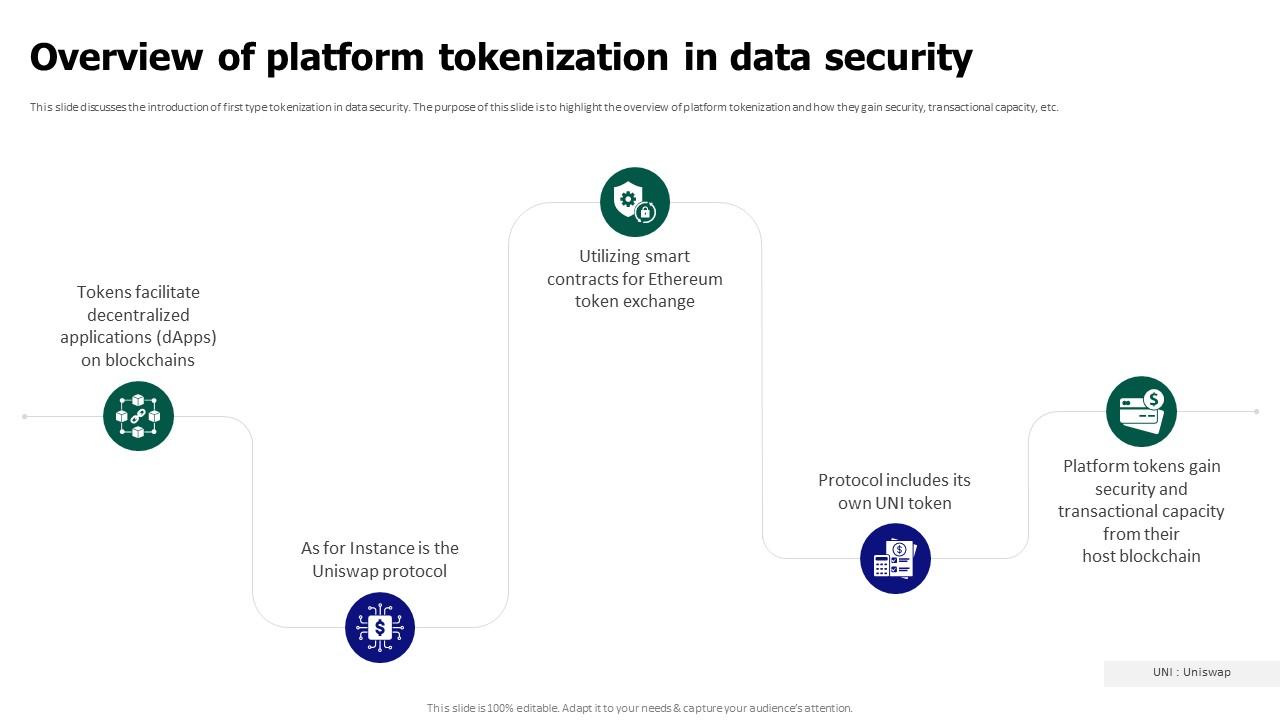

The security and risk-reduction benefits of tokenization require that the tokenization system be logically isolated and segmented from the data processing systems and applications that previously processed or stored the sensitive data now replaced by tokens. In healthcare, tokenization helps prevent unauthorized access to patient data, minimizes the risk of data breaches, and facilitates secure data sharing among healthcare providers, payers, and research institutions.

Data tokenization is a security technique that protects sensitive data by replacing it with a non-sensitive substitute, called a token. Tokens are unique identifiers that have no intrinsic value and, on their own, cannot be used to recreate the original data. Among the data protection techniques available, tokenization stands out because it can replace real data with format-preserving tokens.
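To make the idea of a format-preserving token concrete, here is a minimal Python sketch. The function name `format_preserving_token` is hypothetical and not taken from any product. It only shows the shape-preserving aspect: digits are replaced with random digits, letters with random letters of the same case, and separators are kept. It is not a cryptographic format-preserving encryption scheme, and the token it returns can be mapped back to the original only if the tokenization system stores that mapping.

```python
import secrets
import string


def format_preserving_token(value):
    """Return a random token with the same length and character classes as value.

    Illustrative only: the output is random, so recovering the original
    requires a separately stored token-to-value mapping.
    """
    out = []
    for ch in value:
        if ch.isdigit():
            out.append(secrets.choice(string.digits))
        elif ch.isalpha():
            alphabet = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
            out.append(secrets.choice(alphabet))
        else:
            out.append(ch)  # keep separators such as '-' or ' ' unchanged
    return "".join(out)


if __name__ == "__main__":
    # Prints a random 19-character string shaped like the input.
    print(format_preserving_token("4111-1111-1111-1111"))
```

Because the token keeps the original layout, downstream systems that validate field length or separators can usually store it without schema changes.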

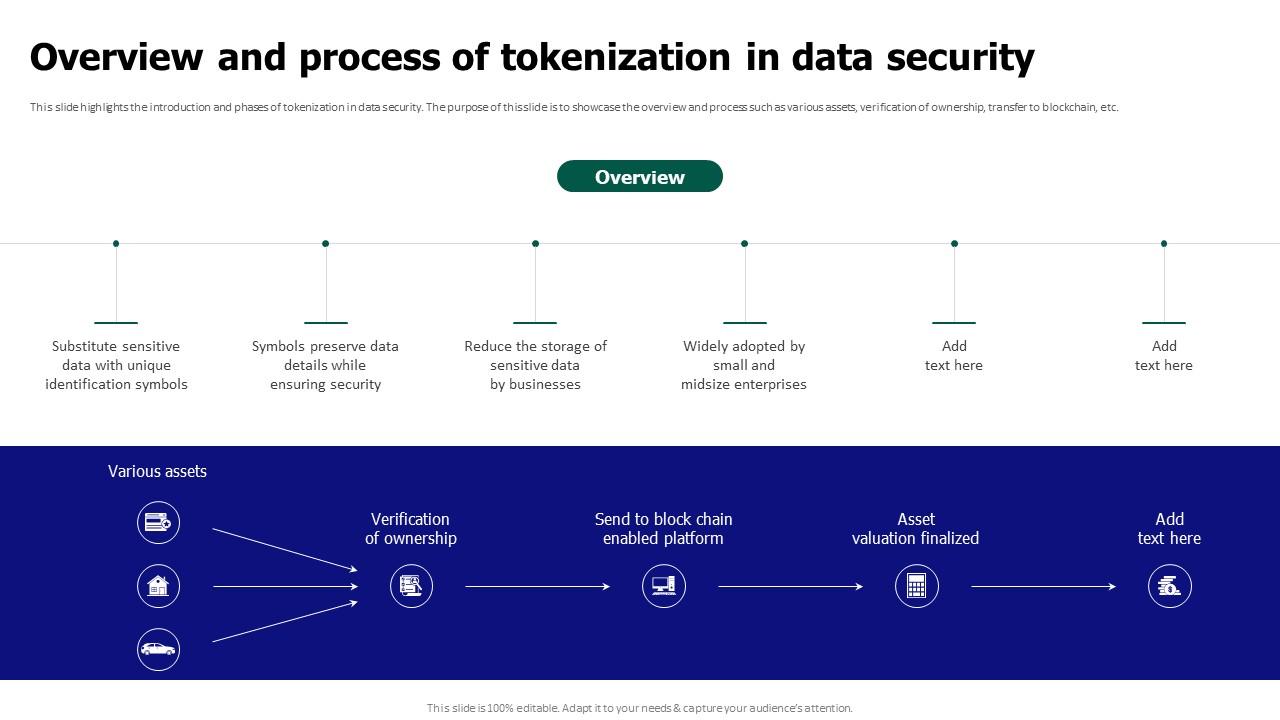

This article covers the role of tokenization in modern data security: its core concepts, its operational mechanisms, and its advantages over traditional encryption methods. Data tokenization replaces sensitive data with random tokens, so that data intercepted or accessed without authorization during storage and transmission reveals nothing useful. Tokenization supports regulatory compliance and also reduces risks from insider threats, which strengthens overall data protection and customer trust.

In data security, tokenization is the process of converting sensitive data into a non-sensitive digital replacement, called a token, that maps back to the original. For example, sensitive data can be mapped to a token and placed in a digital vault for secure storage. As businesses navigate an increasingly complex web of privacy regulations, data tokenization, which replaces sensitive data with non-sensitive placeholders without compromising usability, is likely to play a growing role in digital security.
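The vault mapping described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a production design: the `TokenVault` class, its method names, and the `tok_` prefix are all hypothetical, and the in-memory dictionaries stand in for what would be an isolated, access-controlled store.

```python
import secrets


class TokenVault:
    """In-memory stand-in for an isolated token vault (illustration only)."""

    def __init__(self):
        self._token_to_value = {}  # token -> original sensitive value
        self._value_to_token = {}  # original value -> token, so repeats reuse one token

    def tokenize(self, value):
        """Return the token for value, creating a new random one if needed."""
        existing = self._value_to_token.get(value)
        if existing is not None:
            return existing
        token = "tok_" + secrets.token_urlsafe(16)
        while token in self._token_to_value:  # regenerate on the unlikely collision
            token = "tok_" + secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        """Look up the original value; raises KeyError for an unknown token."""
        return self._token_to_value[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("123-45-6789")
    print(token)                    # random token, unrelated to the input
    print(vault.detokenize(token))  # original value, available only via the vault
```

The token is generated randomly instead of being derived from the input, so the only route back to the original value is a lookup in the vault. That is why the vault needs to be isolated from the applications that handle the tokens.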
