TI-Pooling: Transformation-Invariant Pooling for Feature Learning in Convolutional Neural Networks

What is TI-pooling? TI-pooling is a simple technique for making a convolutional neural network (CNN) transformation-invariant. The paper presents a deep neural network topology that incorporates a simple-to-implement transformation-invariant pooling operator (TI-pooling). This operator efficiently handles prior knowledge about nuisance variations in the data, such as rotation or scale changes.
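The idea can be illustrated with a minimal sketch: apply a fixed set of transformations to the input, run every transformed copy through the same feature extractor with shared weights, and take the element-wise maximum over the resulting feature vectors. The code below is not the authors' implementation. The names `toy_features` and `ti_pool` are hypothetical, the feature extractor is a toy stand-in for a CNN branch, and the transformation set is assumed to be the four 90-degree rotations.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def toy_features(image, kernels):
    """Hypothetical stand-in for the shared CNN branch.

    Cross-correlates the image with each kernel ('valid' mode),
    applies ReLU, and averages over space: one feature per kernel.
    """
    kh, kw = kernels.shape[1:]
    windows = sliding_window_view(image, (kh, kw))       # (H', W', kh, kw)
    maps = np.einsum("ijkl,nkl->nij", windows, kernels)  # (n, H', W')
    return np.maximum(maps, 0.0).mean(axis=(1, 2))       # (n,)


def ti_pool(image, kernels):
    """Element-wise max of the shared features over a transformation set.

    Assumption: the set is the four rotations by multiples of 90 degrees.
    """
    rotated = [np.rot90(image, k) for k in range(4)]
    feats = np.stack([toy_features(r, kernels) for r in rotated])
    return feats.max(axis=0)


rng = np.random.default_rng(0)
image = rng.standard_normal((8, 8))
kernels = rng.standard_normal((3, 3, 3))  # three 3x3 kernels

pooled = ti_pool(image, kernels)
pooled_rotated = ti_pool(np.rot90(image), kernels)
print(np.allclose(pooled, pooled_rotated))
```

Because the four rotations form a closed set (rotating the input only permutes the transformed copies), the pooled features are the same for an image and its rotated version. For transformation sets that are not closed in this way, such as a handful of scale factors, the invariance would only be approximate.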


The TI-pooling project is documented in README.md on the master branch of the dlaptev TI-pooling repository.

A related paper presents the transformation-invariant restricted Boltzmann machine, which compactly represents data by its weights and their transformations and achieves invariance of the feature representation via probabilistic max pooling.


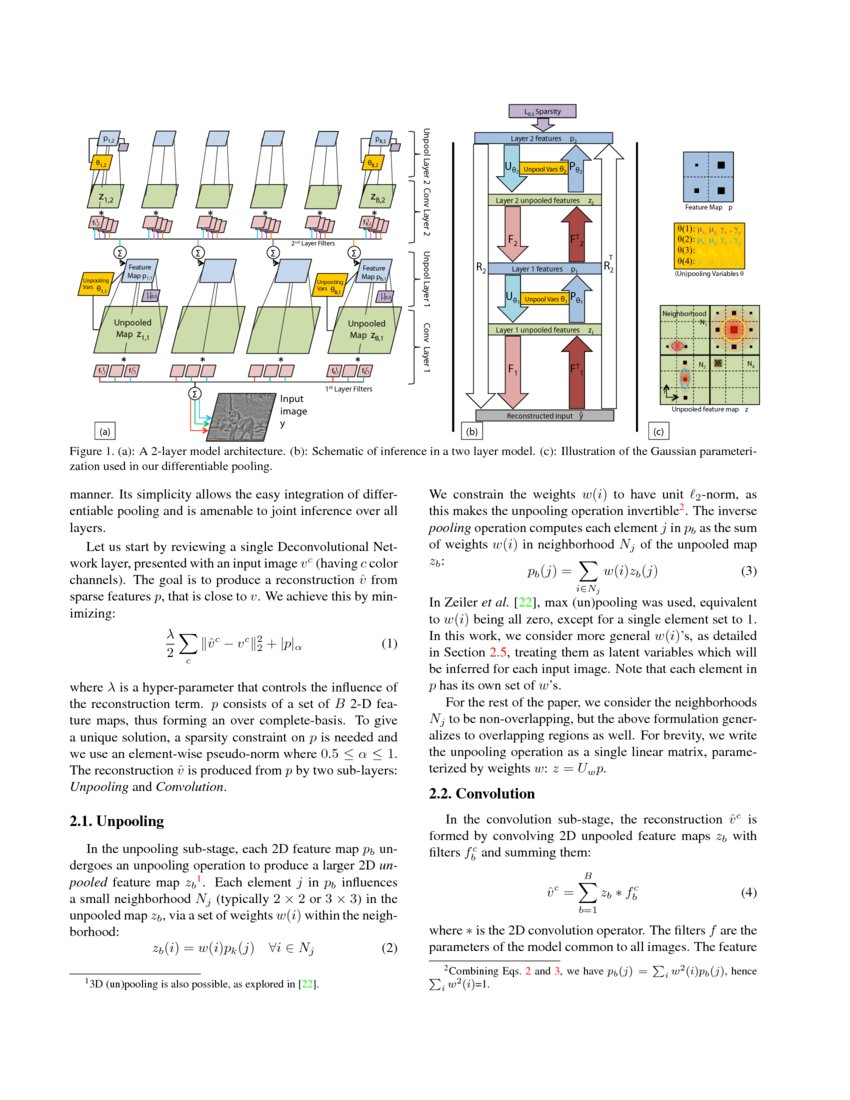
