This AI Paper From Stanford Introduces Codebook Features For Sparse And

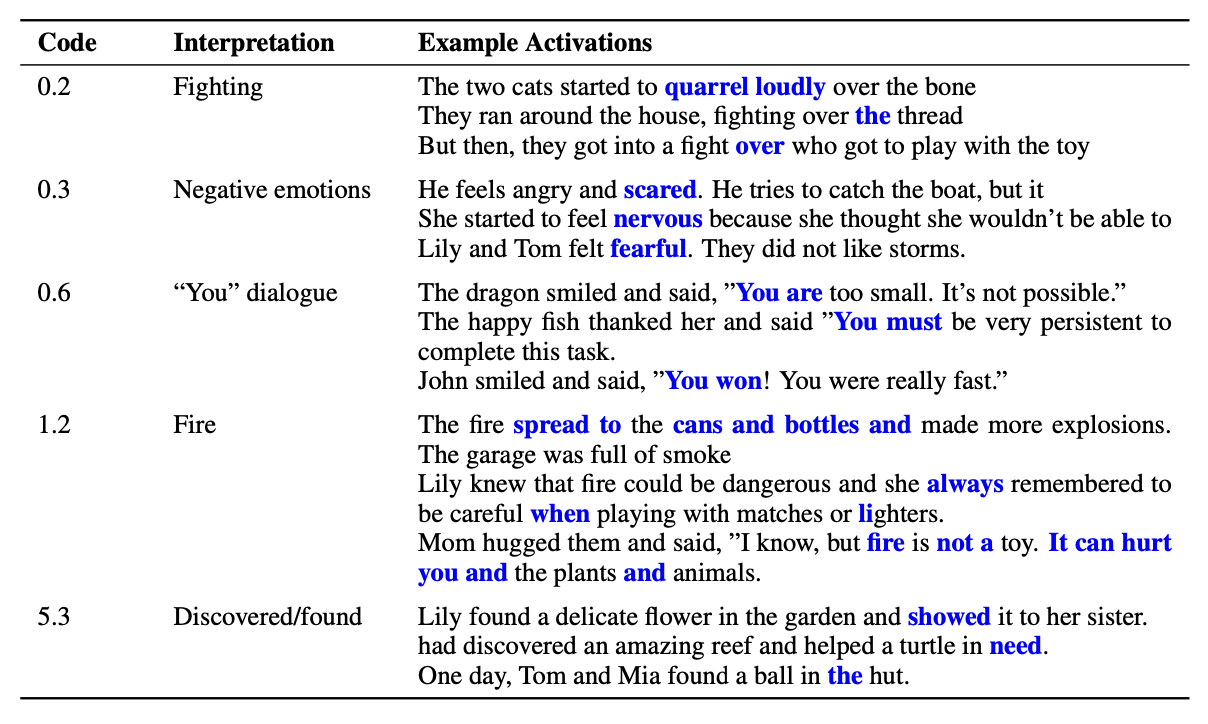

Codebook Features: Sparse and Discrete Interpretability for Neural

In our new paper, we introduce codebook features to make progress on the challenges of interpreting and controlling neural networks by training them with sparse, discrete hidden states. Codebook features attempt to combine the expressivity of neural networks with the sparse, discrete state often found in traditional software. We explore whether we can train neural networks to have hidden states that are sparse, discrete, and more interpretable by quantizing their continuous features into what we call codebook features.
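To make "quantizing continuous features" concrete, here is a minimal sketch of plain vector quantization: a continuous hidden vector is replaced by the nearest entry of a small codebook. The function name `quantize`, the Euclidean distance metric, and the toy codebook are illustrative assumptions rather than the paper's implementation; in the method described here, the codebook vectors are learned rather than fixed by hand.

```python
import math

def quantize(x, codebook):
    """Replace continuous vector x with the closest codebook vector.

    Uses Euclidean distance and returns (index, code_vector). This is plain
    single-code vector quantization, shown only to illustrate how a dense
    hidden state can be discretized into one of a finite set of codes.
    """
    best_idx = min(range(len(codebook)), key=lambda i: math.dist(x, codebook[i]))
    return best_idx, codebook[best_idx]

# Hypothetical toy codebook with three 2-D code vectors.
codebook = [
    [1.0, 0.0],
    [0.0, 1.0],
    [-1.0, 0.0],
]
hidden = [0.9, 0.2]
idx, code = quantize(hidden, codebook)
print(idx, code)  # 0 [1.0, 0.0]
```

After quantization, the hidden state is fully described by a single integer index, which is what makes it easier to inspect than the original continuous vector.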

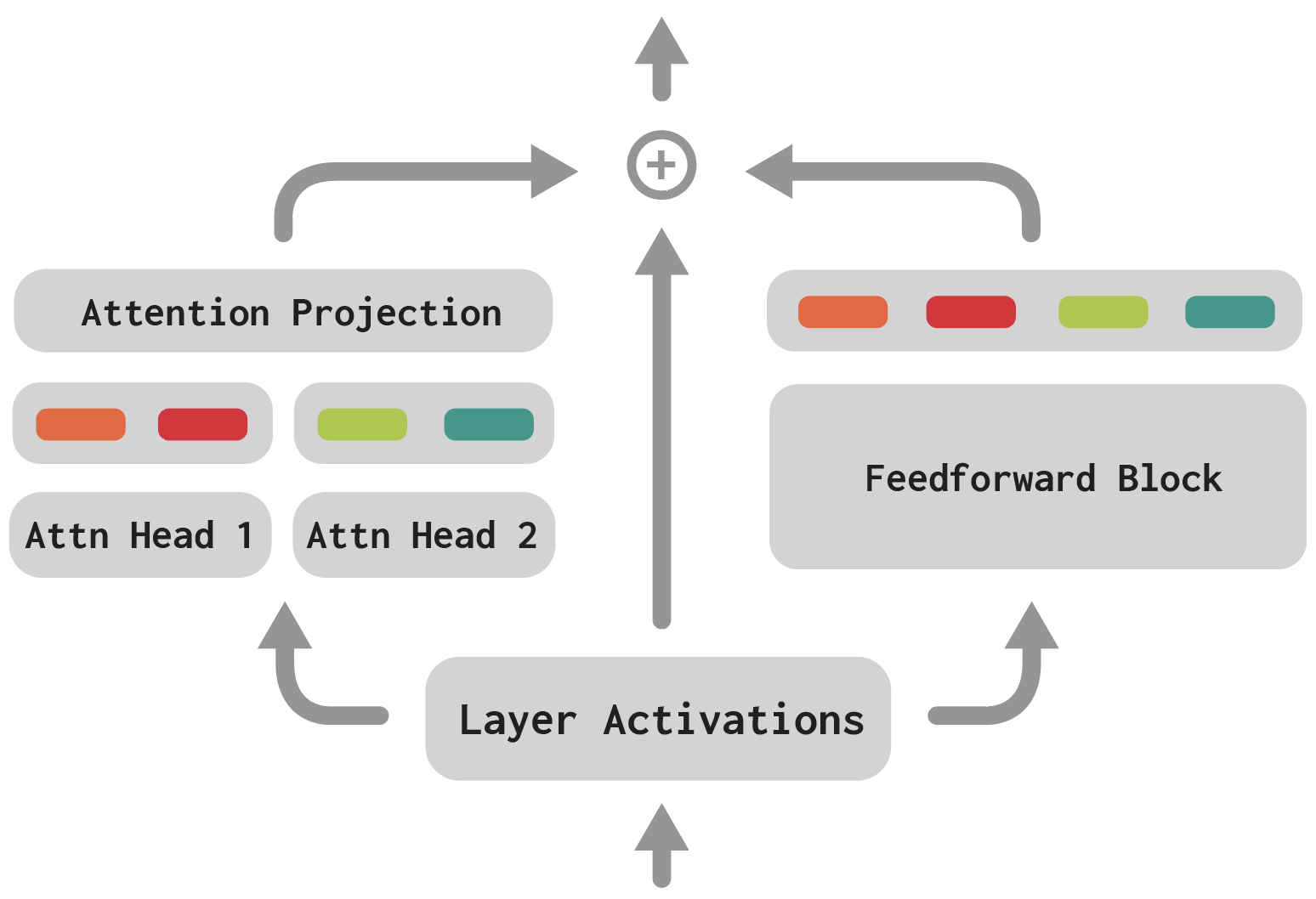

Codebook features is a method for training neural networks with a set of learned sparse and discrete hidden states, enabling interpretability and control of the resulting model. It works by inserting vector quantization bottlenecks, called codebooks, into each layer of a neural network.
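The sketch below illustrates, under simplifying assumptions, what inserting a codebook bottleneck after every layer does to a forward pass: each layer's output is snapped to a single codebook vector, and the sequence of chosen indices becomes a discrete trace of the computation. The toy linear layers, the fixed hand-written codebooks, the single active code per layer, and the `force` argument for overriding a code are all hypothetical choices made for illustration, not details taken from the paper.

```python
import math

def nearest_code(x, codebook):
    """Index of the codebook vector closest to x (Euclidean distance)."""
    return min(range(len(codebook)), key=lambda i: math.dist(x, codebook[i]))

def linear(weights):
    """Build a toy layer computing y = W x (no bias, no nonlinearity)."""
    def layer(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return layer

def run_with_codebooks(x, layers, codebooks, force=None):
    """Apply each layer, then snap its output to a codebook vector.

    Returns the final output and the list of code indices chosen at each
    layer, i.e. the discrete trace one would inspect for interpretability.
    `force` (hypothetical) maps layer index -> code index and overrides the
    chosen code at that layer, as a simple illustration of steering.
    """
    force = force or {}
    trace = []
    for i, (layer, codebook) in enumerate(zip(layers, codebooks)):
        h = layer(x)
        # Use the forced code for this layer if given, else the nearest code.
        idx = force[i] if i in force else nearest_code(h, codebook)
        trace.append(idx)
        x = codebook[idx]  # the next layer only sees the discrete code
    return x, trace

# Toy two-layer "network": identity, then a coordinate swap.
layers = [linear([[1, 0], [0, 1]]), linear([[0, 1], [1, 0]])]
codebooks = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]],
]
out, trace = run_with_codebooks([0.8, 0.1], layers, codebooks)
print(trace, out)  # [0, 1] [0.0, 1.0]
steered_out, steered_trace = run_with_codebooks([0.8, 0.1], layers, codebooks, force={0: 1})
print(steered_trace, steered_out)  # [1, 0] [1.0, 0.0]
```

Because each layer passes on only its chosen code, overriding a single index changes everything downstream, which is the intuition behind treating codes as control knobs.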

We demonstrate codebook features: a way to modify neural networks to make their internals more interpretable and steerable while causing only a small degradation in performance. Codebook features are produced by finetuning neural networks with vector quantization bottlenecks at each layer, producing a network whose hidden features are the sum of a small number of discrete vectors. By using vector quantization with a codebook of sparse, discrete vectors, the approach transforms the dense, continuous computations of neural networks into a more interpretable form.
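Here is a minimal sketch of a bottleneck whose output is the sum of a small number of discrete vectors. It assumes, as we understand the setup, that the k codebook vectors most similar to the hidden state by cosine similarity are selected and summed; the names `codebook_bottleneck` and `cosine`, the toy codebook, and the value of k are illustrative. Training is not shown: learning a codebook through a non-differentiable top-k selection needs a gradient workaround (such as a straight-through estimator), which this pure-Python sketch omits.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def codebook_bottleneck(h, codebook, k):
    """Replace hidden state h with the sum of its k most similar codebook vectors.

    Returns (active_indices, output). Only k of the len(codebook) codes are
    active, so the layer's state is sparse and discrete: it is fully
    described by the set of active indices.
    """
    # Rank all codes by cosine similarity to h, most similar first.
    ranked = sorted(range(len(codebook)), key=lambda i: cosine(h, codebook[i]), reverse=True)
    active = sorted(ranked[:k])
    # Output is the elementwise sum of the k active code vectors.
    output = [sum(codebook[i][d] for i in active) for d in range(len(h))]
    return active, output

# Hypothetical toy codebook of four 3-D code vectors.
codebook = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 0.0],
]
active, output = codebook_bottleneck([0.9, 0.8, 0.1], codebook, k=2)
print(active, output)  # [0, 3] [2.0, 1.0, 0.0]
```

Since `cosine` divides by the vector norms, a zero hidden state or zero code vector would raise `ZeroDivisionError`; a production implementation would guard against that case.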
