The Hidden Risks in Open Source AI Models

The growing openness of AI models fosters transparency, collaboration, and faster iteration across the AI community, but those benefits come with familiar risks. When the data pipeline remains undisclosed, hidden agendas or compromises can go unnoticed. Additionally, community-driven audits may be time-consuming and expensive, meaning well-intentioned ...

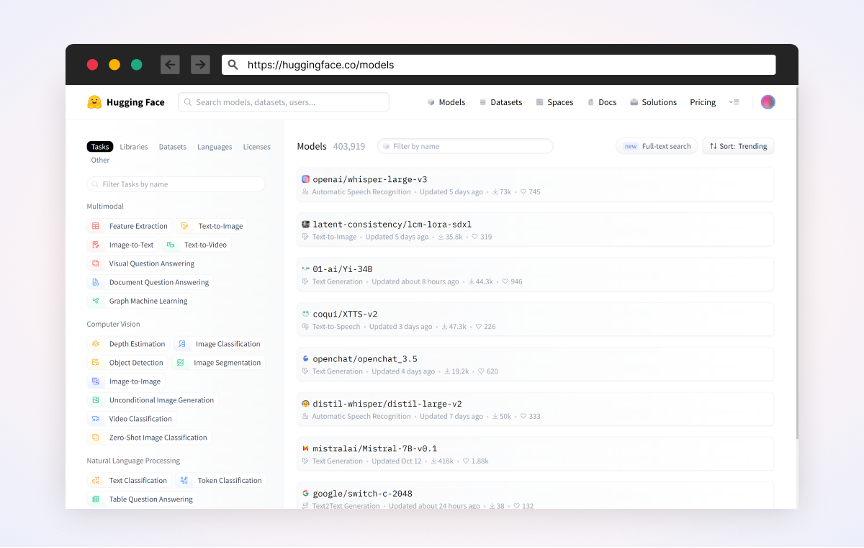

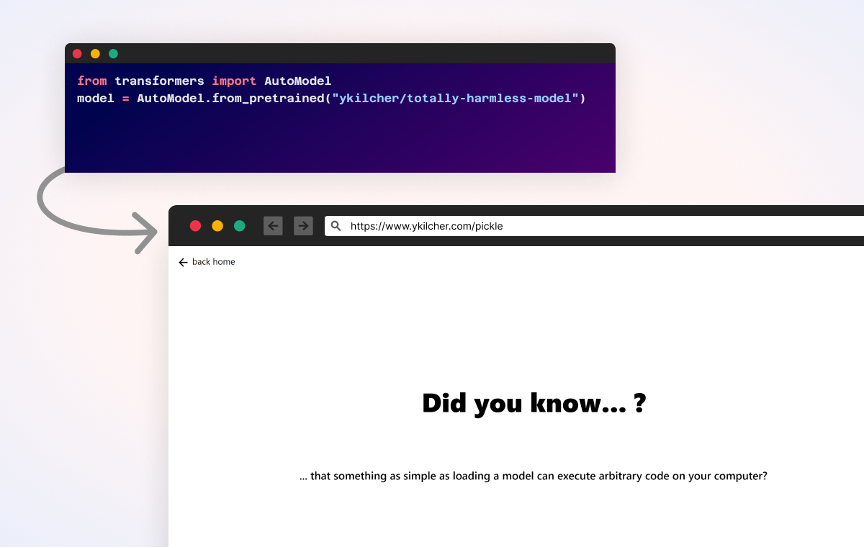

The Hidden Supply Chain Risks in Open Source AI Models

While the open source movement has clear advantages, it also introduces hidden risks that many teams overlook, from security loopholes and ethical liabilities to the supply chain. AI is powerful and exciting, but open source doesn’t automatically make it safe. As Linux admins and devs, it’s tempting to treat AI like every other tool in the arsenal, but in reality it’s a moving target, and one worth scrutinizing.

This research explores the full lifecycle risk posed by backdoored models in the open source AI ecosystem. We analyze real-world demonstrations, dissect the various threat vectors across model development and hosting platforms, and propose actionable strategies for detection and mitigation. For research purposes, I open sourced here the various scripts I’m using to demonstrate the risks of malicious AI. Please read the disclaimer in this GitHub repo if you’re planning on using them.

Open source AI models, when used by malicious actors, may pose serious threats to international peace, security, and human rights. Highly capable open source models could be repurposed by actors with malicious intent to perpetrate crime, cause harm, or even disrupt and undermine democratic processes.

However, open source platforms are not immune to data threats. With cybersecurity incidents on the rise, and over 343 million victims reported just last year, the focus is back on data security, especially with AI in the picture. Learn the hidden risks of open source data and rethink your AI training strategy. Take a responsible approach for safer, more accurate ML models.

"Our study therefore shows that data filtration can be a powerful tool in helping developers balance safety and innovation in open source AI," said study co-author Stephen Casper of the UK AI Security Institute. The team used a multi-stage filtering pipeline combining keyword blocklists and a machine learning classifier trained to detect high-risk content.
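To make the idea of a multi-stage filter concrete, here is a minimal sketch in Python. It is not the study's actual pipeline: the blocklist terms, the cue words, the threshold, and all function names are hypothetical, and `toy_risk_score` is only a stand-in for a trained classifier. It also assumes one particular arrangement, where the cheap blocklist pass decides which documents get escalated to the more expensive scoring stage.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical blocklist; a real pipeline would use a curated, domain-specific list.
BLOCKLIST = {"exploit payload", "credential dump", "malware loader"}


def blocklist_hits(text: str, blocklist: Iterable[str] = BLOCKLIST) -> List[str]:
    """Stage 1: return the blocklisted phrases found in the text (whole words only)."""
    lowered = text.lower()
    return sorted(
        term for term in blocklist
        if re.search(r"\b" + re.escape(term) + r"\b", lowered)
    )


def toy_risk_score(text: str) -> float:
    """Stand-in for a trained classifier, NOT a real model.

    Returns the fraction of words that belong to a small, made-up set of cue words.
    """
    cues = {"bypass", "undetected", "obfuscate", "evade"}
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    return sum(w in cues for w in words) / len(words)


@dataclass
class FilterResult:
    kept: List[str]
    removed: List[str]


def filter_corpus(
    docs: Iterable[str],
    score: Callable[[str], float] = toy_risk_score,
    threshold: float = 0.1,  # assumed cutoff, chosen only for this illustration
) -> FilterResult:
    """Run both stages over a corpus and split it into kept and removed documents."""
    kept: List[str] = []
    removed: List[str] = []
    for doc in docs:
        # Stage 1: documents with no blocklist hit are kept without scoring.
        if not blocklist_hits(doc):
            kept.append(doc)
            continue
        # Stage 2: only escalated documents are scored by the classifier.
        if score(doc) >= threshold:
            removed.append(doc)
        else:
            kept.append(doc)
    return FilterResult(kept=kept, removed=removed)
```

The two stages cover for each other's weaknesses: a blocklist alone would discard defensive or educational text that merely mentions a risky term, while running a classifier over every document would be costly at training-data scale. Passing a real model's scoring function as `score` would replace the toy heuristic without changing the rest of the sketch.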

