Table 1 From Decoding Symbolism In Language Models Semantic Scholar

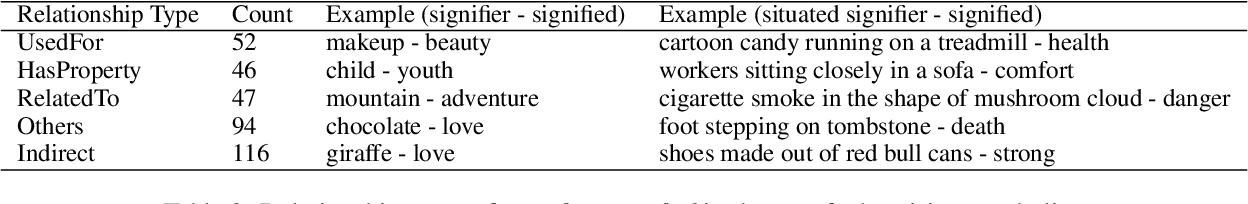

Table 1: Our conventional symbolism dataset groups the signifiers into 11 types (from "Decoding Symbolism in Language Models"). This work explores the feasibility of eliciting knowledge from language models (LMs) to decode symbolism, recognizing something (e.g., roses) as a stand-in for another (e.g., love).
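To make the elicitation idea above concrete, here is a minimal sketch of ranking candidate meanings for a signifier by scoring filled-in prompts. Everything in it is an assumption for illustration: the template wording, the function names `build_prompts` and `rank_candidates`, and especially `toy_score`, which is a hard-coded stand-in for a real language-model likelihood. The source does not describe the prompts or scoring it actually uses.

```python
from typing import Callable, Dict, List

# Hypothetical prompt template; the wording is an assumption, not taken from the paper.
TEMPLATE = "The symbol '{signifier}' often stands for {signified}."


def build_prompts(signifier: str, candidates: List[str]) -> Dict[str, str]:
    """Fill the template once per candidate signified."""
    return {
        c: TEMPLATE.format(signifier=signifier, signified=c) for c in candidates
    }


def rank_candidates(
    signifier: str,
    candidates: List[str],
    score: Callable[[str], float],
) -> List[str]:
    """Return candidates sorted by the score of their filled prompt, highest first."""
    prompts = build_prompts(signifier, candidates)
    return sorted(candidates, key=lambda c: score(prompts[c]), reverse=True)


def toy_score(sentence: str) -> float:
    """Stand-in for a language-model likelihood; NOT a real model.

    It looks up a hard-coded value based on the final word of the sentence.
    """
    lookup = {"love": 0.9, "war": 0.2, "winter": 0.1}
    for word, value in lookup.items():
        if sentence.endswith(f"{word}."):
            return value
    return 0.0


if __name__ == "__main__":
    print(rank_candidates("roses", ["war", "love", "winter"], toy_score))
```

In practice `toy_score` would be replaced by a function that asks an actual language model how plausible each sentence is; the ranking logic stays the same.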

We collected datasets of fictional narratives containing a figurative expression along with crowd-sourced plausible and implausible continuations relying on the correct interpretation of the... While scaling laws grant large language models powerful intuition, the art of the symbol provides the necessary compass for navigating complex frontiers an... This method empowers LLMs to deconstruct reasoning-independent semantic information into generic symbolic representations, thereby efficiently capturing more generalized reasoning knowledge.

From this perspective, the article offers counterarguments to two commonly cited reasons why LLMs cannot serve as plausible models of language in humans: their lack of symbolic structure and their lack of grounding. It is argued that modern LLMs are more than mere symbol manipulators and that LLMs in deep neural networks should be considered capable of a form of knowledge, though it may not qualify as justified true belief (JTB) in the traditional definition. Our work closely focuses on human natural language, resolves the semantics at the semiotic level, and explores the upper limit of LLM reasoning in dealing with problems under natural language.

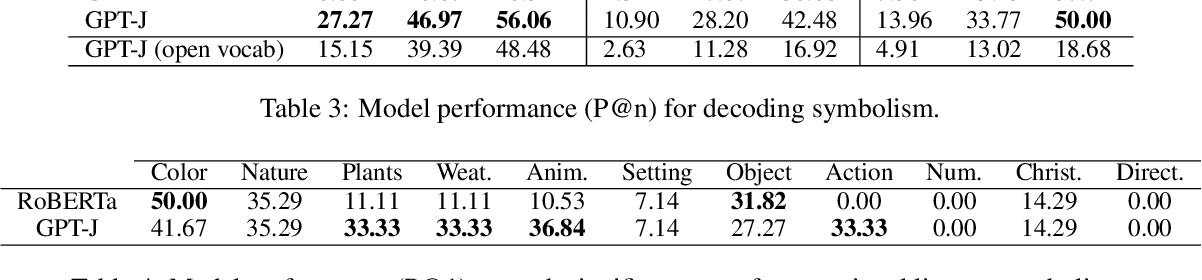

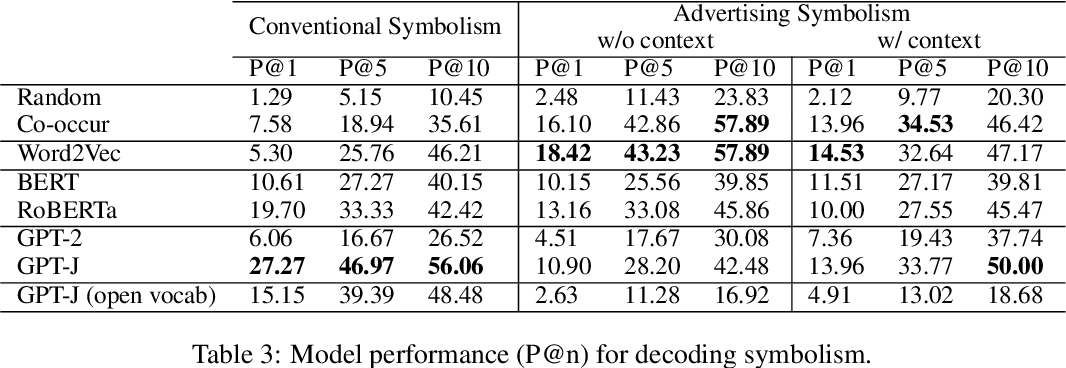

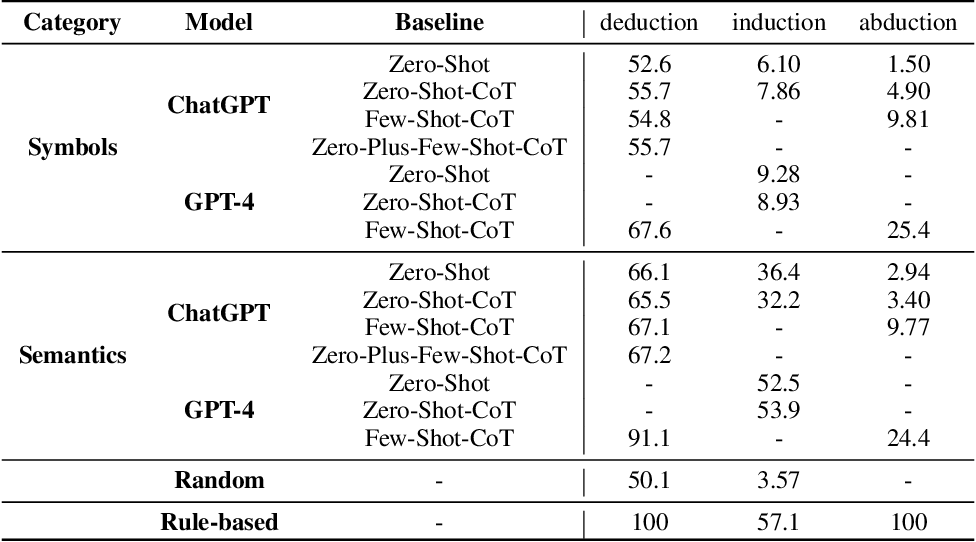

Table 3 From Decoding Symbolism In Language Models Semantic Scholar

Underline Decoding Symbolism In Language Models

Table 2 From Large Language Models Are In Context Semantic Reasoners
