Supercharge Training PyTorch Models on TPUs

This quick guide outlines how to set up PyTorch training on Google TPUs, especially for readers familiar with Kaggle or Colab environments and GPU-based training. Cloud TPUs are designed to scale cost-efficiently across a wide range of AI workloads, spanning training, fine-tuning, and inference. They accelerate workloads on leading AI frameworks, including PyTorch, JAX, and TensorFlow.
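
For readers coming from GPU-based training, the first change is usually device selection. The sketch below shows one possible way to pick a backend. The helper names `pick_backend` and `get_device` are hypothetical, and the code assumes that an importable `torch_xla` package means an XLA device (such as a TPU) is the intended target, which may not hold in every environment.

```python
import importlib.util


def pick_backend() -> str:
    """Return 'xla', 'cuda', or 'cpu' based on which packages are importable.

    Assumption: if torch_xla is installed, the XLA device is the target.
    """
    if importlib.util.find_spec("torch_xla") is not None:
        return "xla"
    if importlib.util.find_spec("torch") is not None:
        import torch  # imported lazily so the check works without torch

        if torch.cuda.is_available():
            return "cuda"
    return "cpu"


def get_device(backend: str):
    """Map a backend name to a device object. Requires torch to be installed."""
    import torch

    if backend == "xla":
        # xm.xla_device() is the long-standing PyTorch/XLA entry point;
        # newer releases may recommend a different call.
        import torch_xla.core.xla_model as xm

        return xm.xla_device()
    return torch.device(backend)


if __name__ == "__main__":
    print("selected backend:", pick_backend())
```

Once a device object is obtained this way, the model and each batch are moved to it with `.to(device)`, as in GPU code.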

Training Machine Learning Models With TPUs

This post provides a tutorial on using PyTorch/XLA to move a PyTorch pipeline to TPU; the code is optimized for multi-core TPUs. It aims to demystify training on XLA-based accelerators, providing clear patterns and best practices to help the PyTorch community get top performance and efficiency on Google Cloud TPUs. Topics include the basics of single-core and multi-core TPU training, scaling massive models on Cloud TPUs, XLA and advanced techniques for optimizing TPU-powered models, and frequently asked questions about TPU training. Advanced training features can be enabled through Trainer arguments: these are state-of-the-art techniques that are integrated into your training loop automatically, without changes to your code.
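
As an illustration of enabling features through Trainer arguments, here is a minimal sketch that assumes the Trainer in question is PyTorch Lightning's. The helper `build_tpu_trainer_kwargs` is hypothetical. The keyword names `accelerator`, `devices`, `accumulate_grad_batches`, and `gradient_clip_val` are Lightning `Trainer` arguments, but the values shown are illustrative and the valid core counts depend on the TPU type.

```python
from typing import Any, Dict, Optional


def build_tpu_trainer_kwargs(
    num_cores: int = 8,
    accumulate_grad_batches: int = 1,
    gradient_clip_val: Optional[float] = None,
) -> Dict[str, Any]:
    """Assemble keyword arguments for a Lightning Trainer targeting TPU cores."""
    if num_cores < 1:
        raise ValueError("num_cores must be at least 1")

    kwargs: Dict[str, Any] = {"accelerator": "tpu", "devices": num_cores}

    # Optional features are switched on purely through arguments;
    # the training loop itself is not modified.
    if accumulate_grad_batches > 1:
        kwargs["accumulate_grad_batches"] = accumulate_grad_batches
    if gradient_clip_val is not None:
        kwargs["gradient_clip_val"] = gradient_clip_val
    return kwargs


# Usage (requires the `lightning` package and a TPU runtime; not executed here):
#   import lightning as L
#   trainer = L.Trainer(**build_tpu_trainer_kwargs(num_cores=8,
#                                                  accumulate_grad_batches=4))
#   trainer.fit(model, train_dataloader)
```

Keeping the arguments in a plain dictionary makes it easy to switch between single-core and multi-core runs from a config file.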

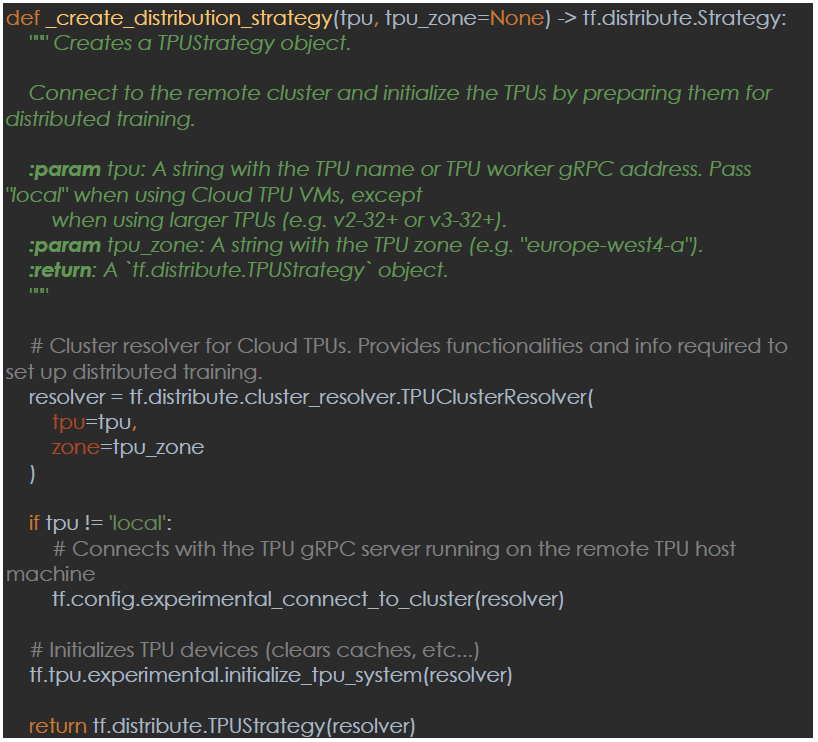

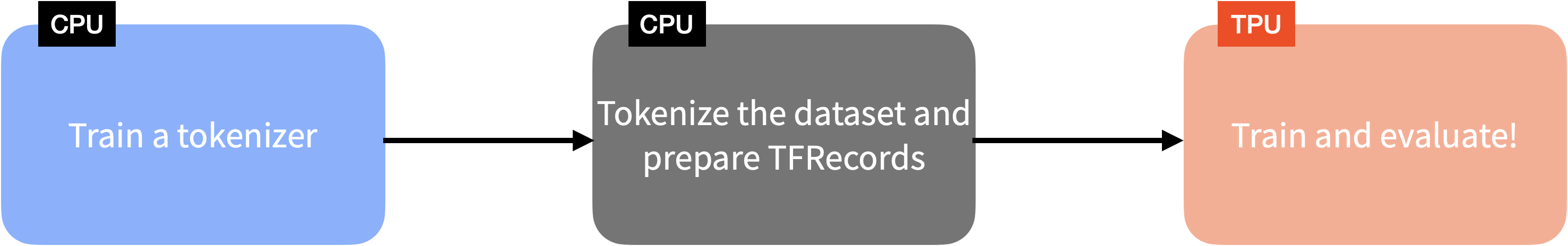

Training a Language Model From Scratch With 🤗 Transformers and TPUs

Learn how to set up and use TPUs effectively, address common challenges, and apply debugging techniques, so that you can train large-scale models on TPUs and get the most out of your PyTorch training workflows. With the right setup and optimization, training deep learning models on TPUs with PyTorch can be significantly faster, allowing for more efficient experimentation and development.

To use Cloud TPUs with PyTorch, you need the PyTorch/XLA library, which provides TPU support for PyTorch. There are a few ways to get started: you can use Colab or create an environment on GCP. Many of the ideas in the tutorial are adapted from earlier tutorials.
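
In multi-core TPU training, each core typically processes its own slice of the dataset. The sketch below shows the idea in plain Python: a strided split with wrap-around padding so that every core gets the same number of samples, similar in spirit to `torch.utils.data.DistributedSampler` with shuffling disabled. The function name `shard_indices` is hypothetical. In a real run the rank and world size would come from the PyTorch/XLA runtime instead of being passed by hand.

```python
import math
from typing import List


def shard_indices(num_samples: int, world_size: int, rank: int) -> List[int]:
    """Return the dataset indices assigned to one core (rank) out of world_size."""
    if world_size < 1:
        raise ValueError("world_size must be at least 1")
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    if num_samples < 0:
        raise ValueError("num_samples must be non-negative")

    # Round up so every core receives the same number of samples.
    per_core = math.ceil(num_samples / world_size)
    total = per_core * world_size

    # Pad by wrapping around to the start of the dataset.
    padded = [i % num_samples for i in range(total)]

    # Strided split: rank r takes positions r, r + world_size, ...
    return padded[rank::world_size]


if __name__ == "__main__":
    for r in range(4):
        print(r, shard_indices(10, world_size=4, rank=r))
```

Equal shard sizes matter because all cores must run the same number of steps; a core with fewer batches would leave the others waiting at the gradient synchronization point.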
