Statistical Learning 5.3: Cross Validation, the Wrong and Right Way

One way to do this is to apply cross validation and test a set of different tuning parameters to see which set gives the lowest error rate. You've guessed it: this is the wrong way, but it ...

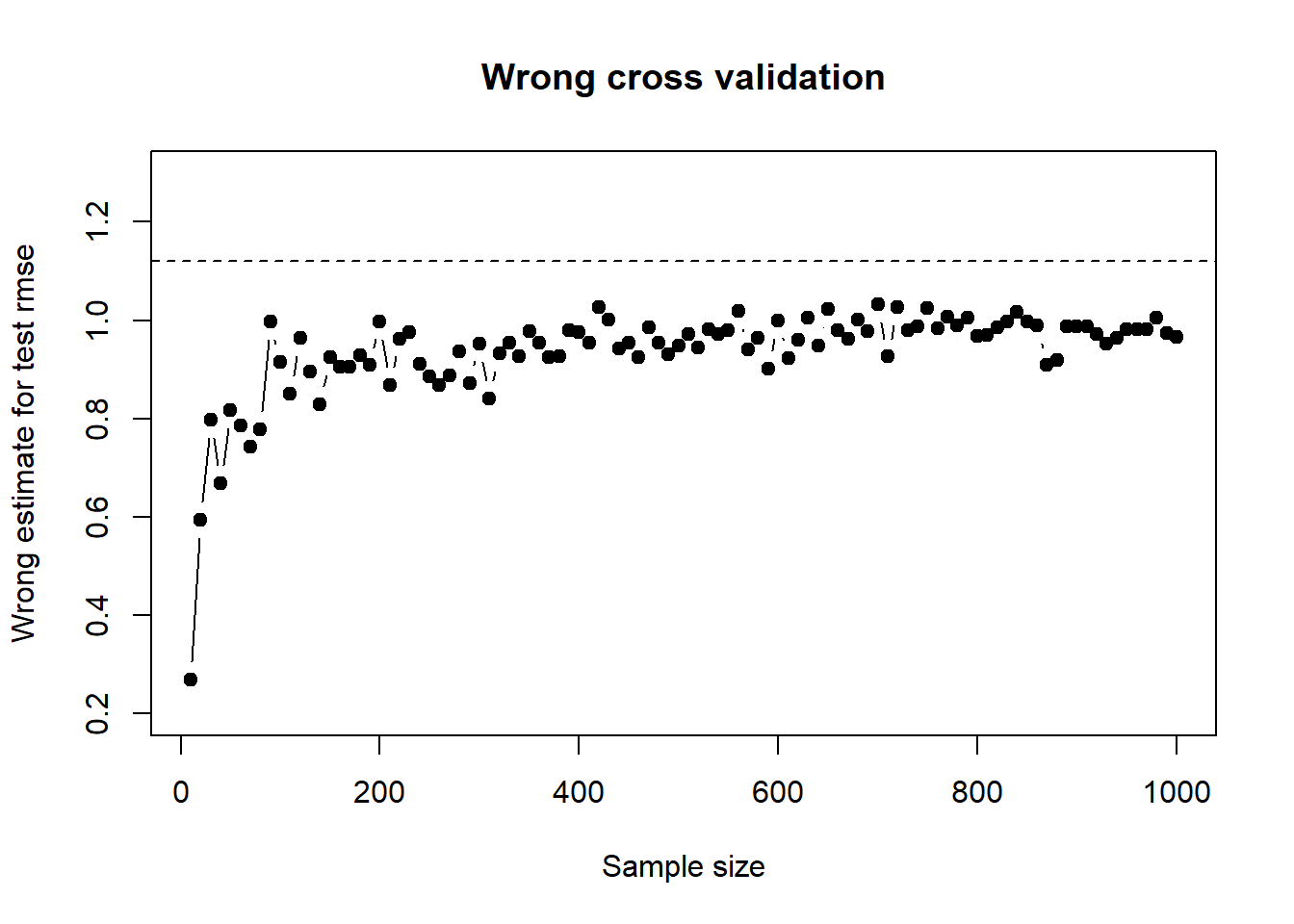

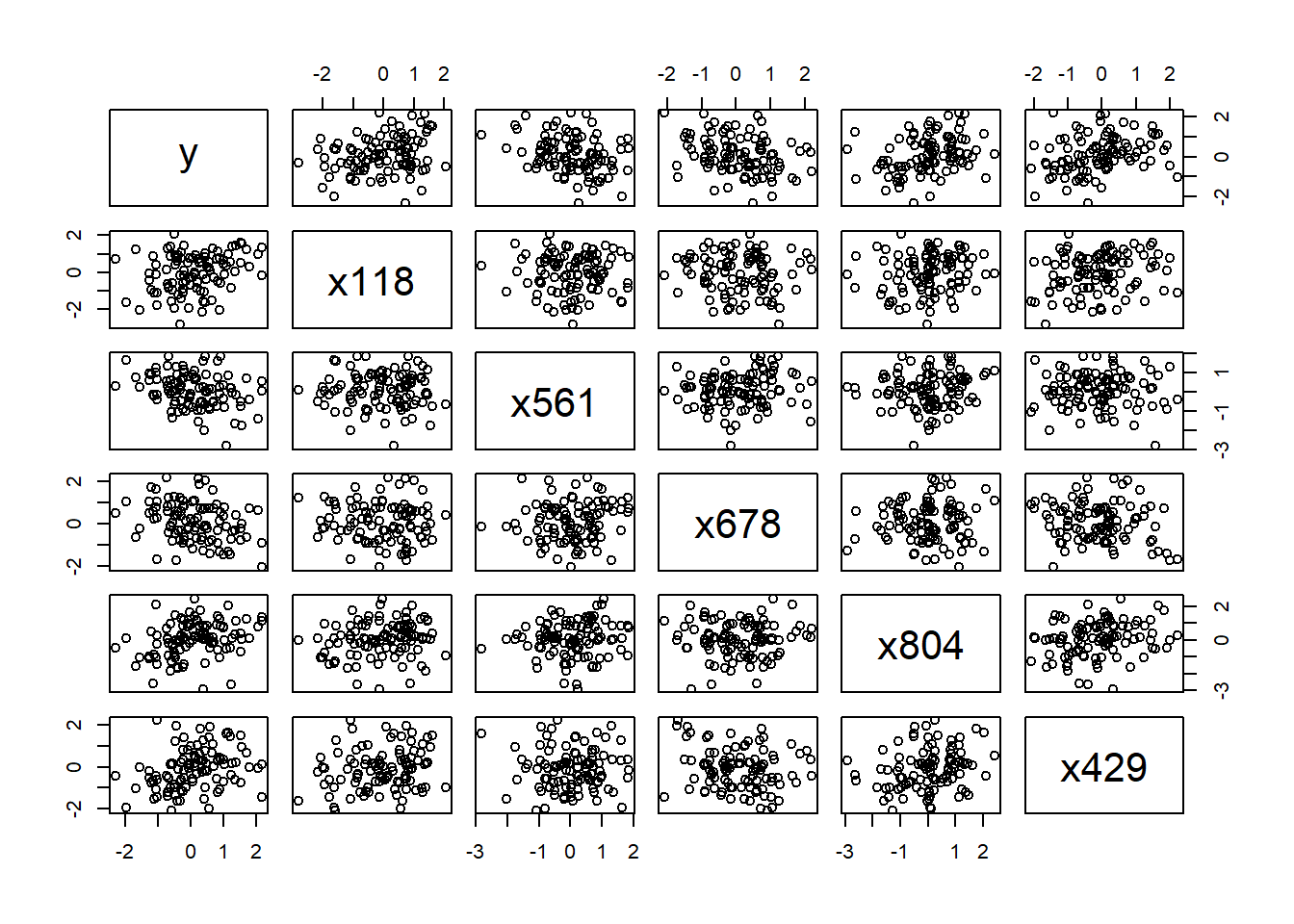

Statistical Learning Cross Validation Wrong And Right Way Data And Code

Estimate the test error of logistic regression with these 20 predictors via 10-fold cross validation. Each gene expression is standard normal and independent of all others. The response (cancer or not) is sampled from a coin flip, with no correlation to any of the "genes".

Cross validation is one of the most popular methods for estimating test error and selecting tuning parameters. However, one can easily be misled by it without realising it.

My advice (in accordance with ESL) is to perform cross validation at each point of the grid and choose the model based on these results. You do not give details on the size of the problem, but computationally it shouldn't be a problem.

Cross validation is a model validation technique for checking how well a classifier or other statistical analysis method performs. It is mainly used where our primary goal is prediction and we want to estimate how accurately the model will perform in practice.
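The coin-flip gene setup described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the sample size (50), the number of genes (5000), screening by absolute correlation, and the small ridge-penalised gradient-descent logistic fit are all choices made for this sketch, not details given in the text. The function names (`top_correlated`, `fit_logistic`, `cv_error`) are hypothetical.

```python
import numpy as np

def top_correlated(X, y, k):
    """Indices of the k columns of X with the largest |correlation| with y."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    corr = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))
    return np.argsort(-np.abs(corr))[:k]

def fit_logistic(X, y, steps=300, lr=0.1, l2=0.01):
    """Logistic regression by plain gradient descent with a small L2 penalty
    (an assumption of this sketch, used to keep the fit stable)."""
    Xb = np.column_stack([np.ones(len(X)), X])
    w = np.zeros(Xb.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * (Xb.T @ (p - y) / len(y) + l2 * w)
    return w

def predict(w, X):
    return (w[0] + X @ w[1:] > 0).astype(int)

def cv_error(X, y, k_features, n_folds=10, screen_inside=True, seed=0):
    """K-fold misclassification rate; screen_inside=False is the wrong way."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    # Wrong way: screen once on ALL rows, so held-out labels leak into selection.
    fixed = None if screen_inside else top_correlated(X, y, k_features)
    errors = 0
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(y)), test_idx)
        if screen_inside:
            # Right way: redo the screening using the training rows only.
            cols = top_correlated(X[train_idx], y[train_idx], k_features)
        else:
            cols = fixed
        w = fit_logistic(X[train_idx][:, cols], y[train_idx])
        errors += np.sum(predict(w, X[test_idx][:, cols]) != y[test_idx])
    return errors / len(y)

# Pure-noise data: standard normal "genes", coin-flip response (sizes assumed).
rng = np.random.default_rng(1)
n_samples, n_genes = 50, 5000
X = rng.standard_normal((n_samples, n_genes))
y = rng.integers(0, 2, size=n_samples)

wrong_err = cv_error(X, y, 20, screen_inside=False)
right_err = cv_error(X, y, 20, screen_inside=True)
print(f"wrong way CV error: {wrong_err:.2f}")
print(f"right way CV error: {right_err:.2f}")
```

Because the response is a coin flip, no classifier can truly beat a 50% error rate. The wrong-way estimate should still come out well below that, since the 20 predictors were picked with knowledge of the held-out labels, while the right-way estimate should hover around chance.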

Textbook: James, Gareth, Daniela Witten, Trevor Hastie and Robert Tibshirani, An Introduction to Statistical Learning. Vol. 112, New York: Springer, 2013.

Cross validation (CV) is a particular way of defining a collection of train/test splits to estimate test performance. Flexibility knobs (as well as other settings) can be chosen by optimizing the CV-estimated test performance. Model selection criteria and Bayesian methods.

Suppose that we perform forward stepwise regression and use cross validation to choose the best model size. Using the full data set to choose the sequence of models is the wrong way to do cross validation: we need to redo the model selection step within each training fold.
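The forward stepwise case can be sketched as follows: the selection path is recomputed inside every training fold, and the cross-validated error is recorded at each model size (each point of the grid). This is a minimal NumPy sketch, not a reference implementation. The synthetic data, the greedy RSS-based selection rule, and the helper names (`forward_stepwise`, `cv_mse_by_size`) are assumptions made for illustration.

```python
import numpy as np

def fit_ols(X, y):
    """Least-squares fit with an intercept; returns [intercept, coefs...]."""
    Xb = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(Xb, y, rcond=None)
    return beta

def predict_ols(beta, X):
    return beta[0] + X @ beta[1:]

def rss(X, y):
    return np.sum((y - predict_ols(fit_ols(X, y), X)) ** 2)

def forward_stepwise(X, y, max_size):
    """Greedy forward selection; returns column indices in order of entry."""
    chosen = []
    for _ in range(max_size):
        candidates = [j for j in range(X.shape[1]) if j not in chosen]
        best = min(candidates, key=lambda j: rss(X[:, chosen + [j]], y))
        chosen.append(best)
    return chosen

def cv_mse_by_size(X, y, max_size, n_folds=5, seed=0):
    """Cross-validated MSE for model sizes 1..max_size."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    sq_err = np.zeros(max_size)
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(y)), test_idx)
        # Right way: the model sequence is chosen from the training rows only.
        path = forward_stepwise(X[train_idx], y[train_idx], max_size)
        for size in range(1, max_size + 1):
            cols = path[:size]
            beta = fit_ols(X[train_idx][:, cols], y[train_idx])
            resid = y[test_idx] - predict_ols(beta, X[test_idx][:, cols])
            sq_err[size - 1] += np.sum(resid ** 2)
    return sq_err / len(y)

# Synthetic data (assumed): only columns 0 and 1 carry signal.
rng = np.random.default_rng(0)
X = rng.standard_normal((100, 10))
y = 2.0 * X[:, 0] - 1.5 * X[:, 1] + rng.standard_normal(100)

mse = cv_mse_by_size(X, y, max_size=5)
best_size = int(np.argmin(mse)) + 1
# Once the size is chosen, refit the selection on the full data at that size.
final_cols = forward_stepwise(X, y, best_size)
print("CV MSE by size:", np.round(mse, 2))
print("chosen size:", best_size, "columns:", final_cols)
```

The design point is that cross validation evaluates the whole procedure (selection plus fitting), so the held-out fold never influences which predictors enter the model. Only after the size is fixed is the selection rerun on all of the data.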

