State-of-the-Art AI with the NVIDIA TensorRT Hyperscale Inference Platform

It brings together the NVIDIA TensorRT optimizer and runtime engines for inference, the Video Codec SDK for transcode, pre-processing, and data curation APIs to tap into the power of Tesla GPUs.

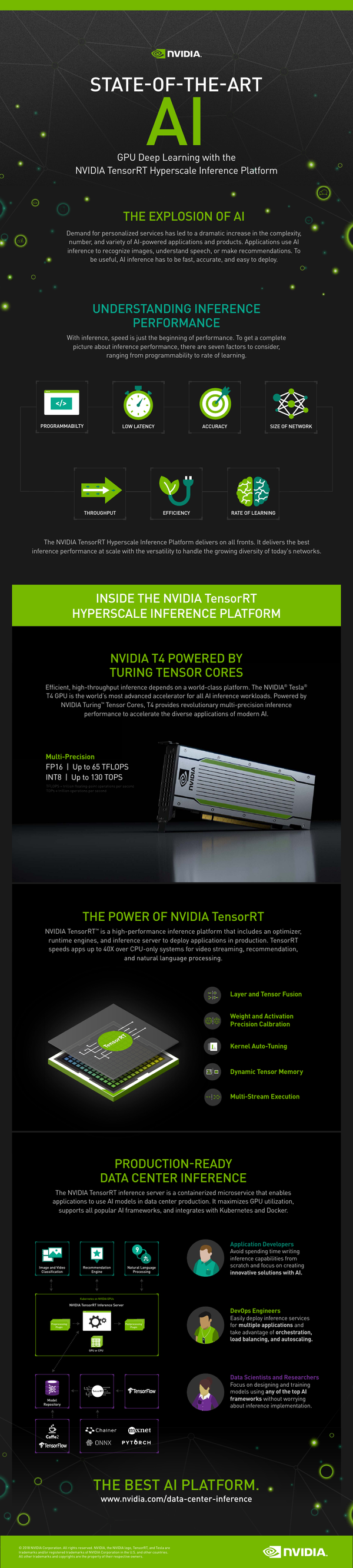

TensorRT SDK (NVIDIA Developer)

The combination of TensorRT 3 with NVIDIA GPUs delivers ultra-fast and efficient inferencing across all frameworks for AI-enabled services such as image and speech recognition, natural language processing, visual search, and personalized recommendations.

“We are excited to see how the NVIDIA TensorRT Inference Server, which brings a powerful solution for both GPU and CPU inference serving at scale, enables faster deployment of AI applications and improves infrastructure utilization.”

The NVIDIA TensorRT Inference Server is a containerized microservice that enables applications to use AI models in data center production. It maximizes GPU utilization, supports all popular AI frameworks, and integrates with Kubernetes and Docker.

TensorRT™ 8, the eighth generation of the company’s AI software, cuts inference time in half for language queries, enabling developers to build the world’s best-performing search engines, ad recommendations, and chatbots and offer them from the cloud to the edge.

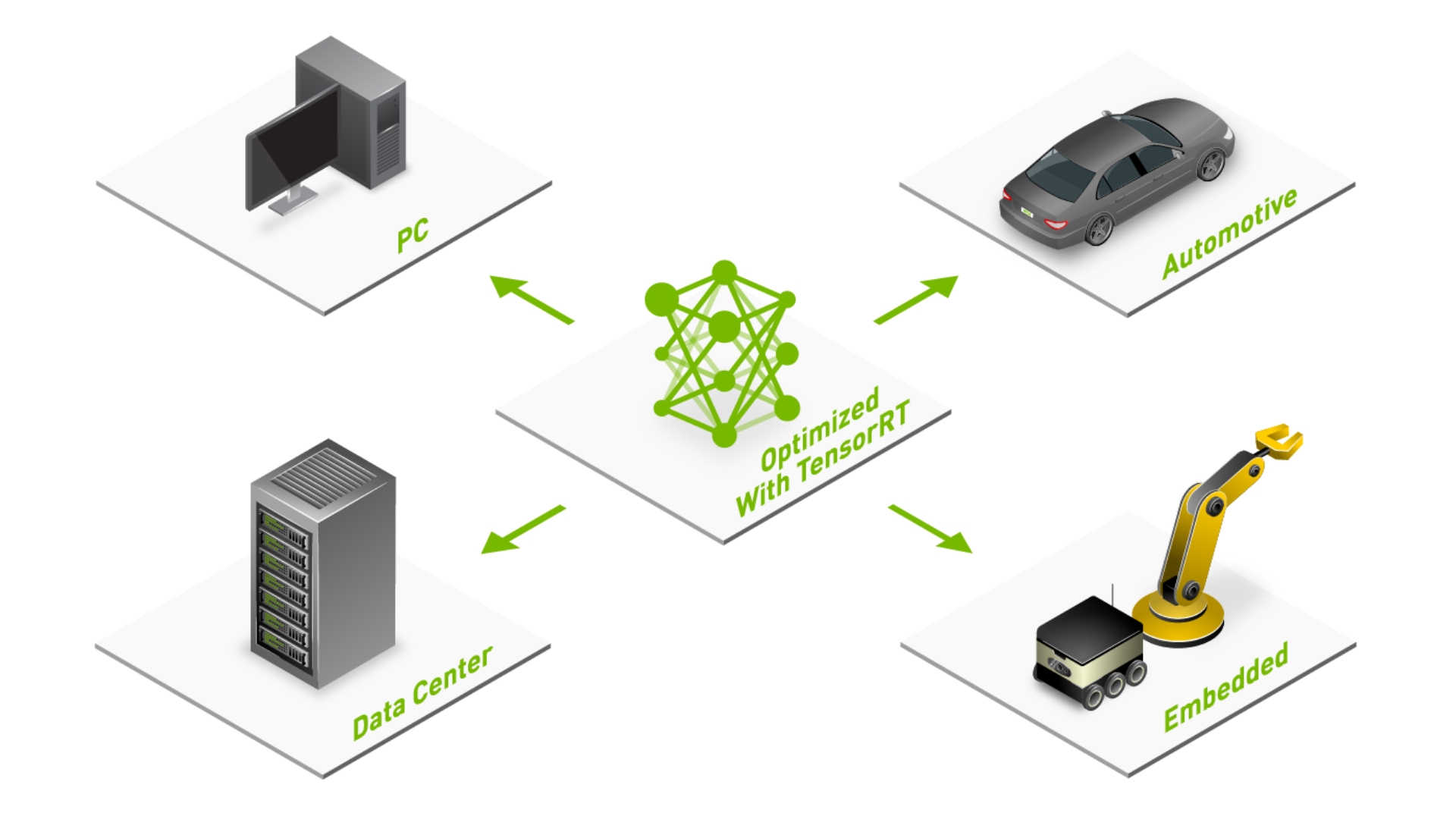

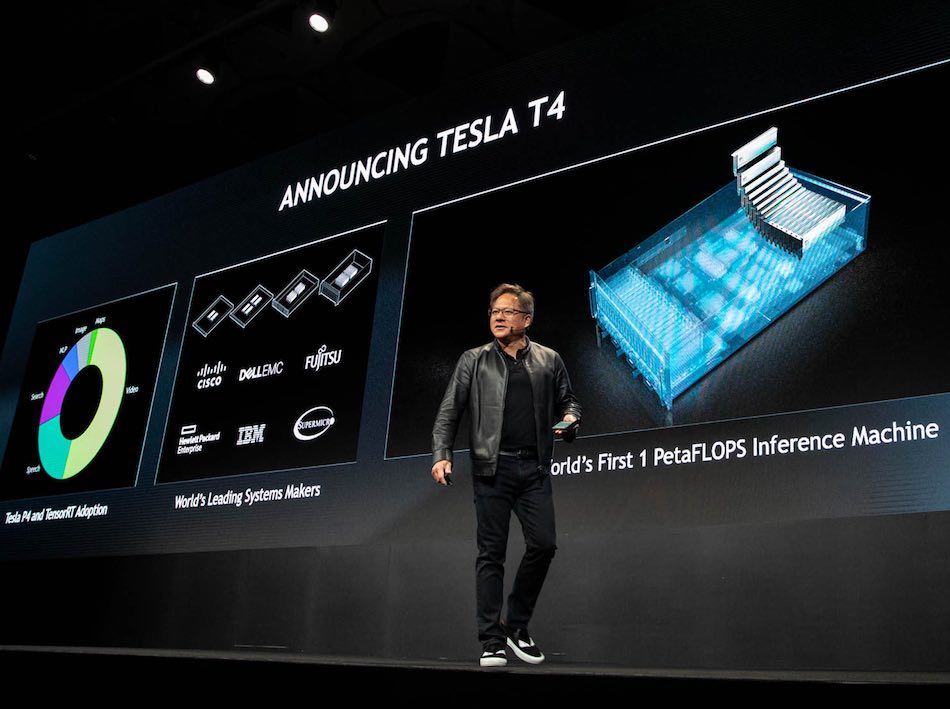

TensorRT 7: Accelerate End-to-End Conversational AI with New Compiler

With its small form factor and 70-watt (W) footprint design, the T4 is optimized for scale-out servers and is purpose-built to deliver state-of-the-art inference in real time.

NVIDIA® TensorRT™ is an ecosystem of tools for developers to achieve high-performance deep learning inference. TensorRT includes inference compilers, runtimes, and model optimizations that deliver low latency and high throughput for production applications.

The NVIDIA TensorRT Inference Server makes state-of-the-art AI-driven experiences possible in real time. It is a containerized inference microservice for data center production that maximizes GPU utilization and integrates into DevOps deployments through Docker and Kubernetes.

A new PyTorch-based architecture for TensorRT LLM, presented in an introduction with live Q&A, significantly enhances user experience and developer velocity, making it easier to build custom models, integrate new kernels, and extend runtime functionality while delivering state-of-the-art performance on NVIDIA GPUs.


Video: NVIDIA Rolls Out TensorRT Hyperscale Platform and New T4 GPU for
