Stable Diffusion InstructPix2Pix: Image Editing With Text Instructions

Stable Diffusion InstructPix2Pix is an instruction-based image editing model. The approach combines two large pretrained models, a large language model and a text-to-image model, to generate a dataset for training a diffusion model to follow written image editing instructions.
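The data-generation idea above can be sketched as a small Python pipeline. This is a minimal illustration under stated assumptions, not the authors' code: `EditExample`, `build_example`, and the two callable signatures are hypothetical names. The toy functions stand in for the language model (which proposes an instruction and an edited caption) and the text-to-image model (which renders a before/after image pair).

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class EditExample:
    """Hypothetical record for one training example:
    the editing model learns (input_image, instruction) -> edited_image."""
    input_image: object
    instruction: str
    edited_image: object


# Assumed signatures for the two pretrained models (illustrative only):
# the language model maps a caption to (instruction, edited caption);
# the text-to-image model renders an image pair from two captions.
LanguageModel = Callable[[str], Tuple[str, str]]
TextToImage = Callable[[str, str], Tuple[object, object]]


def build_example(caption: str, llm: LanguageModel, t2i: TextToImage) -> EditExample:
    """Turn one caption into one (image, instruction, edited image) example."""
    instruction, edited_caption = llm(caption)
    before, after = t2i(caption, edited_caption)
    return EditExample(input_image=before, instruction=instruction, edited_image=after)


if __name__ == "__main__":
    # Toy stand-ins: a canned "LLM" and a "renderer" returning placeholder strings.
    def fake_llm(caption: str) -> Tuple[str, str]:
        return "make it a dog", caption.replace("cat", "dog")

    def fake_t2i(a: str, b: str) -> Tuple[str, str]:
        return f"<image: {a}>", f"<image: {b}>"

    ex = build_example("a photo of a cat on a sofa", fake_llm, fake_t2i)
    print(ex.instruction)   # make it a dog
    print(ex.edited_image)  # <image: a photo of a dog on a sofa>
```

Passing the models in as callables keeps the sketch testable; in a real pipeline they would wrap an actual language model and an actual text-to-image model.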

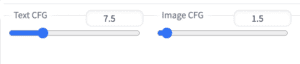

InstructPix2Pix is a new Stable Diffusion-based model that can edit images using text prompts alone. For example, it can change the background of a photo or a person's hairstyle. With this method, we can prompt Stable Diffusion with an input image and an “instruction”, such as “apply a cartoon filter to the natural image”.

Figure 1: We explore the instruction-tuning capabilities of Stable Diffusion.

The authors generated almost half a million edits using GPT-3.
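Because the model is conditioned on both the input image and the instruction, sampling can weight the two separately. The InstructPix2Pix paper uses classifier-free guidance with two scales, an image scale and a text scale; in the diffusers library these correspond to `image_guidance_scale` and `guidance_scale`. The sketch below combines three noise predictions element-wise in plain Python. `combine_guidance` is a hypothetical helper, and the lists of floats stand in for noise tensors.

```python
from typing import List


def combine_guidance(
    eps_uncond: List[float],  # prediction with neither image nor text conditioning
    eps_image: List[float],   # prediction conditioned on the input image only
    eps_full: List[float],    # prediction conditioned on image and instruction
    image_scale: float,       # higher values keep the output closer to the input image
    text_scale: float,        # higher values follow the instruction more strongly
) -> List[float]:
    """Two-scale classifier-free guidance, applied element-wise."""
    return [
        u + image_scale * (i - u) + text_scale * (f - i)
        for u, i, f in zip(eps_uncond, eps_image, eps_full)
    ]


if __name__ == "__main__":
    print(combine_guidance([0.0, 1.0], [1.0, 1.0], [2.0, 3.0], image_scale=1.5, text_scale=7.5))
    # → [9.0, 16.0]
```

Setting both scales to 1 reduces the result to `eps_full`, the fully conditioned prediction; raising either scale pushes the sample further in that conditioning's direction.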


You can edit and stylize images with InstructPix2Pix in Python using the Hugging Face diffusers and transformers libraries. This Stable Diffusion model transforms images based solely on textual instructions. Timothy Brooks, the model’s creator, describes it as “learning to follow image editing instructions”. The paired data used to train InstructPix2Pix consists of an unedited image and an edited image, with the image’s semantic content reimagined by GPT-3.
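A minimal sketch of that Python workflow, assuming `diffusers`, `transformers`, `torch`, and `Pillow` are installed and a CUDA GPU is available. It uses diffusers' `StableDiffusionInstructPix2PixPipeline` with the `timbrooks/instruct-pix2pix` checkpoint from the Hugging Face Hub; the `edit_image` helper, the file names, and the default parameter values are illustrative assumptions, not recommended settings. The heavy imports sit inside the function so the module can be imported on a machine without them.

```python
MODEL_ID = "timbrooks/instruct-pix2pix"  # InstructPix2Pix weights on the Hugging Face Hub


def edit_image(input_path: str, instruction: str, output_path: str,
               steps: int = 20, image_guidance_scale: float = 1.5) -> None:
    """Edit the image at input_path according to a text instruction (hypothetical helper)."""
    # Heavy dependencies are imported lazily so this module loads without them.
    import torch
    from PIL import Image, ImageOps
    from diffusers import StableDiffusionInstructPix2PixPipeline

    # Load the pipeline in half precision and move it to the GPU.
    pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(
        MODEL_ID, torch_dtype=torch.float16, safety_checker=None
    ).to("cuda")

    # Apply EXIF orientation and convert to 3-channel RGB before editing.
    image = ImageOps.exif_transpose(Image.open(input_path)).convert("RGB")

    # The instruction is passed as the prompt; the input image conditions the edit.
    result = pipe(
        instruction,
        image=image,
        num_inference_steps=steps,
        image_guidance_scale=image_guidance_scale,
    ).images[0]
    result.save(output_path)
```

For example, `edit_image("photo.jpg", "apply a cartoon filter", "photo_cartoon.png")` would write the edited image to `photo_cartoon.png`. Raising `image_guidance_scale` keeps the result closer to the original photo, while the pipeline's `guidance_scale` argument (not exposed by this helper) controls how strongly the instruction is followed.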
