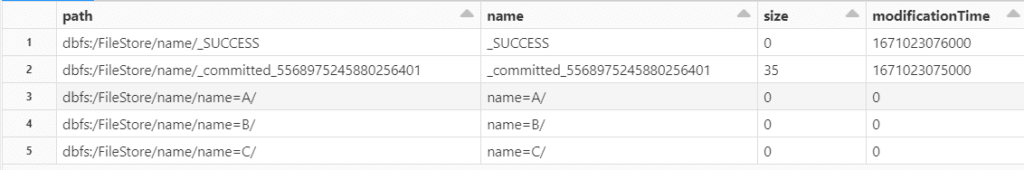

Spark Tutorial: Spark Actions collect() and count() with Examples

We'll define RDD actions, detail key operations (e.g., collect, count, saveAsTextFile, reduce) in Scala, cross-reference their PySpark equivalents, and provide a practical example, a sales data analysis using multiple actions, to illustrate their power and versatility.

In this guide, we will explore Spark RDD transformations and actions with real-world examples, helping both beginners and experienced developers understand how to leverage Spark for efficient data processing. We focus on key RDD actions in PySpark, such as collect(), count(), and reduce(), with step-by-step examples and output to help beginners understand PySpark better.

RDD actions are PySpark operations that return values to the driver program. As a rule of thumb, an RDD method that returns something other than an RDD is an action. In this tutorial, I will explain the most commonly used RDD actions with examples.

For example, when the reduce() action is applied to an RDD named rdd with the lambda function lambda x, y: x + y, the lambda takes two elements and returns their sum. reduce() repeatedly applies this function to pairs of elements in the RDD until a single result is obtained. Because Spark first reduces the elements within each partition and then combines the partial results, the function should be commutative and associative.

PySpark is the Python API for Apache Spark, designed for big data processing and analytics. It lets Python developers use Spark's distributed computing engine to process large datasets efficiently across clusters.

Unlike transformations, which are lazy and only build a directed acyclic graph (DAG) without executing it, actions such as collect, count, and reduce trigger Spark to compute the DAG and deliver results.

In our Apache Spark tutorial journey, we have learned how to create a Spark RDD using Java and how to apply Spark transformations. In this article, we explain Spark actions. Actions are operations that trigger computation on RDDs or DataFrames and either return a result to the driver program or write data to an external storage system. Examples of actions include collect(), take(), reduce(), and count().
