Solved: Backward Step (i.e. Back Propagation) and Gradient | Chegg

Q15. What are the steps of a backpropagation-based algorithm for training a neural network? (a) For each data point in the dataset: calculate the gradient using the chain rule, then calculate the weight update as the gradient multiplied by the learning factor. The second stage, weight adjustment using the calculated derivatives, can be tackled using a variety of optimization schemes, many of them substantially more powerful than simple gradient descent.
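The two stages in the answer above (gradient via the chain rule, then a weight update scaled by the learning factor) can be sketched for the simplest possible case. This is a minimal illustration, not the method from the quoted question: the one-weight model `y = w * x`, the squared-error loss, and the names `sgd_step` and `train` are all assumptions made for the example.

```python
def sgd_step(w, x, t, lr):
    """One per-sample update for the assumed model y = w * x
    with the assumed loss L = 0.5 * (y - t)**2."""
    y = w * x              # forward pass
    dL_dy = y - t          # derivative of the loss w.r.t. the output
    dy_dw = x              # derivative of the output w.r.t. the weight
    grad = dL_dy * dy_dw   # chain rule: dL/dw = dL/dy * dy/dw
    # Weight update: step against the gradient, scaled by the learning factor.
    return w - lr * grad


def train(data, w=0.0, lr=0.1, epochs=50):
    """Loop over every data point, applying one update per point."""
    for _ in range(epochs):
        for x, t in data:
            w = sgd_step(w, x, t, lr)
    return w


if __name__ == "__main__":
    # Hypothetical data generated by t = 2 * x, so w should approach 2.
    samples = [(1.0, 2.0), (2.0, 4.0)]
    print(train(samples))
```

Plain gradient descent is used here only because it is the shortest update rule to write down; the same gradient could be handed to a more powerful optimizer instead.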

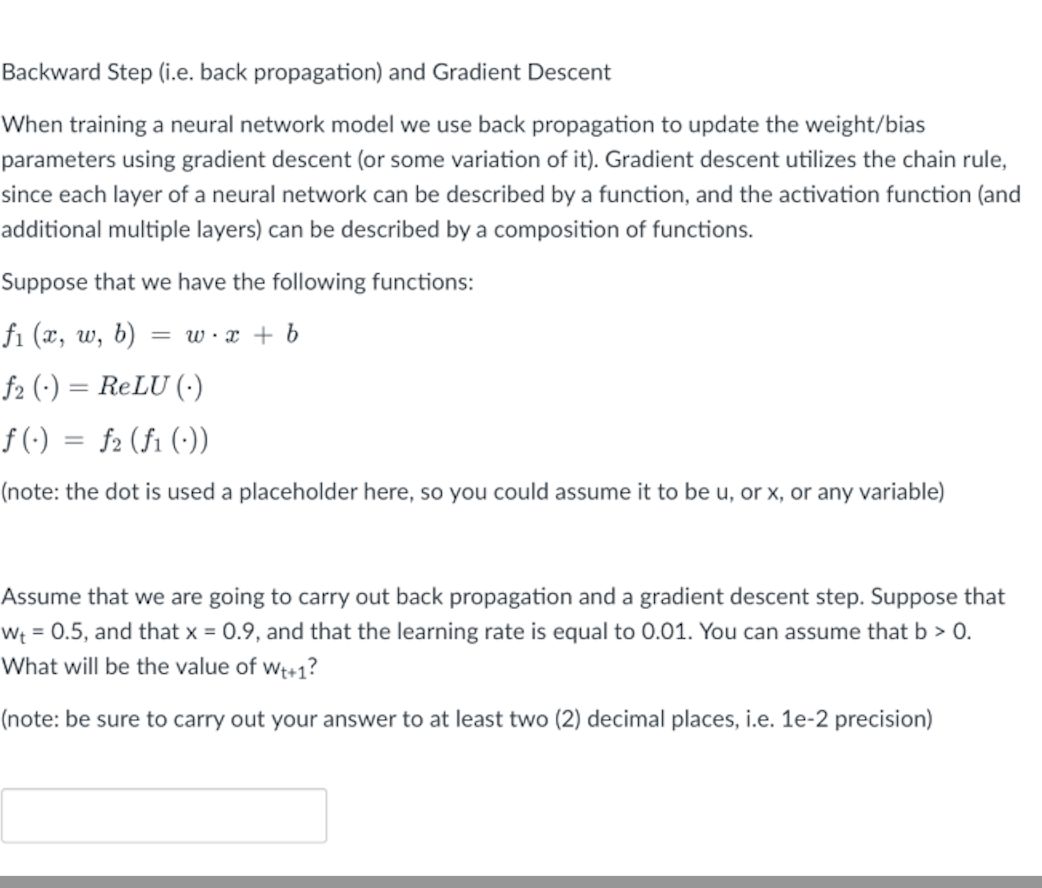

Backpropagation is a common method for training a neural network. There is no shortage of papers online that attempt to explain how backpropagation works, but few include an example with actual numbers. In the backward pass (backpropagation), the errors between the predicted and actual outputs are computed; the gradients are calculated using the derivative of the sigmoid function, and the weights and biases are updated accordingly. This document presents the backpropagation algorithm for neural networks along with supporting proofs. The notation sticks closely to that used by Russell & Norvig in their Artificial Intelligence textbook (3rd edition, chapter 18). Study with Quizlet and memorize flashcards containing terms like back propagation, why do we need backprop, staged computation and more.
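As a small example with actual numbers, the backward pass described above can be sketched for a single sigmoid neuron. The network size, the squared-error loss, the starting values, and the function name `backward_step` are illustrative assumptions, not taken from any of the quoted sources.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def backward_step(w, b, x, target, lr):
    """One forward and backward pass for a = sigmoid(w * x + b),
    assuming the loss L = 0.5 * (a - target)**2.
    Returns the updated weight, updated bias, and the loss before the update."""
    # Forward pass
    z = w * x + b
    a = sigmoid(z)
    # Backward pass: error between predicted and actual output
    error = a - target                # dL/da
    delta = error * a * (1.0 - a)     # dL/dz, using sigmoid'(z) = a * (1 - a)
    grad_w = delta * x                # dL/dw
    grad_b = delta                    # dL/db
    # Update weight and bias against their gradients
    return w - lr * grad_w, b - lr * grad_b, 0.5 * error ** 2


if __name__ == "__main__":
    # With w = 0, b = 0, x = 1, target = 1: a = 0.5, error = -0.5,
    # delta = -0.5 * 0.25 = -0.125, so both parameters move to 0.125.
    print(backward_step(0.0, 0.0, 1.0, 1.0, lr=1.0))
```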

Parameter initialisation: the approximate solution found by gradient descent (and its quality) depends on the parameter initialisation θ0, especially for deep neural networks. Commonly, θ0 is randomly sampled. Learners explore the chain rule, implement activation functions and their derivatives, and see how the forward and backward passes work together to enable learning through gradient descent. Complete the forward and back propagation for the following computation graph in figure 2, i.e., compute f and the gradient of f with respect to all the input parameters. Show the steps and the upstream and downstream gradients in the computational graph. In backward propagation, use the sigmoid local gradient for the 4 operations performed by this function.
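Figure 2 is not reproduced here, so the following sketch assumes a hypothetical graph, f = sigmoid(w0*x0 + w1*x1 + w2), with made-up input values. It computes f and the gradient of f with respect to every input, and checks that the single sigmoid local gradient f * (1 - f) matches the result of chaining the four elementary operations inside the sigmoid (negate, exp, add 1, reciprocal). The function names are illustrative.

```python
import math


def forward_backward(w0, x0, w1, x1, w2):
    """Forward and backward pass for the assumed graph
    f = sigmoid(w0*x0 + w1*x1 + w2)."""
    # Forward pass
    s = w0 * x0 + w1 * x1 + w2
    f = 1.0 / (1.0 + math.exp(-s))
    # Backward pass: the upstream gradient at the output is df/df = 1.
    df_ds = 1.0 * f * (1.0 - f)   # sigmoid local gradient times upstream
    # The add gate passes df_ds through; each multiply gate swaps its inputs.
    grads = {
        "w0": x0 * df_ds, "x0": w0 * df_ds,
        "w1": x1 * df_ds, "x1": w1 * df_ds,
        "w2": df_ds,
    }
    return f, grads


def sigmoid_grad_four_ops(s):
    """Same df/ds, obtained by backpropagating through the four
    elementary operations that make up the sigmoid."""
    a = -s            # op 1: negate
    b = math.exp(a)   # op 2: exp
    c = 1.0 + b       # op 3: add 1
    # op 4: reciprocal, f = 1 / c
    df_dc = -1.0 / c ** 2
    df_db = df_dc * 1.0   # local gradient of (1 + b) is 1
    df_da = df_db * b     # local gradient of exp(a) is exp(a)
    return df_da * -1.0   # local gradient of (-s) is -1


if __name__ == "__main__":
    f, grads = forward_backward(2.0, -1.0, -3.0, -2.0, -3.0)
    print(f, grads)
```

With these assumed inputs the pre-activation is s = 1, so both routes should agree on df/ds at that point.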

