Solved 1 1 10 Point Let The Data Be Xi Yi 1 Where Xi Chegg

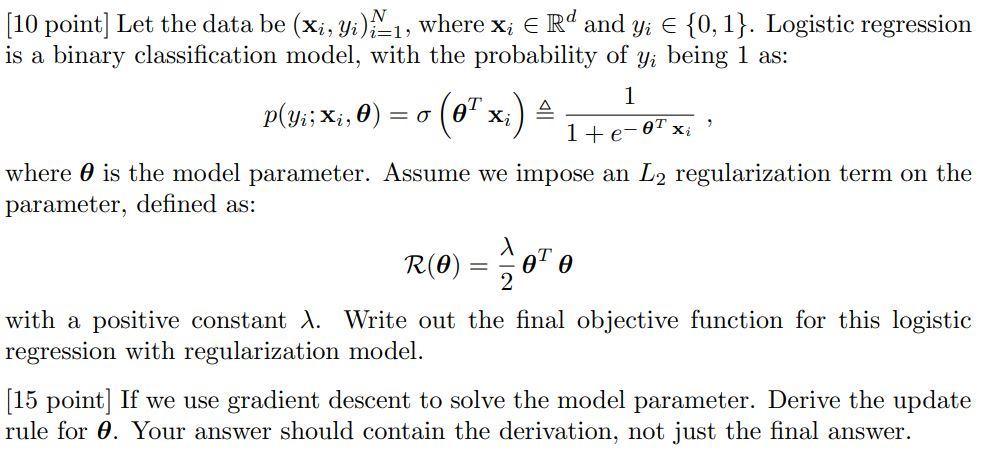

Question: 1) [10 points] Let the data be $\{(x_i, y_i)\}_{i=1}^{n}$, where $x_i \in \mathbb{R}^d$ and $y_i \in \{0, 1\}$. Logistic regression is a binary classification model, with the probability of $y_i$ being 1 given by $p(y_i; x_i, \theta) = \sigma(\theta^\top x_i)$, where $\theta$ is the model parameter. University at Buffalo • CSE.
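As a rough illustration of the model in the question, the sketch below evaluates $\sigma(\theta^\top x)$ for a single input. The function names `sigmoid` and `predict_proba` are made up for this example, and plain Python lists stand in for the vectors $\theta$ and $x$; it is not taken from any posted solution.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, exp(z) is small, so this form cannot overflow.
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(theta, x):
    """Return P(y = 1 | x; theta) = sigmoid(theta . x).

    theta and x are equal-length sequences of floats (hypothetical inputs).
    """
    if len(theta) != len(x):
        raise ValueError("theta and x must have the same length")
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)
```

With $\theta = 0$ the model is indifferent between the two classes, so `predict_proba([0.0, 0.0], [1.0, 2.0])` evaluates to 0.5.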

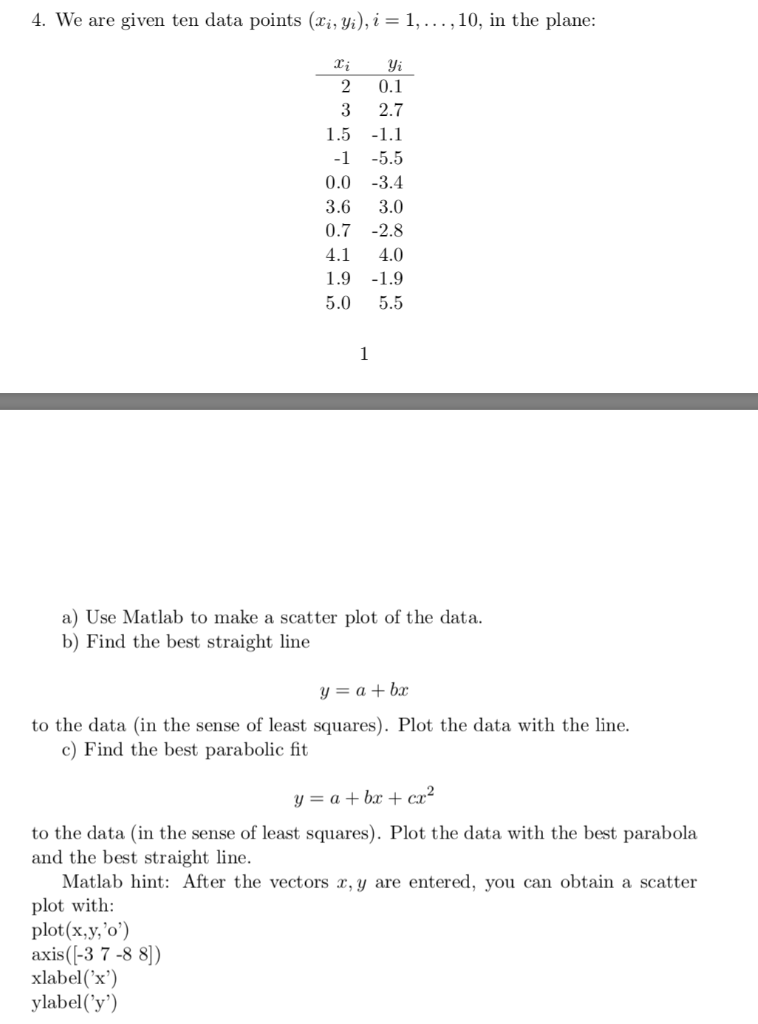

Solved 4 We Are Given Ten Data Points Xi Yi I 1 Chegg

1. Are these estimators unbiased? If yes, prove it. If no, find the bias. 2. Derive the variance of $\hat{\beta}_0$ and the covariance between $\hat{\beta}_1$ and $\hat{\beta}_0$.

Step 1: Set up the Lagrangian function. In this case, the Lagrangian $L$ is given by $L(w, \lambda) = \tfrac{1}{2}\|w\|^2 + \lambda^\top (y - Xw)$, where $\lambda$ is the Lagrange multiplier.

Our task is to confirm that the solution for $\beta$, given a predictor $x \in \mathbb{R}^p$, is $\hat{\beta} = (X^\top W X)^{-1} X^\top W y$. Step 2: Explanation. First, we need to set up a weighted least squares problem, which is derived from the loss function of local linear regression.

To find the least squares line that best fits the given data set $\{(x_i, y_i)\}_{i=1}^{n}$, we need to minimize the sum of the squared differences between the observed values $y_i$ and the values predicted by the line.
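For the least squares line, minimizing the sum of squared residuals has a well-known closed form: slope $= S_{xy} / S_{xx}$ and intercept $= \bar{y} - \text{slope} \cdot \bar{x}$. The following is a minimal sketch of that formula; the function name `fit_line` and the sample data are assumptions made for illustration only.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) minimizing sum((y - intercept - slope * x) ** 2)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Centered sums of squares and cross-products.
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical data lying exactly on y = 1 + 2x.
intercept, slope = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
```

Because the sample points lie exactly on a line, the fit recovers an intercept of 1 and a slope of 2 up to floating-point rounding.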

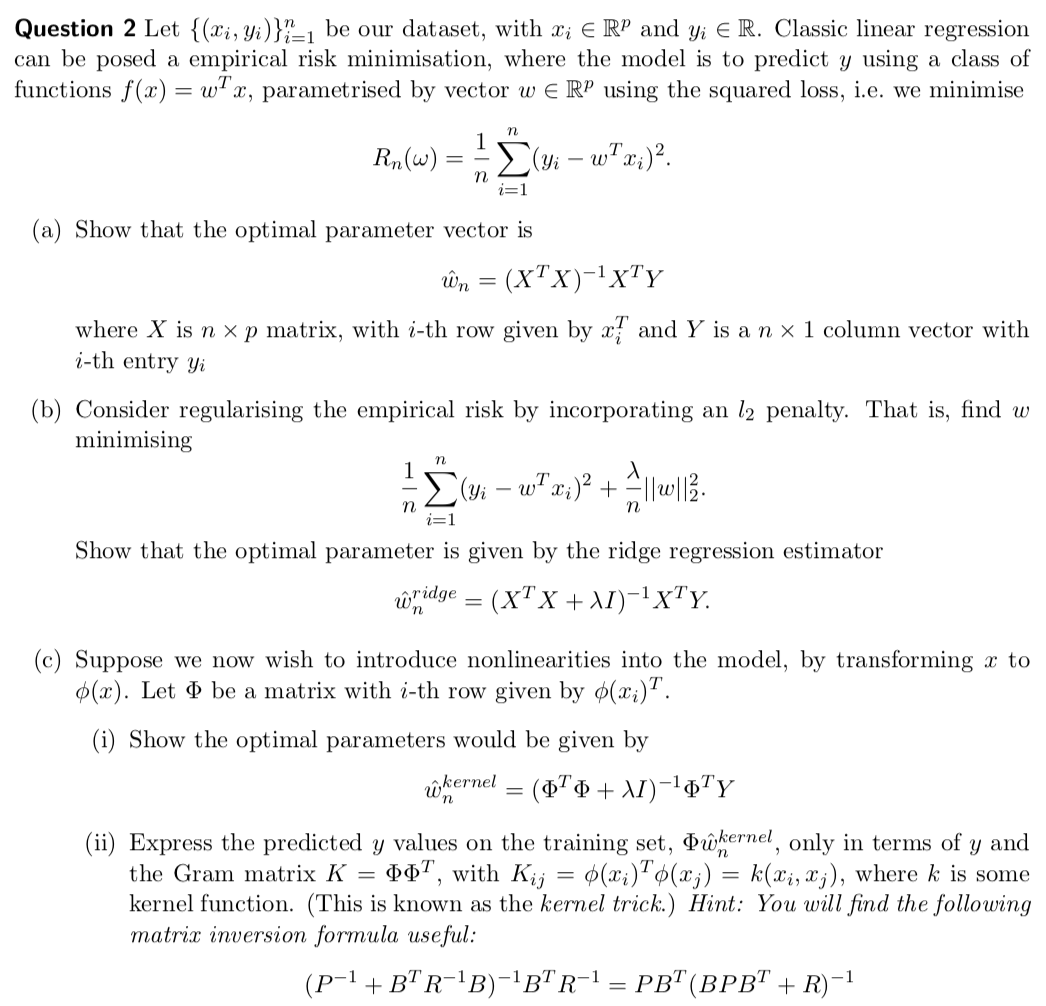

Question 2 Let Xi Yi 1 Be Our Dataset With Xi Chegg

The least squares fit can be computed by finding the pseudo-inverse of $A$ and multiplying it with $b$. Denote the pseudo-inverse of $A$ as $A^{+}$. The least squares solution $x$ is then $x = A^{+} b$, where $b$ is the vector of $y$ values of the data points.

Consider a data set $D = \{(x_i, y_i)\}$, $i = 1, \dots, n$, where $x_i \in \mathbb{R}$ and $y_i \in \{0, 1\}$, and $n$ is the number of samples.

The solution that minimizes the error function can be found by setting the derivative of the error function with respect to the weight vector to zero and solving for the weight vector. This results in the following expression: $w = \left(\sum_i r_i x_i x_i^\top\right)^{-1} \sum_i r_i x_i y_i$.

Question: 1) [10 points] Let the data be $\{(x_i, y_i)\}_{i=1}^{n}$, where $x_i \in \mathbb{R}^d$ and $y_i \in \{0, 1\}$. Logistic regression is a binary classification model, with the probability of $y_i$ being 1 given by $p(y_i; x_i, \theta) = \sigma(\theta^\top x_i) = \frac{1}{1 + e^{-\theta^\top x_i}}$, where $\theta$ is the model parameter.
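The weighted solution for $w$ quoted earlier in this section can be checked with a short derivation. The error function itself is not shown in the text, so the sketch below assumes the usual weighted sum of squares with non-negative weights $r_i$; under that assumption, setting the gradient to zero gives the stated expression.

```latex
% Assumed error function (not given in the source): weighted squared error.
E(w) = \frac{1}{2} \sum_{i=1}^{n} r_i \left( y_i - w^\top x_i \right)^2

% Set the gradient with respect to w to zero.
\nabla_w E(w) = -\sum_{i=1}^{n} r_i \left( y_i - w^\top x_i \right) x_i = 0

% Rearrange into the normal equations.
\left( \sum_{i=1}^{n} r_i \, x_i x_i^\top \right) w = \sum_{i=1}^{n} r_i \, x_i y_i

% Solve, assuming the weighted matrix is invertible.
w = \left( \sum_{i=1}^{n} r_i \, x_i x_i^\top \right)^{-1} \sum_{i=1}^{n} r_i \, x_i y_i
```

Stacking the $x_i^\top$ as rows of a matrix $X$ and writing $R = \operatorname{diag}(r_1, \dots, r_n)$, this is $w = (X^\top R X)^{-1} X^\top R y$, which has the same form as the weighted least squares estimator $(X^\top W X)^{-1} X^\top W y$ with $W = R$.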
