SHAP Values: An Overview

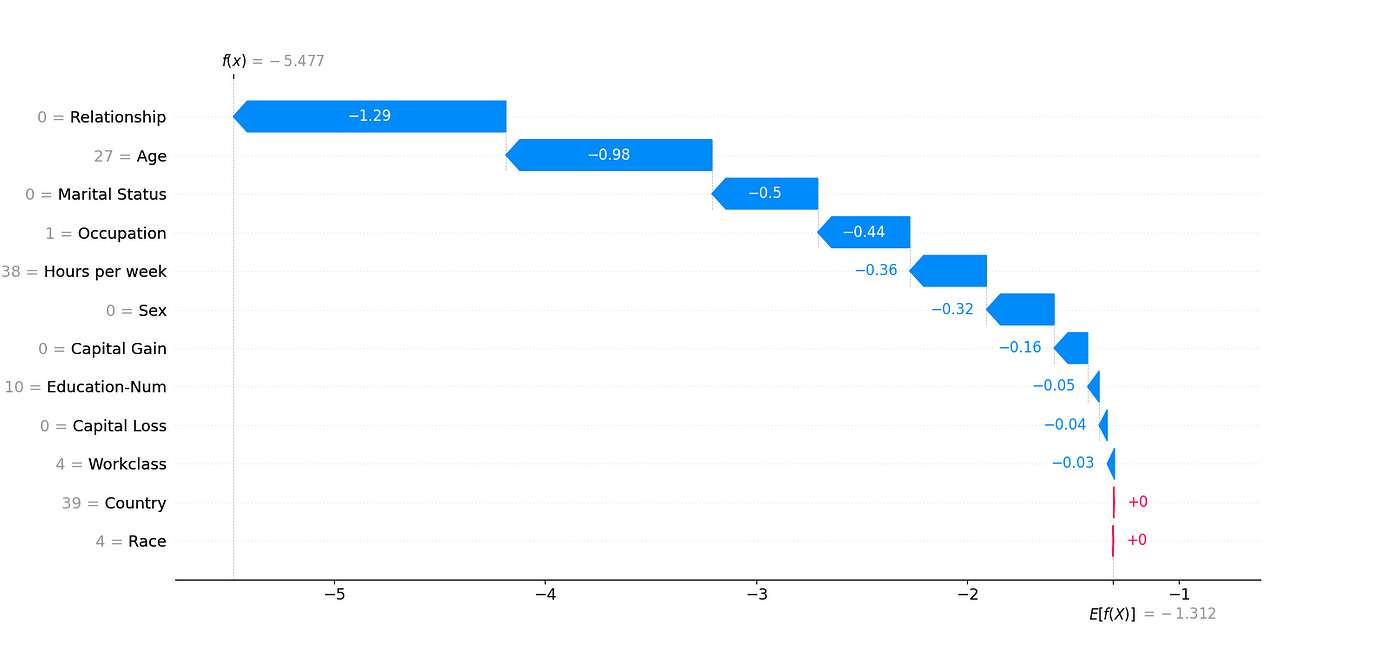

How to Use SHAP Values to Optimize and Debug ML Models

SHAP (SHapley Additive exPlanations) is a method based on cooperative game theory, used to increase the transparency and interpretability of machine learning models. It comes with a variety of visualization tools that help interpret model predictions: these plots highlight which features are important and also explain how they influence individual predictions or the model's overall output.
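To make the game-theory idea concrete, here is a minimal sketch that computes exact Shapley values for a single prediction by enumerating every coalition of features. It assumes that a feature "absent" from a coalition is replaced by a fixed baseline value. This is only one convention; explainers in the SHAP library commonly use a background dataset instead. `shapley_values` and `toy_model` are hypothetical names written for illustration, not part of the SHAP API.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction by enumerating every coalition.

    Assumption: features outside a coalition take their baseline value.
    Cost grows as 2**n, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Keep coalition features from x; use the baseline for all others.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight: |S|! * (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy model with an interaction between features 0 and 1.
def toy_model(z):
    return 2.0 * z[0] + 1.0 * z[1] + 3.0 * z[0] * z[1] - 0.5 * z[2]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)  # approximately [5.0, 5.0, -2.0]
# Additivity: baseline prediction + sum of contributions = actual prediction.
print(toy_model(baseline) + sum(phi), toy_model(x))
```

The last line checks the additivity property that gives SHAP its name: the baseline prediction plus the per-feature contributions equals the model's output. The interaction term `3.0 * z[0] * z[1]` is split evenly between features 0 and 1. Exhaustive enumeration needs on the order of 2^n model evaluations per feature, which is why practical explainers rely on approximations or model-specific algorithms.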

SHAP Values with Examples Applied to a Multi-Class Classification Problem

SHAP values show which features matter most to a model and how they affect the outcome. In this tutorial, we will learn about SHAP values and their role in interpreting machine learning models. SHAP is a game-theoretic approach to explaining the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions (see papers for details and citations).

Learn how explainable AI (XAI) interprets machine learning decisions using SHAP, LIME, and more, boosting transparency, trust, and model debugging in ML systems. Explore SHAP, LIME, and feature importance in ML: their methods, strengths, and best practices for transparent, fair, and trusted AI models.
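For a multi-class problem, SHAP attributes each class's output separately, so every prediction gets one vector of contributions per class. The sketch below assumes a hypothetical three-class model that produces one raw score per class (`CLASS_SCORES`) and computes exact Shapley values with the equivalent permutation definition: a feature's value is its marginal contribution averaged over all orders in which features can be added. Explaining raw scores rather than probabilities is an assumption made to keep the arithmetic simple; `shapley_by_permutations` and `explain_per_class` are illustrative names, not library functions.

```python
from itertools import permutations


def shapley_by_permutations(f, x, baseline):
    """Exact Shapley values: average each feature's marginal contribution
    over every order in which features can be added (O(n!) evaluations)."""
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        z = list(baseline)  # start with every feature at its baseline value
        prev = f(z)
        for i in order:
            z[i] = x[i]  # reveal feature i
            cur = f(z)
            phi[i] += cur - prev  # marginal contribution of i in this order
            prev = cur
    return [p / len(orders) for p in phi]


# Hypothetical three-class model: one raw score (logit) function per class.
CLASS_SCORES = [
    lambda z: 1.0 * z[0] - 0.5 * z[1],
    lambda z: 0.8 * z[1] + 0.4 * z[0] * z[2],  # interaction of features 0 and 2
    lambda z: -1.0 * z[2] + 0.2 * z[0],
]


def explain_per_class(class_scores, x, baseline):
    """Return one list of Shapley values per class output."""
    return [shapley_by_permutations(f, x, baseline) for f in class_scores]


x = [2.0, 1.0, 0.5]
baseline = [0.0, 0.0, 0.0]
for c, phi in enumerate(explain_per_class(CLASS_SCORES, x, baseline)):
    # Each class's contributions sum to its score change from the baseline.
    print(f"class {c}: {[round(p, 3) for p in phi]}")
```

Reading the attributions class by class answers questions such as "which features pushed this sample toward class 1?", which a single overall importance ranking cannot. In class 1, the interaction term is shared equally between features 0 and 2.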

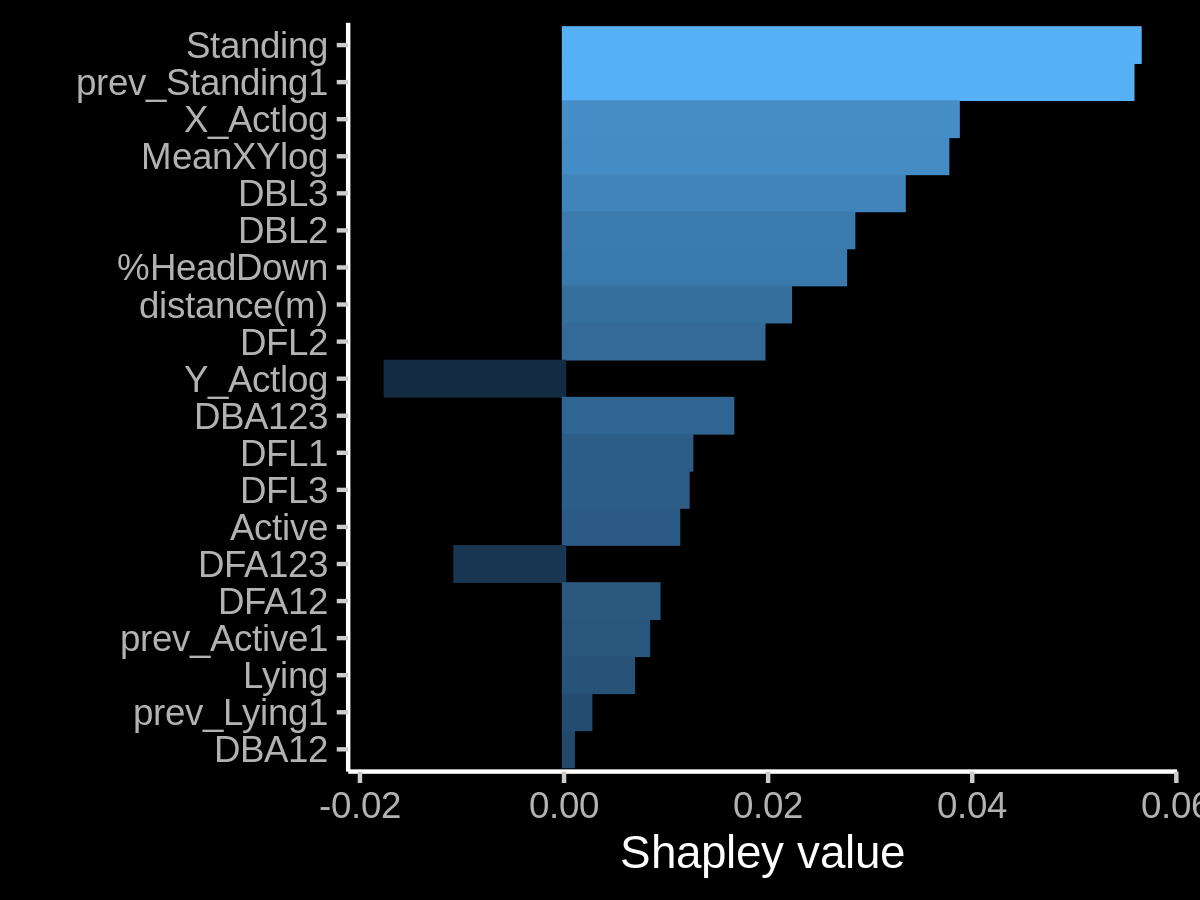

SHAP Values for Different Feature Values: Positive SHAP Values

Understanding global SHAP analysis can also inform actuarial work. A SHAP-guided adversarial training framework is, by design, model-agnostic and readily transferable to other asset classes and predictive tasks where interpretability is essential, enabling deployment in real-world investment and risk management workflows without imposing explicit economic priors. Zoomhoot, an information hub for industry professionals, business owners, and investors, covers SHAP integration for explainable AI in applications. The approach follows a 2025 paper that showed how to apply LIME/SHAP to time series without leakage, then used ARIMA and gradient-boosted trees to quantify what actually drives the forecast (yearly seasonality dominated in their case).
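Global SHAP analysis aggregates local explanations over many samples. A common summary, used for example by SHAP's bar plots, ranks features by their mean absolute SHAP value, while the sign of each individual value shows direction: a positive SHAP value pushes that prediction above the baseline and a negative one pulls it below. The sketch assumes a linear model with independent features, where the SHAP value reduces to the closed form `w_i * (x_i - mean_i)`; the weights, data, feature names, and helper functions are hypothetical.

```python
from statistics import mean


def linear_shap(weights, X, background):
    """SHAP values for a linear model f(x) = w . x + b, assuming independent
    features: phi_i = w_i * (x_i - E[x_i]), with E[.] over the background."""
    means = [mean(col) for col in zip(*background)]
    return [[w * (xi - m) for w, xi, m in zip(weights, row, means)] for row in X]


def global_summary(shap_matrix, names):
    """Rank features by mean |SHAP| and report how often each one pushed
    the prediction upward (positive SHAP value)."""
    rows = []
    for j, name in enumerate(names):
        col = [row[j] for row in shap_matrix]
        importance = mean([abs(v) for v in col])
        positive_share = sum(1 for v in col if v > 0) / len(col)
        rows.append((name, importance, positive_share))
    return sorted(rows, key=lambda r: r[1], reverse=True)


# Hypothetical model weights and data.
weights = [0.5, -2.0, 0.0]
names = ["feature_a", "feature_b", "feature_c"]
X = [[1.0, 0.0, 3.0], [3.0, 1.0, 1.0], [2.0, 2.0, 2.0]]

shap_matrix = linear_shap(weights, X, background=X)
for name, importance, positive_share in global_summary(shap_matrix, names):
    print(f"{name}: mean|SHAP|={importance:.3f}, positive in {positive_share:.0%} of samples")
```

A feature can rank high globally while its individual SHAP values have mixed signs, so the mean |SHAP| ranking and the sign pattern are worth reading together. Here `feature_c` has zero weight and therefore zero attribution for every sample.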
