Seamlessly Transfer Kafka Data With the New Azure Cosmos DB Connector

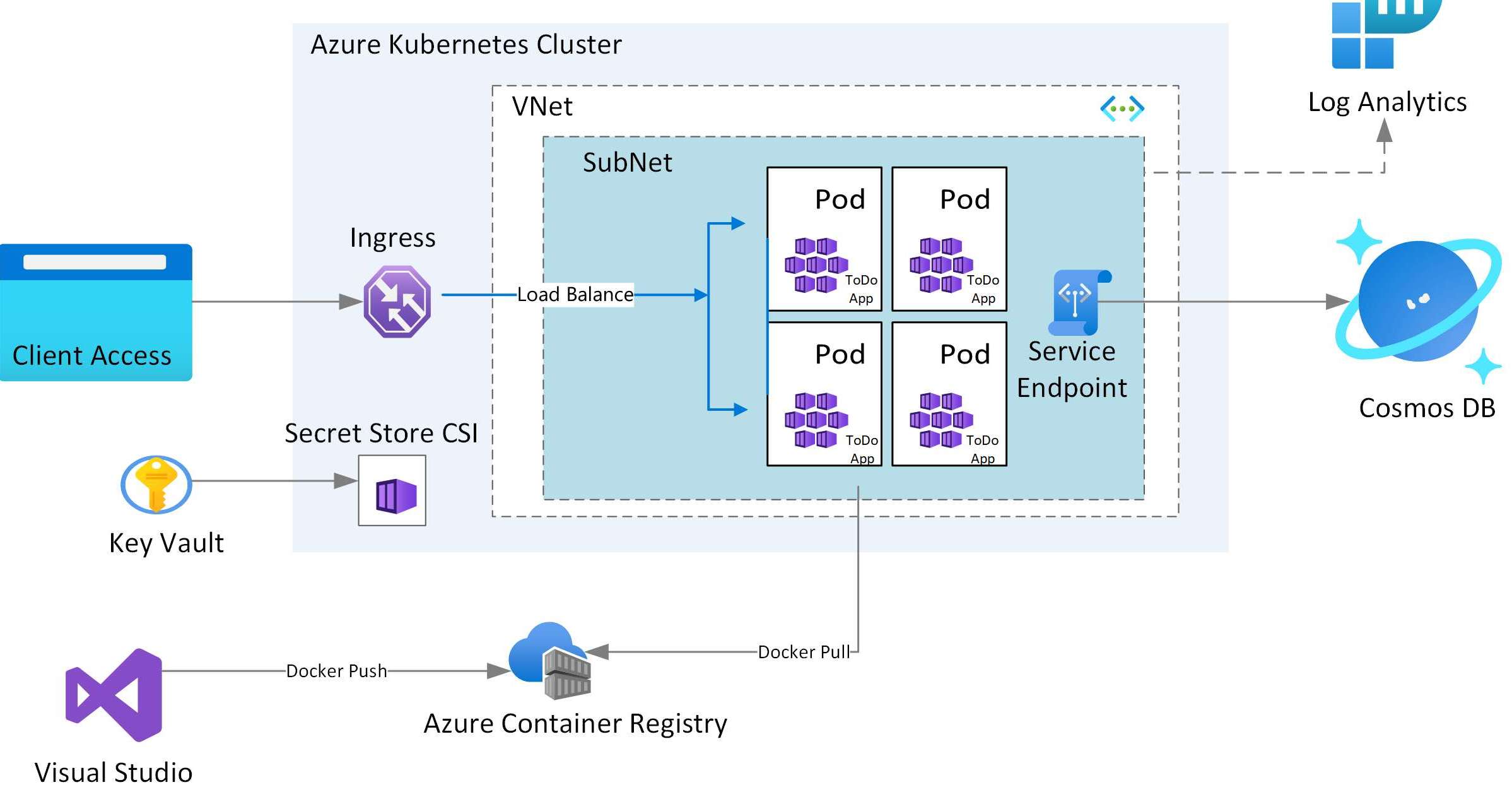

The Azure Cosmos DB sink connector exports data from Apache Kafka topics to an Azure Cosmos DB database. The connector polls data from Kafka and writes it to containers in the database according to its topic subscription. This managed connector lets you integrate Azure Cosmos DB with your Kafka-powered event streaming architecture without provisioning, scaling, or managing the connector infrastructure yourself.
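
The topic-to-container routing described above can be pictured with a small, self-contained Python sketch. It assumes the comma-separated `topic#container` mapping format used by the self-managed connector's `connect.cosmos.containers.topicmap` setting (check the documentation for the connector version you run). The function names are hypothetical, and the sketch only models the routing step; it is not the connector's implementation.

```python
from collections import defaultdict


def parse_topic_map(topic_map):
    """Parse an assumed 'topic#container,topic2#container2' string into a dict."""
    mapping = {}
    for entry in topic_map.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas
        topic, sep, container = entry.partition("#")
        if not sep or not topic.strip() or not container.strip():
            raise ValueError(f"malformed topic map entry: {entry!r}")
        mapping[topic.strip()] = container.strip()
    return mapping


def route_records(records, mapping):
    """Group (topic, value) pairs into per-container batches."""
    batches = defaultdict(list)
    for topic, value in records:
        container = mapping.get(topic)
        if container is None:
            raise KeyError(f"no container mapped for topic {topic!r}")
        batches[container].append(value)
    return dict(batches)


mapping = parse_topic_map("hotels#kafka, orders#orders-container")
batches = route_records(
    [("hotels", {"id": "1"}), ("orders", {"id": "a"}), ("hotels", {"id": "2"})],
    mapping,
)
print(batches)  # {'kafka': [{'id': '1'}, {'id': '2'}], 'orders-container': [{'id': 'a'}]}
```

Failing loudly on an unmapped topic is a design choice made for the sketch; the real connector's behavior for unmapped topics should be taken from its documentation.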

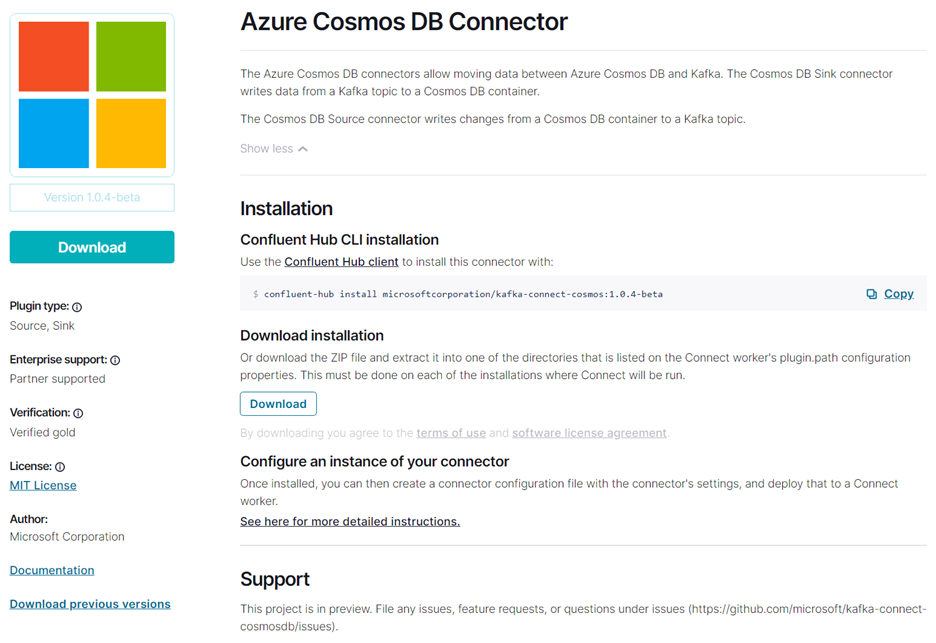

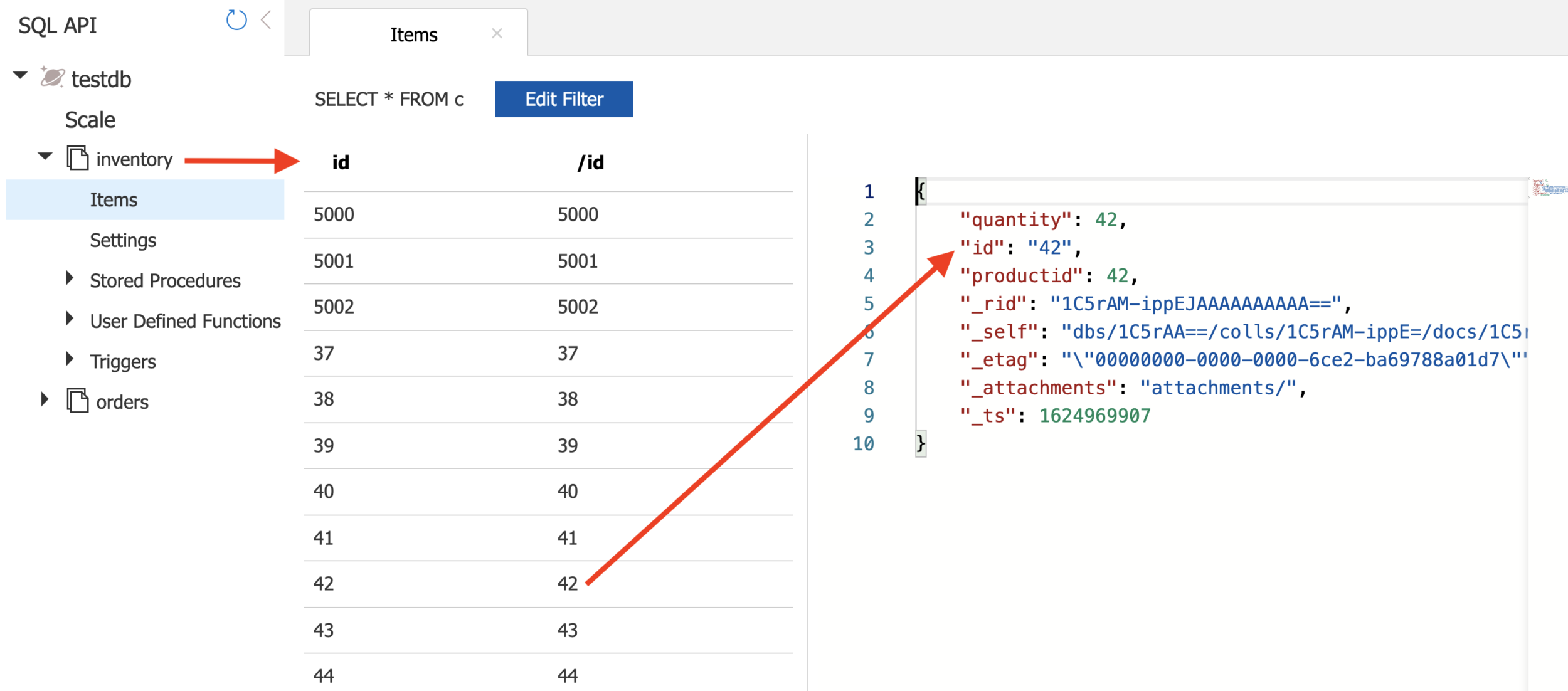

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems. With Kafka Connect you define connectors that move large data sets into and out of Kafka. Kafka Connect for Azure Cosmos DB provides connectors that read data from and write data to Azure Cosmos DB.

Follow this guide to create a connector from Control Center, but instead of the DatagenConnector option, select the CosmosDBSinkConnector tile. When configuring the sink connector, enter the same values you used in the JSON configuration file.

Go to GitHub to download the Azure Cosmos DB connector and to find details and documentation for the Azure Cosmos DB Kafka source connector and the Azure Cosmos DB Kafka sink connector.

The fully managed Azure Cosmos DB Sink V2 connector for Confluent Cloud writes data to an Azure Cosmos DB database. The connector polls data from Apache Kafka® and writes it to database containers, supporting high-throughput data ingestion with configurable write strategies.
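
To make "configurable write strategies" concrete, here is a minimal in-memory Python model of how different strategies could treat an incoming record. The strategy values are modeled on names such as `ItemOverwrite`, `ItemAppend`, and `ItemDelete` that appear in the self-managed V2 connector's documentation, but the exact set available in the Confluent Cloud Sink V2 connector and their conflict handling are assumptions here. The `WriteStrategy` enum and `apply_record` helper are hypothetical, and partition keys are ignored.

```python
from enum import Enum


class WriteStrategy(Enum):
    # Hypothetical enum; values mirror strategy names assumed from the V2 docs.
    OVERWRITE = "ItemOverwrite"  # upsert: create the item or replace one with the same id
    APPEND = "ItemAppend"        # create only: keep the existing item if the id already exists
    DELETE = "ItemDelete"        # remove the item with the same id, if present


def apply_record(container, record, strategy):
    """Apply one record value to an in-memory 'container' dict keyed by item id."""
    item_id = record["id"]  # Cosmos DB items are identified by their 'id' property
    if strategy is WriteStrategy.OVERWRITE:
        container[item_id] = record
    elif strategy is WriteStrategy.APPEND:
        container.setdefault(item_id, record)
    elif strategy is WriteStrategy.DELETE:
        container.pop(item_id, None)
    else:
        raise ValueError(f"unsupported strategy: {strategy}")


container = {}
apply_record(container, {"id": "1", "v": "first"}, WriteStrategy.APPEND)
apply_record(container, {"id": "1", "v": "second"}, WriteStrategy.APPEND)  # ignored: id exists
print(container["1"]["v"])  # first
apply_record(container, {"id": "1", "v": "third"}, WriteStrategy.OVERWRITE)
print(container["1"]["v"])  # third
apply_record(container, {"id": "1"}, WriteStrategy.DELETE)
print(container)  # {}
```

The point of the model is that the same stream of records can yield different container contents depending on the chosen strategy, which is why the choice matters for idempotent replays.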

Getting Started With Kafka Connector For Azure Cosmos DB Using Docker

Read on to learn more about the new connector and how to hook everything up. This new version brings significant improvements to both the source and sink connectors, improving scalability, performance, and flexibility for developers working with Azure Cosmos DB. New self-managed Azure Cosmos DB source and sink connectors for Kafka Connect, which enable smooth data transfers between Apache Kafka and Azure Cosmos DB, are available now and can be downloaded from Confluent Hub or GitHub.
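
With a self-managed setup (for example, a Kafka Connect worker started with Docker that has the connector installed on its plugin path), the sink is typically registered by posting its JSON definition to the Kafka Connect REST API (`POST /connectors`). The Python sketch below assumes the worker listens on `http://localhost:8083` and uses the property names documented for the original self-managed `CosmosDBSinkConnector`; the Sink V2 and Confluent Cloud connectors use different property names, so verify them against the documentation for your version. The helper names, database, container, endpoint, and key are placeholders.

```python
import json
import urllib.request


def build_sink_connector(name, topic, container, endpoint, key, database):
    """Return a connector definition using assumed v1 sink property names."""
    return {
        "name": name,
        "config": {
            "connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",
            "tasks.max": "1",
            "topics": topic,
            "key.converter": "org.apache.kafka.connect.json.JsonConverter",
            "key.converter.schemas.enable": "false",
            "value.converter": "org.apache.kafka.connect.json.JsonConverter",
            "value.converter.schemas.enable": "false",
            "connect.cosmos.connection.endpoint": endpoint,
            "connect.cosmos.master.key": key,
            "connect.cosmos.databasename": database,
            # Route the topic to a container using the 'topic#container' format.
            "connect.cosmos.containers.topicmap": f"{topic}#{container}",
        },
    }


def register_connector(definition, connect_url="http://localhost:8083"):
    """POST the definition to a Kafka Connect worker and return its JSON reply."""
    request = urllib.request.Request(
        f"{connect_url}/connectors",
        data=json.dumps(definition).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


definition = build_sink_connector(
    name="cosmosdb-sink-connector",
    topic="hotels",
    container="kafka",
    endpoint="https://<account-name>.documents.azure.com:443/",  # placeholder
    key="<primary-key>",  # placeholder: load from a secret store in practice
    database="kafkaconnect",
)
print(json.dumps(definition, indent=2))
# register_connector(definition)  # needs a running Connect worker with the plugin installed
```

The same definition, saved as a JSON file, is also what you would mirror when filling in the connector form in Control Center.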
