Run a Local LLM on Raspberry Pi 5 with a Single Executable: Llamafile CLI Version

Since early April, Raspberry Pi OS has shipped with native Vulkan driver support. I used the TinyLlama llamafile in its F16 and Q8_0 variants to run a sample Q&A. This guide walks through turning a custom Llama model, Llama 3, into a llamafile so that it runs locally as a standalone executable.

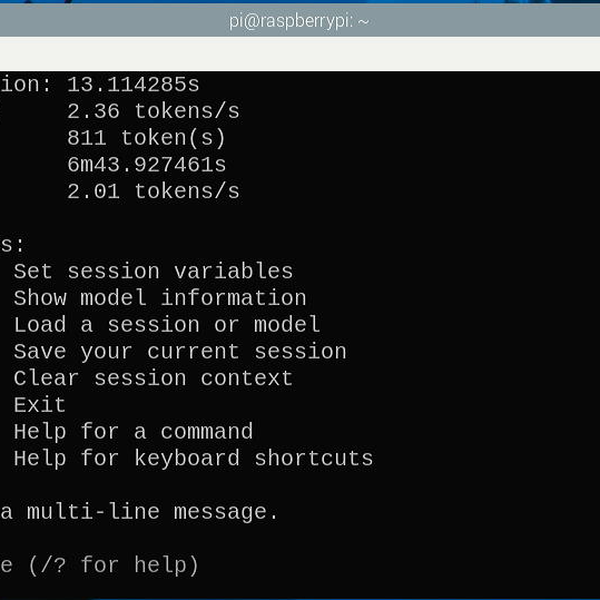

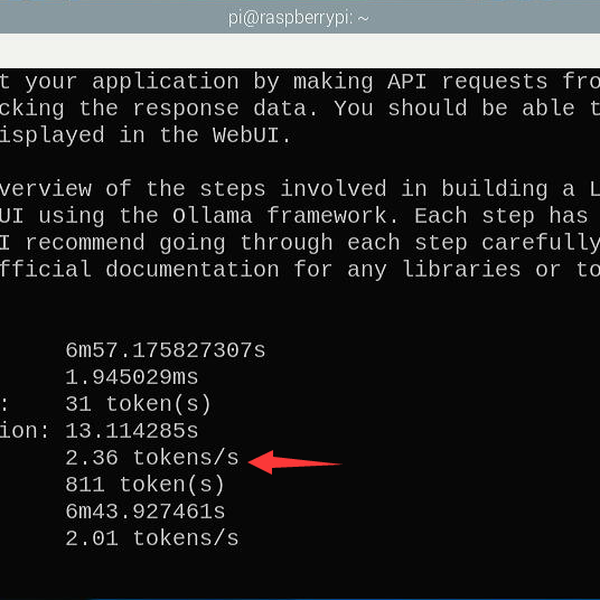

The process uses commands to download and build the llama project, and to download and quantize a model that can run on the Raspberry Pi. I wanted to see whether I could run llamafile, which lets you distribute and run LLMs as a single file, on a Raspberry Pi 5. I hope to use my own custom front end to interact with the model in different ways. I have a few ideas, but I want to get it running first. Is it fast? Yes, a small model runs without trouble.

Ollama is a lightweight, optimized framework for running large language models (LLMs) locally on personal devices, including computers and single-board computers like the Raspberry Pi 5. It is designed to make deploying and interacting with AI models straightforward without cloud-based processing. This article is a guide to running your own local LLM on a Raspberry Pi, an affordable and adaptable single-board computer that is popular among hobbyists and educators.
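As a rough illustration of driving a llamafile from a custom front end, the sketch below wraps a one-shot CLI call in Python. Several things here are assumptions rather than details from this article: the file name `model.llamafile` is hypothetical, the `--cli`, `-p`, and `-n` flags are the ones llamafile inherits from llama.cpp as far as I know, and the file is launched through `sh` on the assumption that the executable format may not be directly runnable by `subprocess` on every Linux setup.

```python
import subprocess
from pathlib import Path


def build_llamafile_command(llamafile: Path, prompt: str, max_tokens: int = 128) -> list[str]:
    """Assemble the argument list for a single non-interactive completion."""
    return [
        "sh", str(llamafile),    # assumption: launch via sh instead of executing the file directly
        "--cli",                 # assumption: selects command-line mode rather than the web server
        "-p", prompt,            # prompt text
        "-n", str(max_tokens),   # upper bound on generated tokens
    ]


def run_llamafile(llamafile: Path, prompt: str, max_tokens: int = 128) -> str:
    """Run the llamafile once and return whatever it wrote to stdout."""
    result = subprocess.run(
        build_llamafile_command(llamafile, prompt, max_tokens),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout


# Hypothetical usage; "model.llamafile" stands in for whichever file you downloaded:
# print(run_llamafile(Path("model.llamafile"), "Why is the sky blue?"))
```

Keeping the command construction separate from the process call makes it easy to inspect or log the exact arguments before anything runs on the Pi.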

A “llamafile” is a single file that combines the model weights and a specially compiled version of llama.cpp with Cosmopolitan Libc, allowing the model to run locally on most computers without additional dependencies or installations. In this tutorial, you will work with the llamafile of the LLaVA model using the second method. It is a 7-billion-parameter model quantized to 4 bits; you can chat with it, upload images, and ask questions about them. My TinyLlama Q&A results on the Raspberry Pi 5 differ from what Justine showed. We need to clone the GitHub repository of the llama model, change into the newly downloaded directory, and build the project files. In the meantime, we need to start downloading the model.
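To get a feel for why quantization matters on a board with limited RAM, here is a back-of-the-envelope size estimate. It is only a sketch: it uses nominal bits per weight (real quantization formats add some per-block overhead), it ignores the context cache and runtime memory, and the 1.1 billion parameter count for TinyLlama is my own figure, not one taken from this article.

```python
def weight_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# (label, parameter count, nominal bits per weight)
models = [
    ("7B model, 4-bit", 7e9, 4),
    ("TinyLlama 1.1B, F16 (assumed size)", 1.1e9, 16),
    ("TinyLlama 1.1B, Q8_0 (assumed size)", 1.1e9, 8),
]

for label, params, bits in models:
    print(f"{label}: ~{weight_size_gb(params, bits):.1f} GB of weights")
```

By this estimate, the 4-bit 7B model needs roughly a quarter of the space its 16-bit version would, which is what makes it plausible to load on a single-board computer at all.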

