Retrieval Augmented Generation Using Your Data with LLMs

In this survey, we propose a RAG task categorization method that classifies user queries into four levels based on the type of external data required and the primary focus of the task: explicit fact queries, implicit fact queries, interpretable rationale queries, and hidden rationale queries. This compact guide to RAG explains how to build a generative AI application using LLMs that have been augmented with enterprise data. We'll dive deep into architecture, implementation best practices, and how to evaluate gen AI application performance.
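One way to put the four query levels to work, for example when tagging an evaluation set so results can be reported per category, is to encode them as an enum. This is a minimal sketch: the class name `RagQueryLevel`, the numbering (which simply follows the order the levels are listed in), and the helpers `parse_level` and `count_by_level` are hypothetical. The survey defines the categories; deciding which level a given query belongs to still takes human or model judgment.

```python
from collections import Counter
from enum import IntEnum


class RagQueryLevel(IntEnum):
    """The four query levels from the survey, numbered in the order listed."""

    EXPLICIT_FACT = 1
    IMPLICIT_FACT = 2
    INTERPRETABLE_RATIONALE = 3
    HIDDEN_RATIONALE = 4


def parse_level(label: str) -> RagQueryLevel:
    """Map a free-text label such as 'implicit fact' to its enum member.

    Hypothetical helper; raises KeyError for labels that match no level.
    """
    return RagQueryLevel[label.strip().upper().replace(" ", "_")]


def count_by_level(labels: list[str]) -> Counter:
    """Count annotated queries per level, e.g. to break down evaluation results."""
    return Counter(parse_level(label) for label in labels)


if __name__ == "__main__":
    annotations = ["explicit fact", "Explicit Fact", "hidden rationale"]
    for level, n in sorted(count_by_level(annotations).items()):
        print(f"Level {int(level)} ({level.name}): {n}")
```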

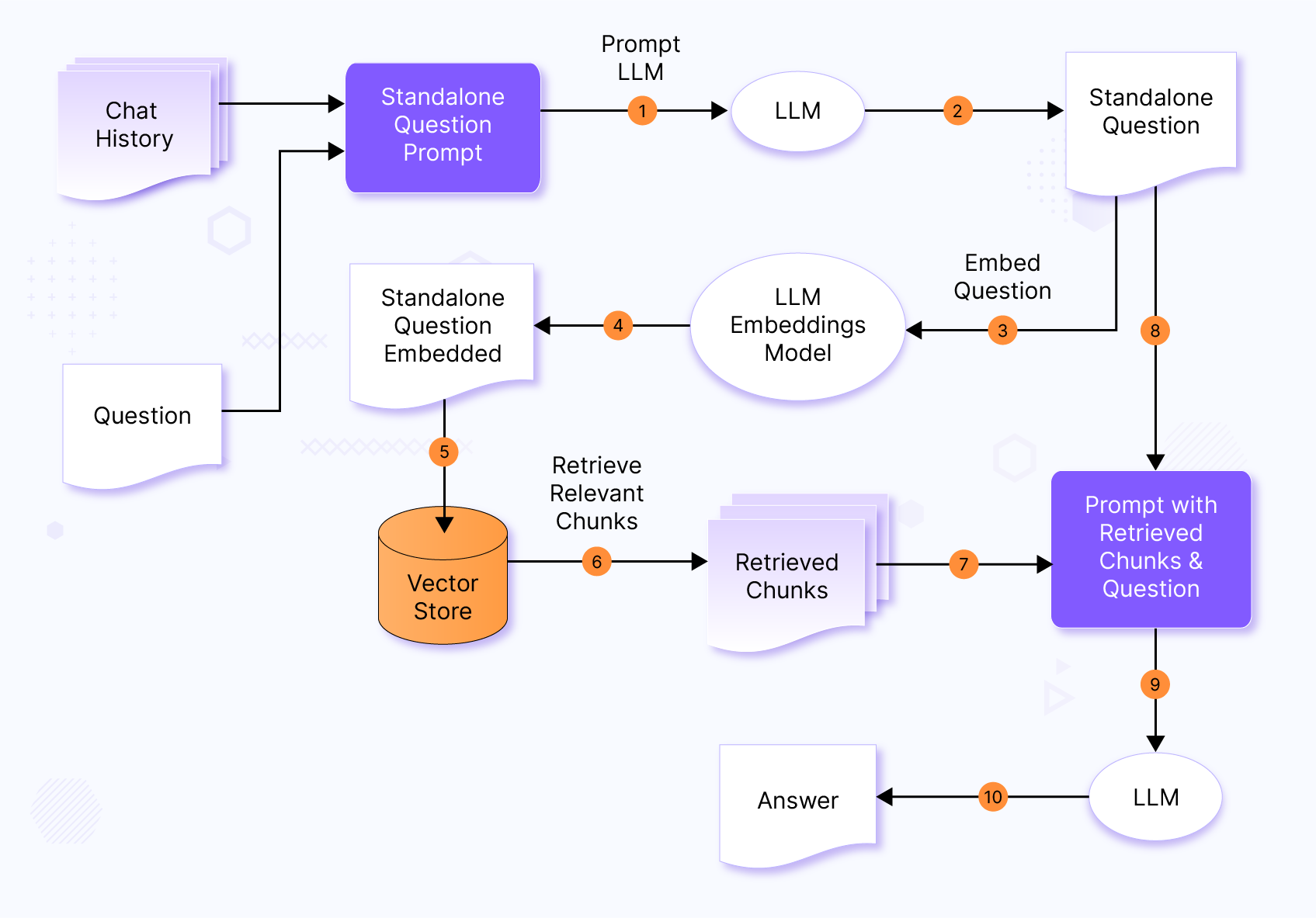

This article focuses on a popular technique called retrieval augmented generation (RAG), which augments the prompt with documents retrieved from a vector database. An off-the-shelf large language model (LLM) formulates a syntactically correct and often convincing-sounding answer to any prompt, but it can draw only on what it learned during training. Fine-tuning the LLM, using RAG, and fitting your data directly into the prompt context are different ways to ground its answers in your own data. This article explains the basics of RAG, an alternative to fine-tuning, and demonstrates how your data can be used with LLM-backed applications. In the form discussed here, the approach is based on prompting. RAG itself was introduced by Facebook AI Research (FAIR) and collaborators in 2020. It combines the capabilities of LLMs with external data sources, enabling more accurate responses.
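The retrieve-then-augment step can be sketched in plain Python. This is a toy under stated assumptions: `embed` uses bag-of-words counts in place of a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for a vector database, and the prompt template in `augment_prompt` is illustrative only. The result is the augmented prompt you would send to the LLM; no model is called here.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Minimal stand-in for a vector database: stores (vector, text) pairs."""

    def __init__(self) -> None:
        self._entries: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self._entries.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def augment_prompt(question: str, passages: list[str]) -> str:
    """Prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("Our support desk is open Monday to Friday, 9am to 5pm.")
    store.add("Refunds are processed within 14 days of a return.")
    store.add("The cafeteria serves lunch from noon.")
    question = "How long do refunds take?"
    print(augment_prompt(question, store.search(question, k=1)))
```

In a real application you would swap `embed` for an embedding model and the linear scan for a vector database's nearest-neighbor query; the shape of the pipeline (embed the query, rank stored chunks by similarity, paste the top k into the prompt) stays the same.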

Retrieval augmented generation is a hybrid approach that enhances the performance of traditional LLMs. While classic LLMs generate text based on their training data alone, RAG systems can pull in external information from knowledge bases or the web before generating a response; in other words, the framework adds a retrieval step before generation. By combining LLMs with real-time document retrieval, RAG connects static training data with evolving knowledge, helping AI systems produce accurate, timely, and context-rich responses. To address the limits of training-data-only generation, this tutorial explores how integrating retrieval mechanisms and structured knowledge can enhance LLM performance for web use, whether you are building a chatbot, a search assistant, or an enterprise knowledge tool.
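The ordering described here, retrieval first and generation second, fits in a few lines of Python. Because the knowledge base is external, adding a document changes the answer without touching the model. `KnowledgeBase`, `rag_answer`, and `fake_llm` are hypothetical names: word overlap stands in for a real retriever, and `fake_llm` is a placeholder for an actual LLM call that merely reports which context it received.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercased word set; punctuation is dropped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Hypothetical external store that can be updated without retraining the model.

    Ranking by word overlap is an assumption standing in for a real retriever.
    """

    def __init__(self) -> None:
        self.documents: list[str] = []

    def add(self, doc: str) -> None:
        self.documents.append(doc)

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        """Return up to k documents that share words with the query, best first."""
        query_words = tokenize(query)
        scored = [(len(query_words & tokenize(d)), d) for d in self.documents]
        scored = [item for item in scored if item[0] > 0]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [d for _, d in scored[:k]]


def rag_answer(query: str, kb: KnowledgeBase,
               generate: Callable[[str], str], k: int = 1) -> str:
    """Run the retrieval step first, then generate from the augmented prompt."""
    context = kb.retrieve(query, k)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
    return generate(prompt)


def fake_llm(prompt: str) -> str:
    """Placeholder for a real LLM call: echoes the first context line it received."""
    lines = prompt.split("\n")
    first = lines[1] if len(lines) > 1 and lines[1] else "(no context found)"
    return f"Based on: {first}"


if __name__ == "__main__":
    kb = KnowledgeBase()
    question = "What is the current price of the X100?"
    kb.add("The 2023 catalog lists the X100 at $499.")
    print(rag_answer(question, kb, fake_llm))
    # New information arrives: only the knowledge base changes, not the model.
    kb.add("As of this week, the X100 price is $449.")
    print(rag_answer(question, kb, fake_llm))
```

Passing `generate` in as a function keeps the retriever and the model decoupled, so the same pipeline can wrap any LLM client.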
