Regression Analysis Spss Step By Step Multiple Linear Regression Spss Lesson 7

I was just wondering why regression problems are called "regression" problems. What is the story behind the name? One definition of regression is "relapse to a less perfect or developed state."

Also, for OLS regression with an intercept, R² is the squared correlation between the predicted and the observed values, so it must be non-negative. For simple OLS regression with one predictor, this is equivalent to the squared correlation between the predictor and the dependent variable; again, this must be non-negative.
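The claim about R² can be checked numerically. The following is a minimal sketch in Python with NumPy rather than SPSS; the simulated data, the seed, and the helper names `ols_fit` and `r_squared` are assumptions made for illustration only.

```python
import numpy as np

def ols_fit(X, y):
    """Least-squares fit with an intercept; returns the fitted values."""
    A = np.column_stack([np.ones(len(y)), X])  # prepend the intercept column
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return A @ beta

def r_squared(y, y_hat):
    """R^2 = 1 - SS_res / SS_tot, with SS_tot centered on the mean of y."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# Simulated data (an assumption, not taken from the text above).
rng = np.random.default_rng(0)
x = rng.normal(size=200)
y = 2.0 - 1.5 * x + rng.normal(size=200)

y_hat = ols_fit(x, y)
r2 = r_squared(y, y_hat)
corr_fit_sq = np.corrcoef(y, y_hat)[0, 1] ** 2  # squared corr(observed, predicted)
corr_x_sq = np.corrcoef(x, y)[0, 1] ** 2        # squared corr(predictor, outcome)

print(r2, corr_fit_sq, corr_x_sq)
```

Under these assumptions the three printed values agree up to floating-point error, even though the slope is negative, because squaring the correlation removes its sign.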

A good residual vs. fitted plot has three characteristics: the residuals "bounce randomly" around the 0 line, which suggests that the assumption of a linear relationship is reasonable. The res...

Consider the following figure from Faraway's Linear Models with R (2005, p. 59). The first plot seems to indicate that the residuals and the fitted values are uncorrelated, as they should be in a...

Is it possible to have a (multiple) regression equation with two or more dependent variables? Sure, you could run two separate regression equations, one for each DV, but that doesn't seem like it...

There's not really a sharp line between the two: basis expansion allows linear regression to estimate rich relationships. Moreover, random Fourier features are an example of a linear-in-parameters model that also has connections to popular kernel methods, such as RBF kernel regression.
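The statement that residuals and fitted values should be uncorrelated can be illustrated with a small sketch. It assumes an OLS fit with an intercept on simulated data; the variable names and the data-generating process are hypothetical and not taken from Faraway's figure.

```python
import numpy as np

# Simulated data with two predictors (an assumption for illustration).
rng = np.random.default_rng(1)
n = 300
X = rng.normal(size=(n, 2))
y = 1.0 + 0.8 * X[:, 0] - 0.5 * X[:, 1] + rng.normal(scale=0.7, size=n)

# OLS with an intercept via least squares.
A = np.column_stack([np.ones(n), X])
beta, *_ = np.linalg.lstsq(A, y, rcond=None)
fitted = A @ beta
residuals = y - fitted

# With an intercept, OLS residuals average to zero and are
# uncorrelated with the fitted values by construction.
resid_mean = residuals.mean()
resid_fit_corr = np.corrcoef(fitted, residuals)[0, 1]

print(resid_mean, resid_fit_corr)
```

Because this zero correlation holds by construction, a residual vs. fitted plot is mainly useful for spotting curvature or changing spread, which a single correlation coefficient would not reveal.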

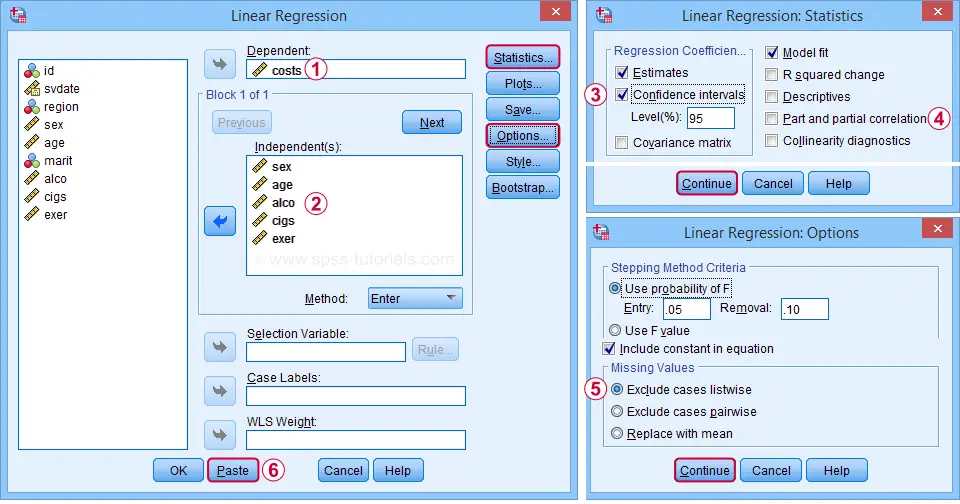

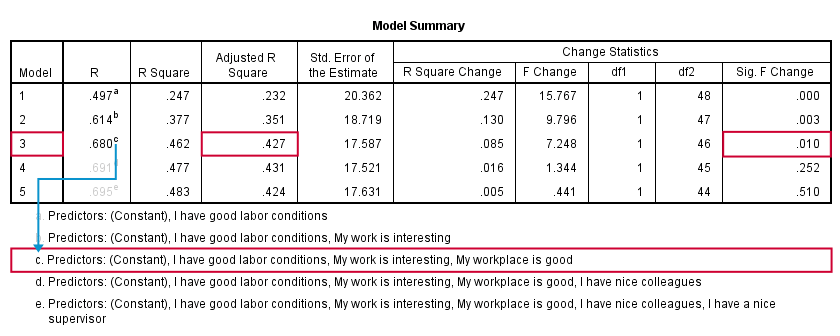

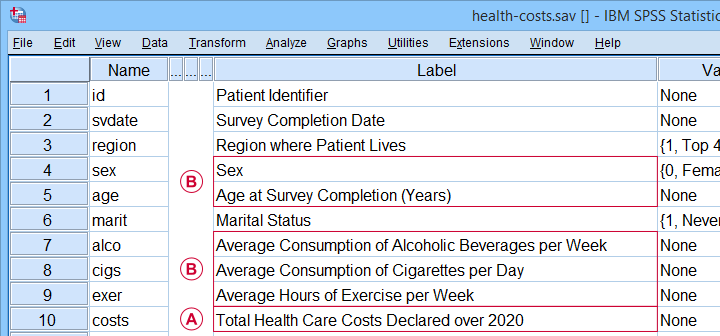

For the top set of points, the red ones, the regression line is the best possible regression line that also passes through the origin. It just happens that this regression line is worse than using a horizontal line, and hence gives a negative R-squared. Undefined R-squared...

I have fish density data that I am trying to compare between several different collection techniques. The data has lots of zeros, and the histogram looks vaguely appropriate for a Poisson distribut...

Performing regression adjustment relates closely to double robustness (see Kang & Schafer's (2007) "Demystifying Double Robustness: A Comparison of Alternative Strategies for Estimating a Population Mean from Incomplete Data" for a detailed exposition), and an underfitted model invalidates its utility in getting more precise estimates.

This multiple regression technique is based on previous time series values, especially those within the latest periods, and allows us to extract a very interesting "inter-relationship" between multiple past values that work to explain a future value.
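The negative R-squared for a line forced through the origin can be reproduced with a short sketch. The five data points below are made up for illustration (they are not the red points from the original figure), and R-squared is computed against the centered total sum of squares, i.e. relative to a horizontal line at the mean of y. Some statistical packages report an uncentered R-squared for no-intercept models instead, so their output may differ.

```python
import numpy as np

def r_squared(y, y_hat):
    """R^2 relative to a horizontal line at mean(y)."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# Hypothetical points: a high intercept with a downward trend.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([9.2, 7.9, 7.1, 5.8, 5.0])

# Best line through the origin: slope = sum(x*y) / sum(x^2).
slope_origin = np.sum(x * y) / np.sum(x ** 2)
r2_origin = r_squared(y, slope_origin * x)

# Ordinary fit with an intercept, for comparison.
A = np.column_stack([np.ones(len(x)), x])
beta, *_ = np.linalg.lstsq(A, y, rcond=None)
r2_intercept = r_squared(y, A @ beta)

print(r2_origin, r2_intercept)
```

With these points the through-origin line has to slope upward to get near the data, so it fits worse than the horizontal mean line and `r2_origin` comes out negative, while the fit with an intercept stays between 0 and 1.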

