Redefining Transformers How Simple Feed Forward Neural Networks Can

Researchers from ETH Zurich analyze the efficacy of using standard shallow feed-forward networks to emulate the attention mechanism in the Transformer model, a leading architecture for sequence-to-sequence tasks. Feedforward is a concept that originates from the field of neural networks. In essence, it refers to the process of passing information forward through the network, starting from the input layer.
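The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the study: the helper names (`dense`, `relu`, `forward`) and the toy weights are hypothetical, chosen only to show information moving from the input layer to the output.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: out[j] = sum_i inputs[i] * weights[i][j] + biases[j]."""
    return [
        sum(x * w_row[j] for x, w_row in zip(inputs, weights)) + biases[j]
        for j in range(len(biases))
    ]

def relu(values):
    """Element-wise ReLU nonlinearity."""
    return [max(0.0, v) for v in values]

def forward(inputs, layers):
    """Pass `inputs` through each (weights, biases) pair in order, input to output."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:  # apply the nonlinearity on hidden layers only
            activations = relu(activations)
    return activations

# Hypothetical toy network: 2 inputs -> 3 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0, 0.5], [0.5, 1.0, -1.0]], [0.0, 0.0, 0.0]),
    ([[1.0], [1.0], [1.0]], [0.5]),
]
output = forward([2.0, 1.0], layers)
print(output)  # hidden activations are [2.5, 0.0, 0.0], so this prints [3.0]
```

There are no loops back to earlier layers: each layer only consumes the output of the one before it, which is what "feed forward" refers to.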

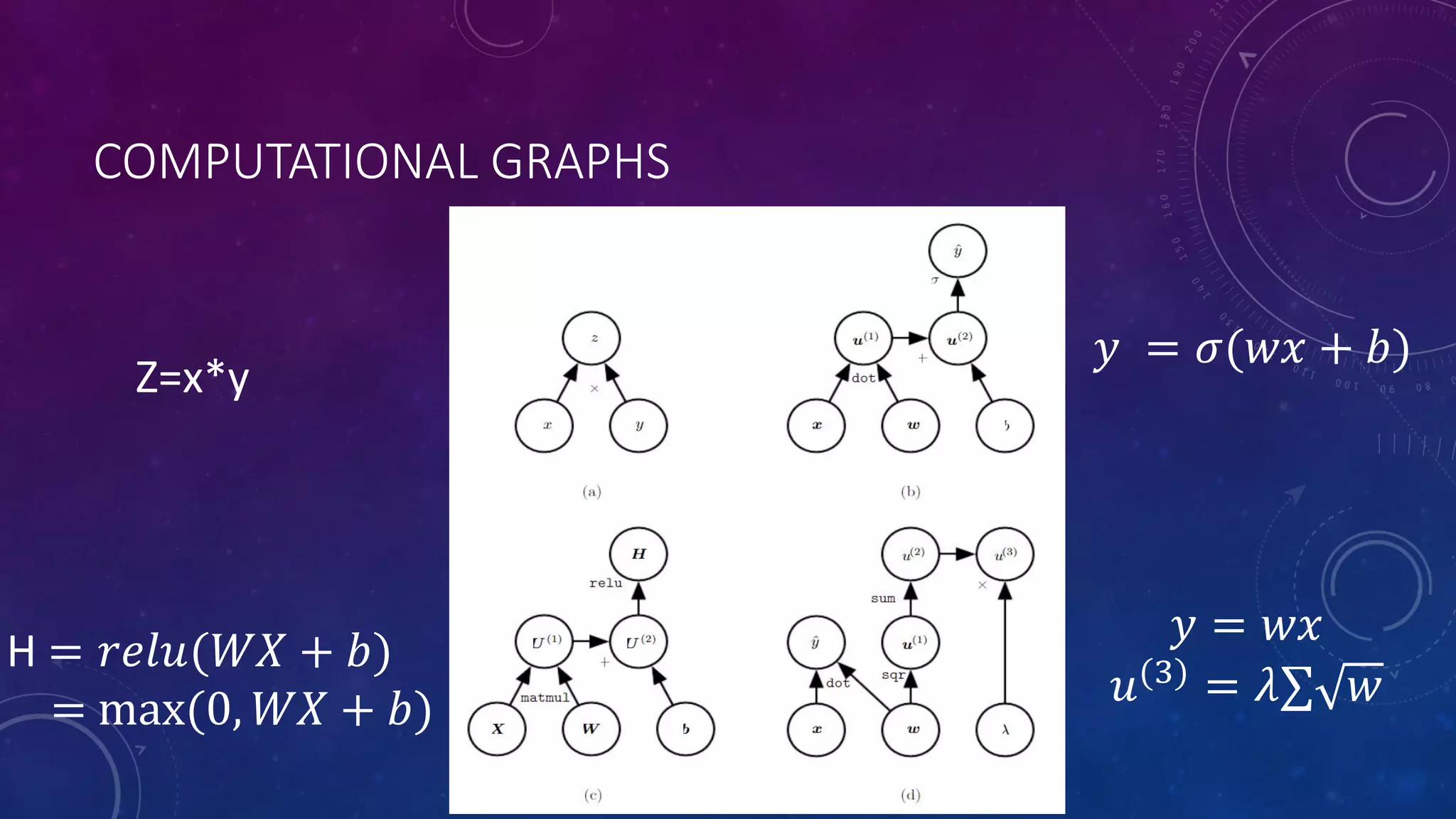

In our work, we show that simple feed-forward neural networks (SFNNs) can match or even exceed the performance of state-of-the-art models while being simpler, smaller, faster, and more robust.

This fourth section covers the essential feed-forward layer, a fundamental element found in most deep learning architectures. While discussing vital topics common to deep learning, we'll emphasize their significant role in shaping the Transformer architecture.

The study shows that shallow feed-forward networks can effectively mimic attention mechanisms, simplifying complex sequence-to-sequence architectures. We substitute key elements of the attention mechanism in the Transformer with simple feed-forward networks, trained using the original components via knowledge distillation.
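To make the distillation idea concrete, here is a rough sketch of the training signal only. Everything specific in it is an assumption, since the text above does not give these details: the teacher is plain scaled dot-product self-attention with identity projections, the student is a one-hidden-layer network over the flattened sequence, and the loss is mean squared error. The optimizer step is omitted.

```python
import math
import random

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_teacher(tokens):
    """Toy teacher: scaled dot-product self-attention with identity projections."""
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in tokens]
        weights = softmax(scores)
        outputs.append([sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)])
    return outputs

def student_forward(tokens, w1, b1, w2, b2):
    """Shallow student (assumed shape): flatten sequence, one ReLU layer, reshape back."""
    flat = [v for tok in tokens for v in tok]
    hidden = [max(0.0, sum(x * w1[i][j] for i, x in enumerate(flat)) + b1[j])
              for j in range(len(b1))]
    out = [sum(h * w2[j][k] for j, h in enumerate(hidden)) + b2[k] for k in range(len(b2))]
    dim = len(tokens[0])
    return [out[p * dim:(p + 1) * dim] for p in range(len(tokens))]

def distillation_loss(student_out, teacher_out):
    """Mean squared error between student and teacher outputs (assumed objective)."""
    diffs = [(s - t) ** 2
             for s_row, t_row in zip(student_out, teacher_out)
             for s, t in zip(s_row, t_row)]
    return sum(diffs) / len(diffs)

# Hypothetical toy setup: 3 tokens of width 2, student hidden size 4.
random.seed(0)
seq_len, dim, hidden_size = 3, 2, 4
tokens = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(seq_len)]
flat_size = seq_len * dim
w1 = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(flat_size)]
b1 = [0.0] * hidden_size
w2 = [[random.uniform(-0.5, 0.5) for _ in range(flat_size)] for _ in range(hidden_size)]
b2 = [0.0] * flat_size

teacher_out = attention_teacher(tokens)
student_out = student_forward(tokens, w1, b1, w2, b2)
loss = distillation_loss(student_out, teacher_out)  # training would minimize this value
```

Because the student reads a flattened sequence, this particular sketch only works for a fixed sequence length; handling variable lengths (for example by padding) is left out.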

Our experiments, conducted on the IWSLT2017 dataset, reveal the capacity of these "attentionless Transformers" to rival the performance of the original architecture. Through rigorous ablation studies and experiments with various replacement network types and sizes, we offer insights that support the viability of our approach.

Read this chapter to understand the FFNN sublayer, its role in the Transformer, and how to implement an FFNN in the Transformer architecture using the Python programming language. In the Transformer architecture, the FFNN sublayer sits just above the multi-head attention sublayer.

To understand the Transformer neural network architecture, you must first know vector transformations, or feature mapping, and then the concept of vectorization (parallelizing computation using matrices and GPUs).

Recap: what did we learn in the Transformer block? In day 4, we saw how multi-head attention allows each word in a sentence to look at the others and decide what matters.
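The FFNN sublayer mentioned above can be sketched as follows. In the original Transformer it computes FFN(x) = max(0, xW1 + b1)W2 + b2, applied to every position separately with the same weights. The function names and toy weights below are hypothetical, layer normalization is left out for brevity, and plain lists stand in for the matrices a real implementation would use.

```python
def position_wise_ffn(token, w1, b1, w2, b2):
    """FFN(x) = max(0, x W1 + b1) W2 + b2 for a single token vector."""
    hidden = [max(0.0, sum(x * w1[i][j] for i, x in enumerate(token)) + b1[j])
              for j in range(len(b1))]
    return [sum(h * w2[j][k] for j, h in enumerate(hidden)) + b2[k]
            for k in range(len(b2))]

def ffn_sublayer(sequence, w1, b1, w2, b2):
    """Apply the same FFN to every position, then add the residual connection."""
    return [
        [x + y for x, y in zip(token, position_wise_ffn(token, w1, b1, w2, b2))]
        for token in sequence
    ]

# Hypothetical toy weights: model width 2, inner width 4.
# They are chosen so the FFN reproduces its input, which makes the result easy to check.
w1 = [[1.0, 0.0, -1.0, 0.0],
      [0.0, 1.0, 0.0, -1.0]]
b1 = [0.0, 0.0, 0.0, 0.0]
w2 = [[1.0, 0.0],
      [0.0, 1.0],
      [-1.0, 0.0],
      [0.0, -1.0]]
b2 = [0.0, 0.0]

attention_output = [[1.0, -2.0], [0.5, 3.0]]  # stand-in for the attention sublayer's output
result = ffn_sublayer(attention_output, w1, b1, w2, b2)
print(result)  # [[2.0, -4.0], [1.0, 6.0]]: each token plus its (identity) FFN output
```

Unlike attention, this sublayer never mixes information between positions: reordering the tokens simply reorders the outputs.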
