Quantization of Convolutional Neural Networks: Model Quantization

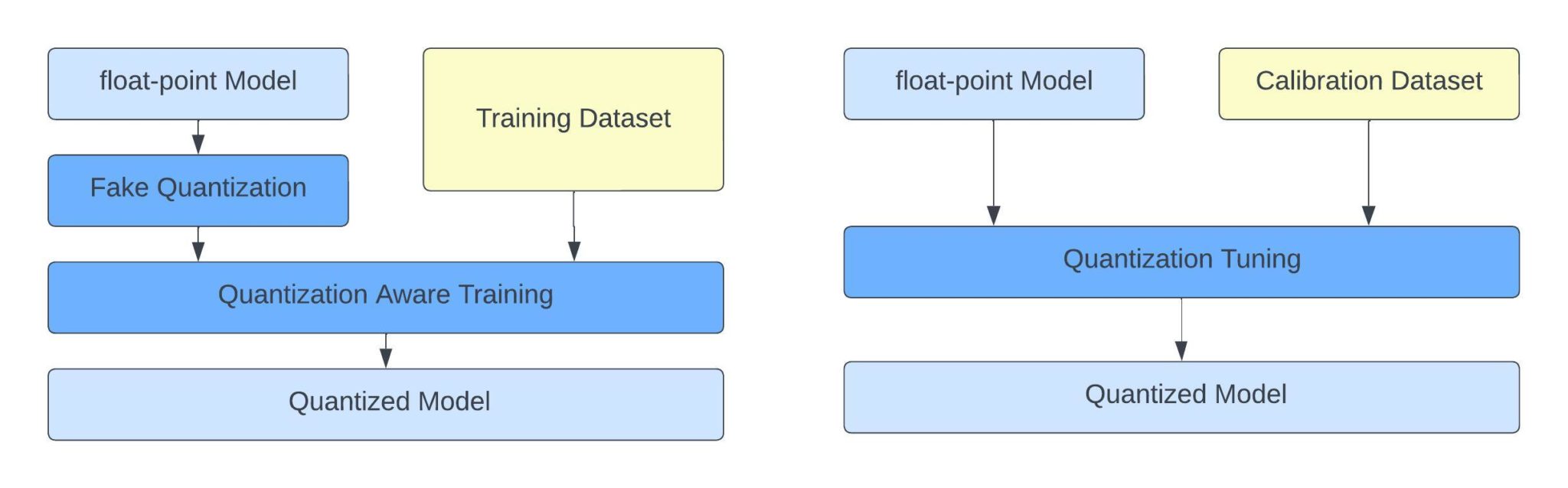

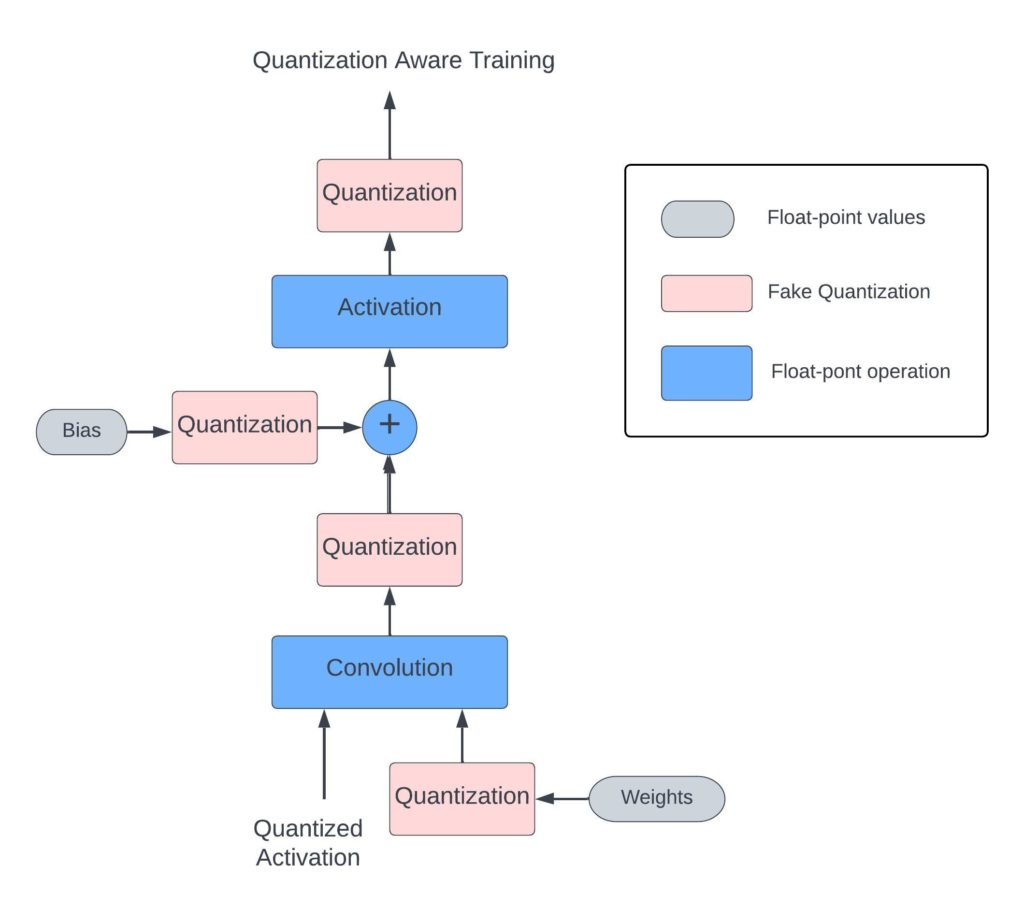

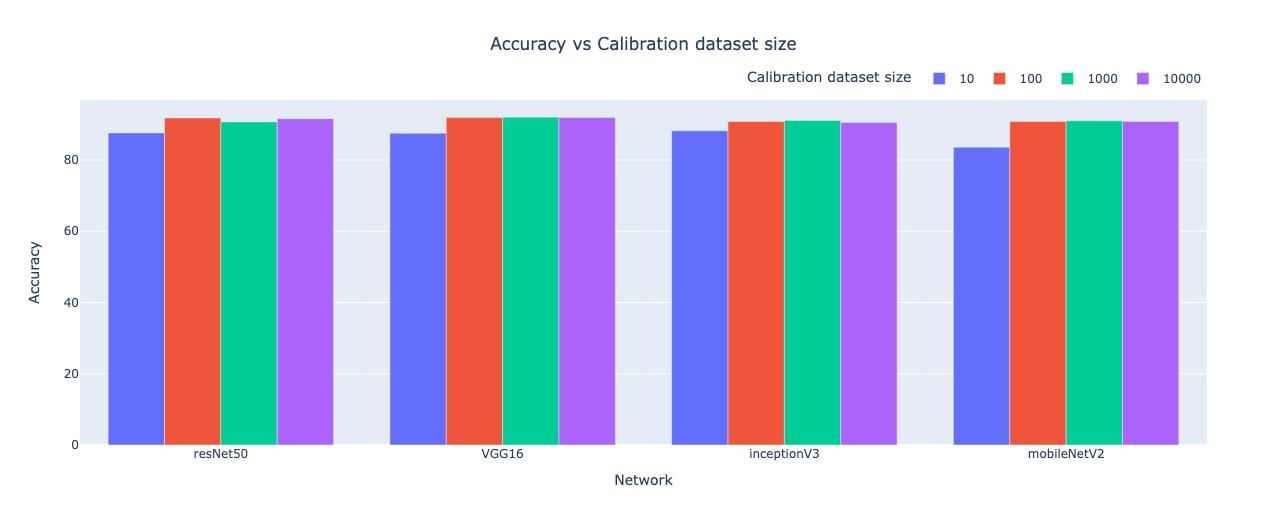

In general, quantization is the process of mapping continuous, infinite values to a smaller set of discrete, finite values. In this blog, we will talk about quantization in … Reducing the precision of model weights can make deep neural networks run faster and use less GPU memory, while largely preserving model accuracy. If ever there were a salient example of a counter-intuitive …
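Uniform affine (asymmetric) quantization is one common way to make this definition concrete. It maps real values onto the 256 levels of an 8-bit integer. The sketch below is a minimal pure-Python illustration under that assumption. The function names `compute_qparams`, `quantize` and `dequantize` are hypothetical and do not come from any particular library. Each value is stored as an integer `q` and recovered approximately as `scale * (q - zero_point)`.

```python
from typing import List, Tuple


def compute_qparams(values: List[float], num_bits: int = 8) -> Tuple[float, int]:
    """Return (scale, zero_point) for affine quantization to [0, 2**num_bits - 1]."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Extend the range to include 0.0 so that real zero maps exactly to an integer.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:  # all values are zero: any positive scale works
        return 1.0, 0
    scale = (hi - lo) / (qmax - qmin)
    zero_point = int(round(qmin - lo / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values: List[float], scale: float, zero_point: int, num_bits: int = 8) -> List[int]:
    """Map each float to the nearest integer level, clamped to the unsigned range."""
    qmin, qmax = 0, 2 ** num_bits - 1
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(qvalues: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats; the rounding error is at most scale / 2 per value."""
    return [scale * (q - zero_point) for q in qvalues]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
    scale, zp = compute_qparams(weights)
    q = quantize(weights, scale, zp)
    # Stored as uint8, each q takes 1 byte instead of 4 bytes for a float32 weight.
    print(q, dequantize(q, scale, zp))
```

Storing 8-bit integers instead of 32-bit floats cuts weight memory by a factor of four. The price is a bounded rounding error per value.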

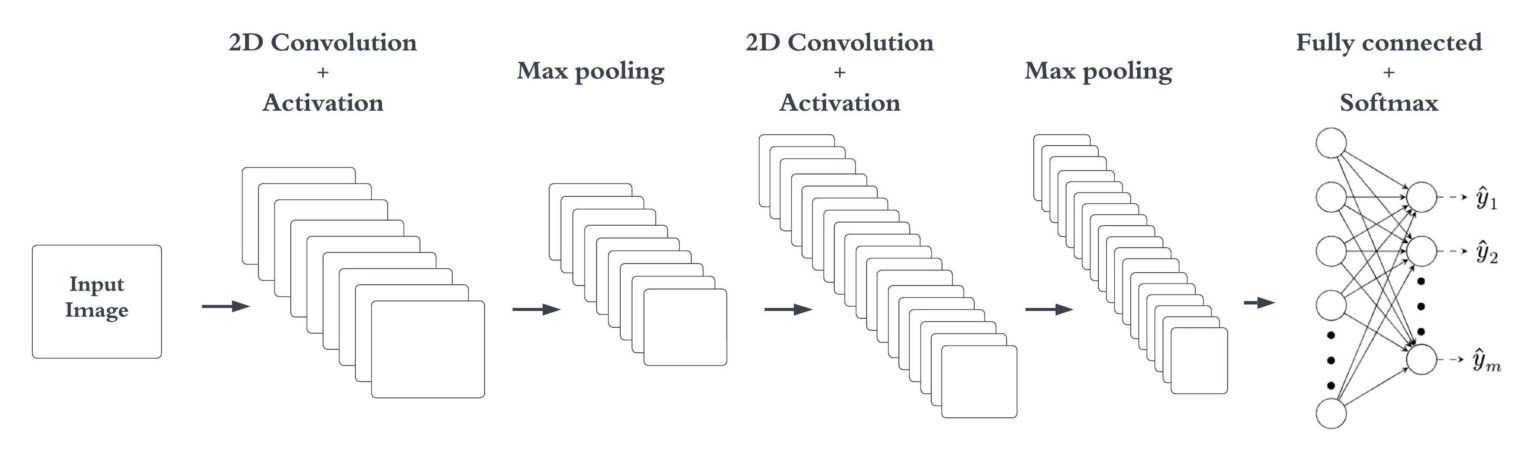

Three techniques have emerged as potential game changers in the domain of model compression: GPTQ, LoRA, and QLoRA. GPTQ is a post-training quantization method, meaning it compresses models after they have been trained. LoRA is, strictly speaking, a parameter-efficient fine-tuning method rather than a quantization technique. QLoRA combines LoRA adapters with a 4-bit quantized base model.

The learning capability of convolutional neural networks (CNNs) comes from combining many feature extraction layers that make full use of large amounts of data. However, they often …
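The sketch below is a hedged illustration of post-training weight quantization applied to a CNN. It quantizes a convolution layer's weights to int8, with a separate symmetric scale for each output channel. It uses plain round-to-nearest, which is a simpler baseline and not GPTQ. GPTQ additionally uses calibration data and second-order (Hessian) information to adjust the not-yet-quantized weights so that they compensate for rounding error. The function names are hypothetical. Each inner list is assumed to hold one output channel's kernel, flattened from shape `(in_channels, kH, kW)`.

```python
from typing import List, Tuple


def quantize_per_channel_symmetric(
    weights: List[List[float]], num_bits: int = 8
) -> Tuple[List[List[int]], List[float]]:
    """Round-to-nearest symmetric quantization with one scale per output channel.

    weights[c] is the flattened kernel of output channel c (an assumed layout).
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for int8; the range used is [-127, 127]
    qweights: List[List[int]] = []
    scales: List[float] = []
    for channel in weights:
        max_abs = max(abs(w) for w in channel)
        # Each channel gets its own scale, so small-range channels keep resolution.
        scale = max_abs / qmax if max_abs > 0 else 1.0
        q = [max(-qmax, min(qmax, round(w / scale))) for w in channel]
        qweights.append(q)
        scales.append(scale)
    return qweights, scales


def dequantize_per_channel(qweights: List[List[int]], scales: List[float]) -> List[List[float]]:
    """Recover approximate float weights channel by channel."""
    return [[q * s for q in channel] for channel, s in zip(qweights, scales)]


if __name__ == "__main__":
    # Two hypothetical output channels with very different weight ranges.
    conv_weights = [[0.5, -0.25, 0.1], [0.01, -0.02, 0.005]]
    qw, scales = quantize_per_channel_symmetric(conv_weights)
    print(qw, scales)
    print(dequantize_per_channel(qw, scales))
```

Per-channel scales are a common choice for convolution weights because different output channels can have very different weight ranges. With a single per-tensor scale, a channel with small weights would be rounded to only a few integer levels.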
