Prompt Tuning Based Adapter For Vision Language Model Adaption Deepai

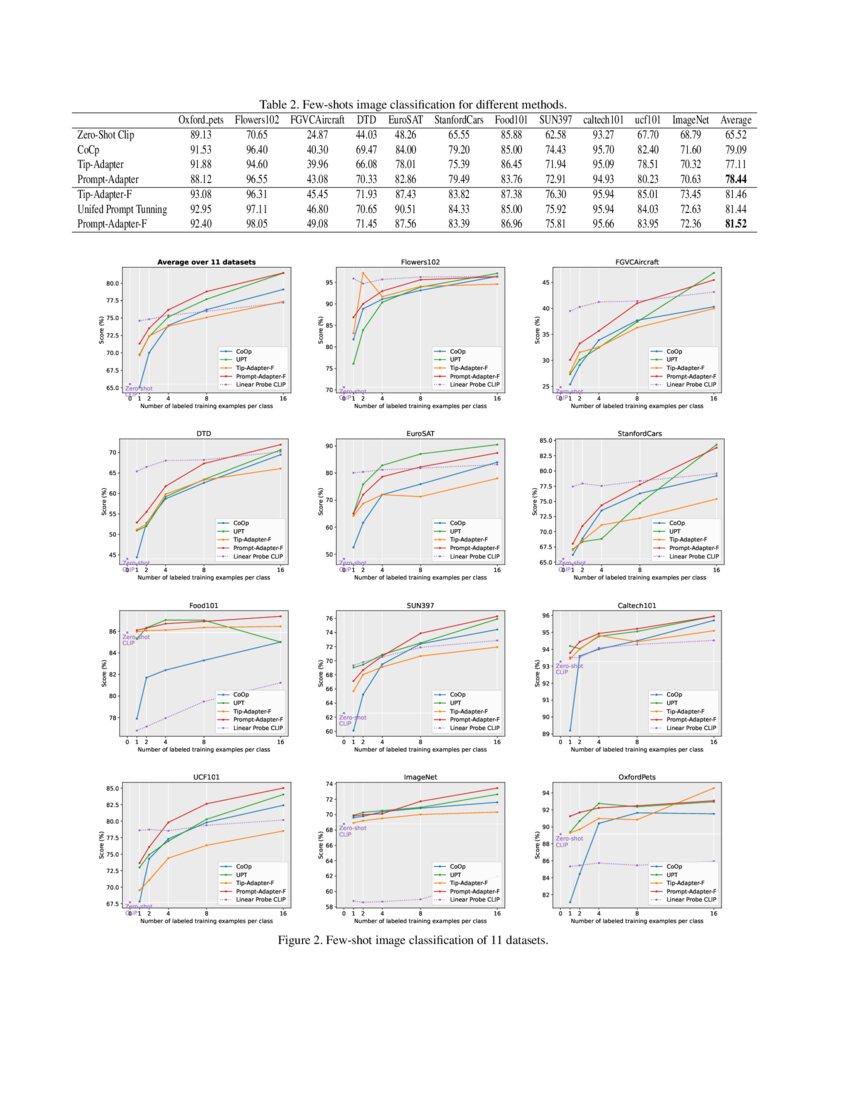

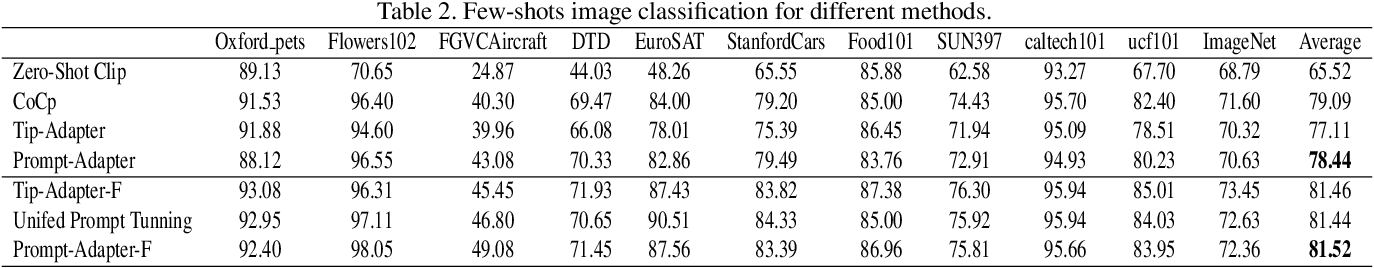

We explore prompt tuning with multi-task pre-trained initialization and find that it can significantly improve model performance. Based on our findings, we introduce a new model, termed Prompt-Adapter, that combines pre-trained prompt tuning with an efficient adaptation network. A related approach is a deep continuous prompting method, dubbed ADAPT, that encourages heterogeneous context lengths; the context lengths are determined automatically by iteratively pruning context tokens.
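The iterative pruning idea can be sketched as follows. This is only an illustration under stated assumptions: the source does not say how context tokens are scored, so the per-token importance scores, the function name `prune_context`, and the global budget `keep_total` are hypothetical. A real method would likely recompute the scores between pruning rounds rather than use fixed ones.

```python
def prune_context(scores, keep_total):
    """Iteratively drop the least important context token until only
    `keep_total` tokens remain across all layers.

    scores[layer][i] is a (hypothetical) importance score for context
    token i in that layer. Returns the surviving token indices per layer.
    """
    keep_total = max(keep_total, 0)
    alive = [list(range(len(layer))) for layer in scores]
    while sum(len(tokens) for tokens in alive) > keep_total:
        # Find the lowest-scoring token that is still alive, in any layer.
        layer, idx = min(
            ((l, i) for l, tokens in enumerate(alive) for i in tokens),
            key=lambda pos: scores[pos[0]][pos[1]],
        )
        alive[layer].remove(idx)  # prune exactly one token per iteration
    return alive


# Three prompt layers that all start with three context tokens.
scores = [
    [0.90, 0.10, 0.50],
    [0.20, 0.80, 0.30],
    [0.05, 0.60, 0.70],
]
surviving = prune_context(scores, keep_total=5)
context_lengths = [len(tokens) for tokens in surviving]
print(surviving)        # [[0, 2], [1], [1, 2]]
print(context_lengths)  # [2, 1, 2]
```

Because tokens are removed one at a time from whichever layer currently holds the weakest token, the layers end up with different (heterogeneous) context lengths instead of one shared length.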

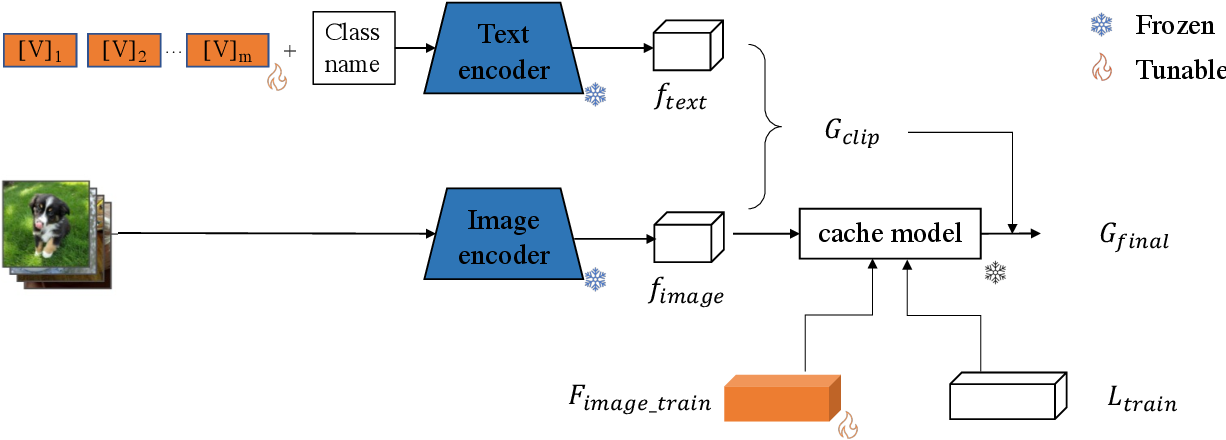

My research primarily focuses on multi-modal large language models (LLMs), including but not limited to vision-language and audio-language models. Based on the results of our study, we conclude that the proposed prompt-based adaptation method is an effective approach for efficiently adapting large vision-and-language models to downstream tasks.

In this paper, we propose a unified multi-modal prompt with adapter for vision-language models (UMPA), based on CLIP, for parameter-efficient fine-tuning (PEFT). A learnable prompt design improves the model's adaptation ability, while the adapter enables lightweight fine-tuning. We propose a unified framework for adapting CLIP to multi-modal and multi-task settings, supporting seamless task integration with minimal overhead. Our approach surpasses conventional fine-tuning and prompt-based methods in both accuracy and training efficiency.
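To make "lightweight" concrete, the arithmetic below counts trainable parameters for a learnable prompt plus a small adapter. It is a sketch under assumptions that are not in the source: the adapter is taken to be a two-layer bottleneck (a down-projection and an up-projection, each with a bias), and the prompt length, embedding width, bottleneck width, and frozen-backbone size are hypothetical values chosen only for illustration.

```python
def prompt_param_count(n_ctx, dim):
    """Learnable prompt: n_ctx context vectors of width dim."""
    return n_ctx * dim


def bottleneck_adapter_param_count(dim, bottleneck):
    """Assumed adapter shape: dim -> bottleneck -> dim, with biases."""
    down = dim * bottleneck + bottleneck  # weights + bias
    up = bottleneck * dim + dim           # weights + bias
    return down + up


# Hypothetical sizes, not taken from the source.
N_CTX, DIM, BOTTLENECK = 16, 512, 128
FROZEN_BACKBONE_PARAMS = 150_000_000

trainable = (
    prompt_param_count(N_CTX, DIM)
    + bottleneck_adapter_param_count(DIM, BOTTLENECK)
)
fraction = trainable / FROZEN_BACKBONE_PARAMS

print(f"trainable parameters: {trainable}")
print(f"share of the frozen backbone: {fraction:.4%}")
```

Under these assumed sizes, the trainable part stays well below one percent of the frozen backbone, which is the sense in which prompt-plus-adapter tuning is parameter-efficient.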

Prompt tuning provides an efficient mechanism for adapting large vision-language models to downstream tasks: part of the input language prompt is treated as learnable parameters while the rest of the model stays frozen.
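A minimal, dependency-free sketch of this mechanism is given below. Everything in it is a toy assumption rather than any paper's implementation: the "text encoder" is a fixed mean-pool-and-normalise function standing in for a frozen model, the image features and class-name embeddings are hand-written two-dimensional vectors, and gradients are estimated numerically. The point it illustrates is that only the context vectors change during training.

```python
import math


def encode_text(tokens):
    """Frozen toy text encoder: mean-pool token vectors, then L2-normalise."""
    dim = len(tokens[0])
    pooled = [sum(tok[i] for tok in tokens) / len(tokens) for i in range(dim)]
    norm = math.sqrt(sum(x * x for x in pooled)) or 1.0
    return [x / norm for x in pooled]


def loss_fn(context, class_tokens, data, scale=10.0):
    """Mean cross-entropy of image-text similarity logits."""
    # Prompt for each class = learnable context vectors + frozen class token.
    text_feats = [encode_text(context + [cls]) for cls in class_tokens]
    total = 0.0
    for image_feat, label in data:
        logits = [scale * sum(a * b for a, b in zip(t, image_feat))
                  for t in text_feats]
        top = max(logits)
        log_z = top + math.log(sum(math.exp(l - top) for l in logits))
        total += log_z - logits[label]
    return total / len(data)


def numerical_grad(context, class_tokens, data, eps=1e-4):
    """Central-difference gradient with respect to the context vectors only."""
    grads = []
    for i, row in enumerate(context):
        grad_row = []
        for j in range(len(row)):
            plus = [r[:] for r in context]
            minus = [r[:] for r in context]
            plus[i][j] += eps
            minus[i][j] -= eps
            diff = (loss_fn(plus, class_tokens, data)
                    - loss_fn(minus, class_tokens, data))
            grad_row.append(diff / (2 * eps))
        grads.append(grad_row)
    return grads


def tune_prompt(context, class_tokens, data, steps=50, lr=0.5):
    """Gradient descent on the context; a step is kept only if it lowers the loss."""
    context = [row[:] for row in context]  # leave the caller's list untouched
    best = loss_fn(context, class_tokens, data)
    for _ in range(steps):
        grads = numerical_grad(context, class_tokens, data)
        candidate = [[w - lr * g for w, g in zip(row, grad_row)]
                     for row, grad_row in zip(context, grads)]
        candidate_loss = loss_fn(candidate, class_tokens, data)
        if candidate_loss < best:
            context, best = candidate, candidate_loss
        else:
            lr *= 0.5  # step was too large: shrink it and try again
    return context


# Toy data: two classes, one image feature each (all values hypothetical).
class_tokens = [[1.0, 0.0], [0.0, 1.0]]        # frozen class-name embeddings
data = [([1.0, 0.0], 0), ([0.0, 1.0], 1)]      # (image feature, label)
init_context = [[0.5, 0.5], [0.5, 0.5]]        # learnable prompt vectors

tuned_context = tune_prompt(init_context, class_tokens, data)
loss_before = loss_fn(init_context, class_tokens, data)
loss_after = loss_fn(tuned_context, class_tokens, data)
print(f"loss before: {loss_before:.5f}, after: {loss_after:.5f}")
```

In a real setting the numerical gradient would be replaced by automatic differentiation through the frozen encoders, but the division of roles is the same: the encoder and class embeddings are read-only, and the optimiser touches only the prompt vectors.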

