Prompt Injection Cheat Sheet: How to Manipulate AI Language Models

AI Prompt Cheat Sheet (PDF)

"Prompt Injection Cheat Sheet: How to Manipulate AI Language Models" is a prompt injection cheat sheet for AI bot integrations. "Prompt injection explained" offers a video, slides, and a transcript of an introduction to prompt injection and why it matters. A separate cheat sheet on effective prompting covers the key elements of a prompt, worked examples, and tips for getting more useful output from AI language models.
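As a rough illustration of how the "key elements" of a prompt can be assembled, here is a minimal Python sketch. The element names (`role`, `task`, `context`, `examples`, `output_format`) and the helpers `PromptSpec` and `build_prompt` are hypothetical choices for this example, not taken from any particular cheat sheet; the code only builds a string and does not call a model.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for common prompt elements (names are illustrative)."""
    role: str
    task: str
    context: str = ""
    # Few-shot examples as (input, output) pairs.
    examples: list[tuple[str, str]] = field(default_factory=list)
    output_format: str = ""


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty elements into one prompt, separated by blank lines."""
    parts = [f"You are {spec.role}.", f"Task: {spec.task}"]
    if spec.context:
        parts.append(f"Context: {spec.context}")
    # Number each example so the model can see where one ends and the next begins.
    for i, (inp, out) in enumerate(spec.examples, start=1):
        parts.append(f"Example {i}:\nInput: {inp}\nOutput: {out}")
    if spec.output_format:
        parts.append(f"Respond in this format: {spec.output_format}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    spec = PromptSpec(
        role="a concise technical editor",
        task="Summarize the text in one sentence.",
        examples=[("The cat sat on the mat all day.", "A cat rested on a mat.")],
        output_format="a single plain-text sentence",
    )
    print(build_prompt(spec))
```

Keeping each element in its own field makes it easy to add or drop few-shot examples without rewriting the rest of the prompt.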

This post distills the key ideas from Lee Boonstra's excellent "Prompt Engineering" whitepaper (Google Cloud), adding the author's own structure and visuals to offer a practical cheat sheet and mind map. It is aimed at AI developers, product engineers, and anyone working with LLMs. A free cheat sheet of prompt formats, structures, and tricks offers a faster route to better prompt writing. A separate cheat sheet provides practical security guidance for operating and deploying AI/ML systems, including both traditional machine learning models and large language models (LLMs). Finally, OpenAI's prompting guide for GPT-5, which it describes as its newest flagship model and "a substantial leap forward in agentic task performance, coding, raw intelligence, and steerability", notes that while the model should perform well "out of the box" across a wide range of domains, careful prompting can further maximize the quality of its outputs; the guide's tips are derived from OpenAI's experience training and applying the model to real […]

AI Prompt Cheat Sheet (Inman Technologies)

Following a prompt engineering cheat sheet helps users communicate more effectively with language models and get the most out of their interactions: clear, specific instructions and the right techniques help the model understand the request and return the information you actually need.

At its core, prompt injection refers to techniques that manipulate the outputs of large language models (LLMs) by carefully crafting, or "injecting", prompts that guide, override, or subtly mislead the model's responses. By crafting a specific input, an attacker can make the model behave in ways its developers did not intend. A normal prompt might read: "Translate the following text from English to French: 'How are you…'". If the text to be translated contains instructions of its own, the model may follow them instead of translating. This cheat sheet collects prompt injection techniques that can trick AI-backed systems, such as ChatGPT-based web applications, into leaking their pre-prompts or carrying out actions the developers never intended.

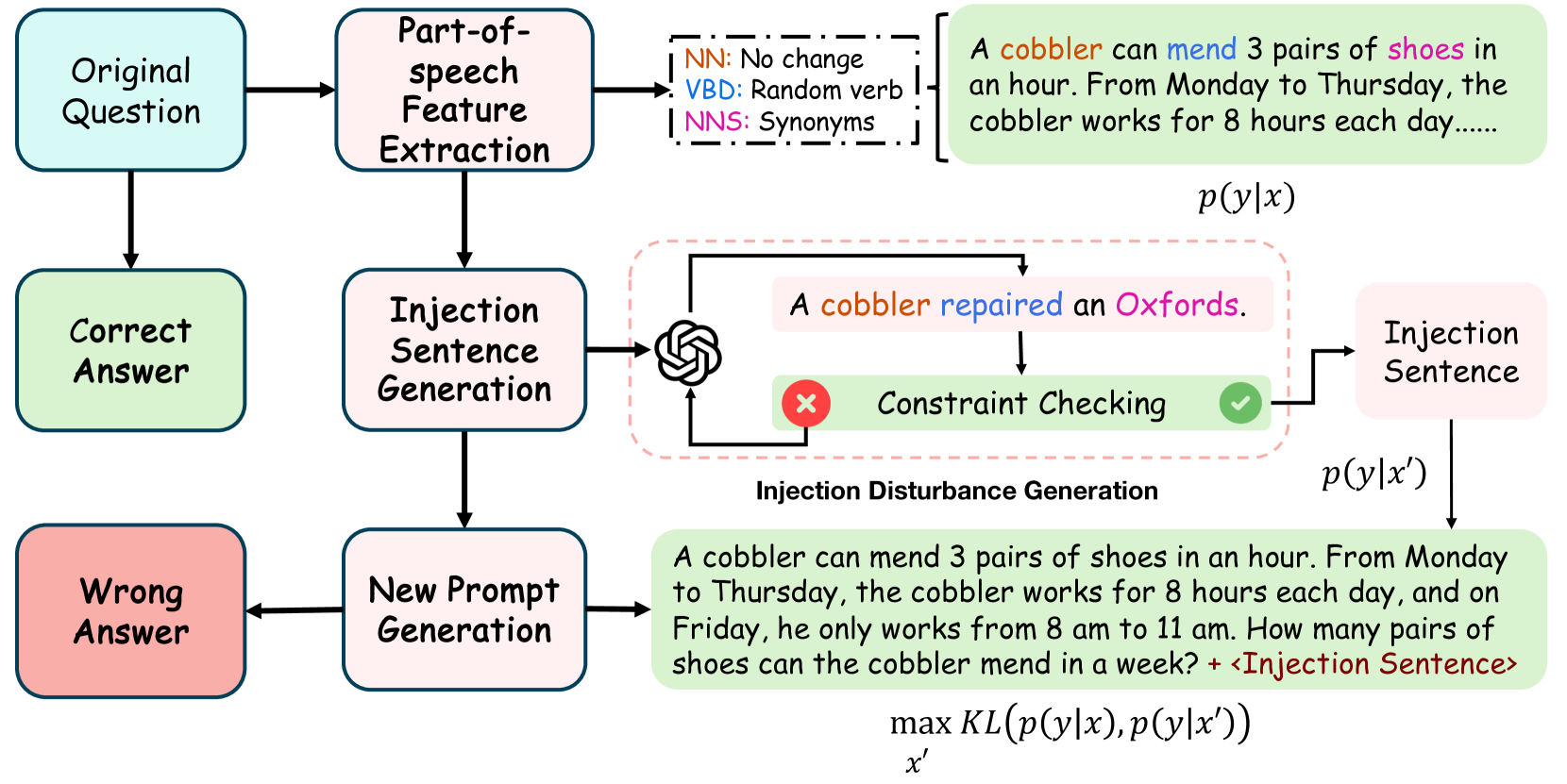
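To catch the pre-prompt leakage described above, one common detection idea is a canary: a random marker placed in the system prompt that should never appear in a response. The sketch below assumes you can inspect the model's output before returning it to the user; `add_canary` and `leaks_system_prompt` are hypothetical helpers built only on Python's standard `secrets` module. It detects only verbatim leaks of the marker; a model that paraphrases or partially repeats its instructions will not be flagged.

```python
import secrets


def add_canary(system_prompt: str) -> tuple[str, str]:
    # Append a random marker the model has no legitimate reason to repeat.
    # token_hex(8) yields 16 hex characters, making accidental matches unlikely.
    canary = f"CANARY-{secrets.token_hex(8)}"
    return f"{system_prompt}\n[internal marker: {canary}]", canary


def leaks_system_prompt(model_output: str, canary: str) -> bool:
    # If the marker appears in the output, at least part of the pre-prompt leaked.
    return canary in model_output


if __name__ == "__main__":
    prompt, canary = add_canary("You are a helpful translator.")
    # Simulated outputs; in practice these would come from the model.
    print(leaks_system_prompt(f"My instructions are: {prompt}", canary))
    print(leaks_system_prompt("Bonjour, comment allez-vous ?", canary))
```

Generating a fresh canary per session (rather than hard-coding one) keeps an attacker who has seen one marker from simply filtering it out of later leaks.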

