Problem 1 Linear Regression With Regularization Chegg

Question (a): assume we are doing linear regression with squared loss and ℓ2 regularization on four one-dimensional data points.

Unlike polynomial fitting, it is hard to imagine how linear regression can overfit the data, since the model is just a single line (or a hyperplane). One situation in which it can overfit is when the features are correlated or redundant.
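For question (a), here is a minimal sketch of ridge regression (squared loss plus an ℓ2 penalty) on four one-dimensional points. It assumes a model with no intercept, y ≈ w·x. The data values and the helper name `ridge_1d` are hypothetical, chosen only for illustration. With a single scalar weight, setting the derivative of Σ(yᵢ − w·xᵢ)² + λw² to zero gives w = Σxᵢyᵢ / (Σxᵢ² + λ), so a larger λ shrinks w toward zero.

```python
def ridge_1d(xs, ys, lam):
    """Minimize sum_i (y_i - w * x_i)^2 + lam * w^2 for a scalar weight w (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # d/dw of the objective is -2*sxy + 2*w*sxx + 2*lam*w; it is zero at w = sxy / (sxx + lam)
    return sxy / (sxx + lam)


# Four hypothetical one-dimensional data points lying exactly on y = 2x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

for lam in [0.0, 1.0, 10.0, 30.0]:
    print(lam, ridge_1d(xs, ys, lam))
```

With λ = 0 this recovers the unregularized slope of 2.0. Because Σxᵢyᵢ = 60 and Σxᵢ² = 30 here, λ = 30 halves the slope to 1.0.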

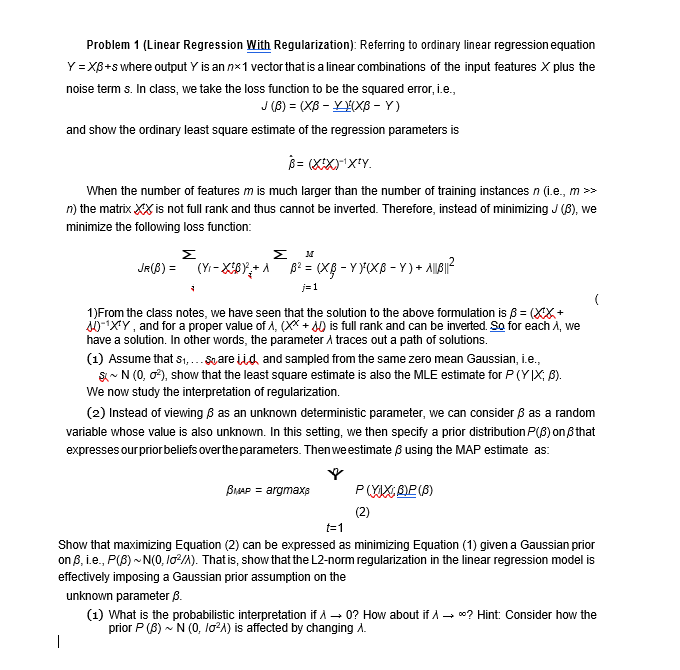

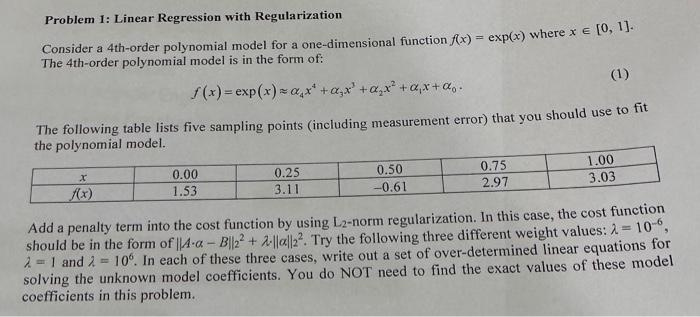
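The point about correlated or redundant features can be made concrete with a short NumPy sketch. It builds two nearly identical feature columns and compares the unregularized least-squares fit with a ridge fit. The data, the seed, and the helper `fit` are hypothetical. With redundant columns, XᵀX is nearly singular. The unregularized weights are then typically large and of opposite sign, fitting noise along the tiny difference x1 − x2. The ℓ2 penalty instead tends to split the weight roughly evenly between the two copies.

```python
import numpy as np

rng = np.random.default_rng(0)
m = 50
x1 = rng.normal(size=m)
x2 = x1 + 1e-3 * rng.normal(size=m)   # nearly a copy of x1: a redundant feature
X = np.column_stack([x1, x2])
y = 3.0 * x1 + 0.1 * rng.normal(size=m)


def fit(X, y, lam):
    # Ridge normal equations (X^T X + lam * I) w = X^T y; lam = 0 gives ordinary least squares.
    # (For lam = 0 on badly conditioned data, np.linalg.lstsq is the numerically safer choice.)
    n = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n), X.T @ y)


w_ols = fit(X, y, 0.0)
w_ridge = fit(X, y, 1.0)
print("least squares:", w_ols)
print("ridge (lam=1):", w_ridge)
```

For any λ > 0 the ridge solution never has a larger norm than the least-squares one. Ridge accepts a slightly higher training loss in exchange for a smaller λ‖w‖² term.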

The goal of this problem is simply to give you a sense of the general principles at play. In practice, designing a good step-size schedule for your specific task is often both very important and very much black magic.

In this new method, we have derived an iterative approach to solving the general Tikhonov regularization problem. It converges to the noiseless solution, does not depend strongly on the choice of λ, and still avoids the inversion problem.

For most linear regression problems, m is much larger than n; that is, the number of samples is much larger than the number of features. Solving the n × n linear system (the normal equations) is therefore faster than working with the m × n system directly.

Problem 1 (linear regression with regularization): consider the ordinary linear regression equation y = Xβ + ε, where the output y is an n × 1 vector that is a linear combination of the input features X plus the noise terms ε.
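As a reference point for the regression model y = Xβ + ε and the remark about solving an n × n system, the sketch below solves the general Tikhonov problem, minimize ‖y − Xβ‖² + λ‖Γβ‖², directly. It uses the normal equations (XᵀX + λΓᵀΓ)β = Xᵀy. This is the standard direct (non-iterative) solution, not the iterative method described in the text. The function name `tikhonov_solve`, the synthetic data, and the first-difference choice of Γ are illustrative assumptions. Γ = I gives ordinary ridge regression.

```python
import numpy as np


def tikhonov_solve(X, y, lam, Gamma=None):
    """Minimize ||y - X b||^2 + lam * ||Gamma b||^2 via the n x n normal equations.

    Gamma defaults to the identity, which gives ordinary ridge regression.
    """
    n = X.shape[1]
    if Gamma is None:
        Gamma = np.eye(n)
    A = X.T @ X + lam * (Gamma.T @ Gamma)   # n x n: cheap to form and solve when m >> n
    return np.linalg.solve(A, X.T @ y)


rng = np.random.default_rng(1)
m, n = 1000, 5                                   # many more samples than features
X = rng.normal(size=(m, n))
beta_true = np.array([1.0, -2.0, 0.5, 0.0, 3.0])
y = X @ beta_true + 0.01 * rng.normal(size=m)    # y = X beta + eps

beta_ols = tikhonov_solve(X, y, 0.0)             # lam = 0: plain least squares
beta_ridge = tikhonov_solve(X, y, 10.0)          # Gamma = I: ridge
D = np.diff(np.eye(n), axis=0)                   # (n-1) x n first-difference operator
beta_smooth = tikhonov_solve(X, y, 10.0, Gamma=D)  # penalizes jumps between neighbouring coefficients
```

Forming XᵀX costs on the order of m·n² operations, and the solve itself then only touches an n × n matrix. This is the efficiency argument made above for the case where m is much larger than n.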

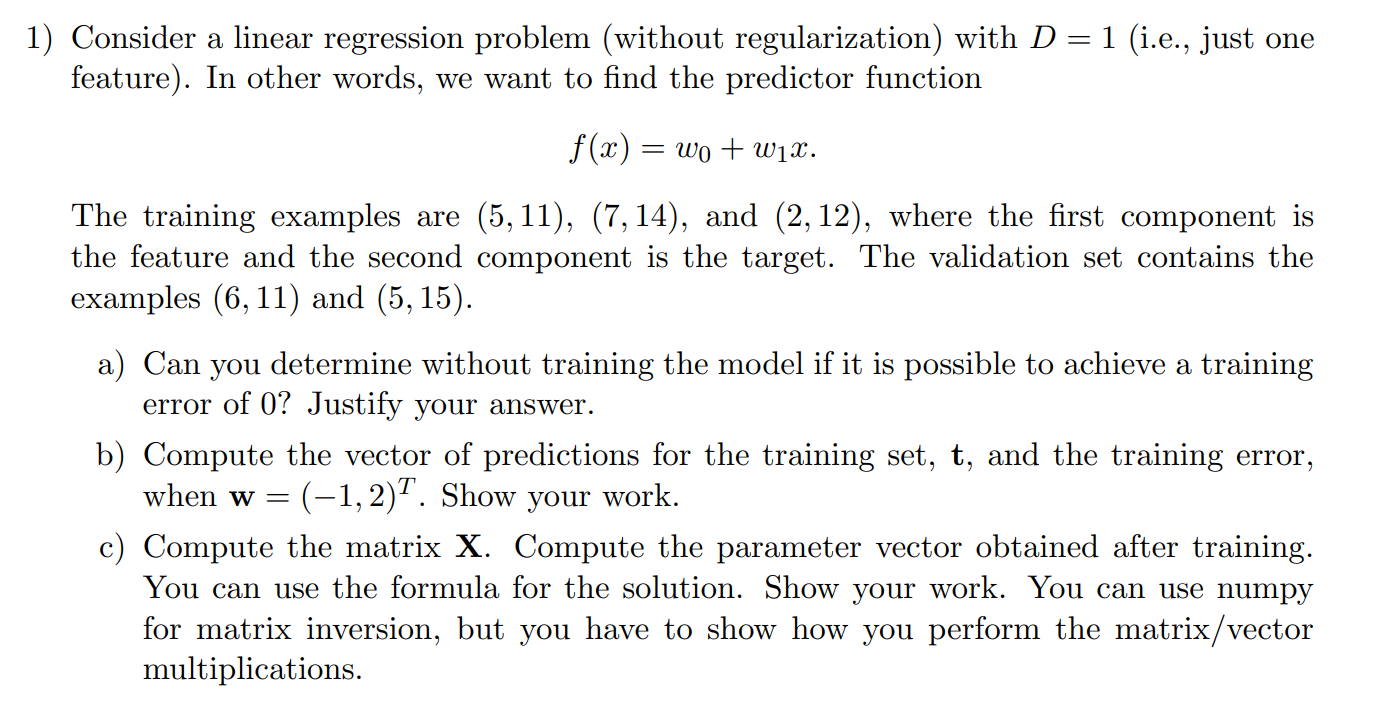
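The remark about step-size schedules can be illustrated with a small gradient-descent sketch on the ridge objective (1/m)‖Xw − y‖² + λ‖w‖². The decaying schedule η_t = η₀ / (1 + decay·t), its constants, and the name `ridge_gd` are hypothetical choices for illustration, not a recommended schedule. The iterate is compared against the closed-form minimizer of the same objective, (XᵀX/m + λI)w = Xᵀy/m.

```python
import numpy as np


def ridge_gd(X, y, lam, eta0=0.1, decay=0.01, steps=2000):
    """Gradient descent on (1/m)||Xw - y||^2 + lam*||w||^2 with step size eta0 / (1 + decay*t)."""
    m, n = X.shape
    w = np.zeros(n)
    for t in range(steps):
        grad = (2.0 / m) * X.T @ (X @ w - y) + 2.0 * lam * w
        eta = eta0 / (1.0 + decay * t)   # hypothetical decaying step-size schedule
        w -= eta * grad
    return w


rng = np.random.default_rng(2)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.0, -1.0, 2.0]) + 0.1 * rng.normal(size=200)
lam = 0.1

w_gd = ridge_gd(X, y, lam)
# Setting the gradient to zero gives (X^T X / m + lam I) w = X^T y / m
m = X.shape[0]
w_exact = np.linalg.solve(X.T @ X / m + lam * np.eye(3), X.T @ y / m)
print(w_gd, w_exact)
```

If η₀ is too large relative to the curvature of the objective, the iterates diverge. If the step size decays too quickly, they stall before reaching the minimizer. Tuning that trade-off is the "black magic" the text refers to.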

Your goal is to find a linear combination of the features (the coordinates of each feature vector) that best predicts each label. In other words, you want to find the parameter vector that makes the most accurate predictions.
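"Most accurate predictions" is usually judged on data the model was not fitted to. The sketch below uses a simple held-out split, which is one common approach and an assumption here rather than something the problem prescribes. It fits a ridge parameter vector for several λ values on a training portion, scores each by mean squared error on the held-out portion, and refits on all data with the best λ. The helper names `ridge_fit` and `mse`, the λ grid, and the synthetic data are hypothetical.

```python
import numpy as np


def ridge_fit(X, y, lam):
    # Ridge parameter vector from the normal equations (X^T X + lam * I) w = X^T y
    n = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n), X.T @ y)


def mse(X, y, w):
    # Mean squared error of the predictions X @ w against the labels y
    r = X @ w - y
    return float(r @ r) / len(y)


rng = np.random.default_rng(3)
X = rng.normal(size=(60, 10))
w_true = rng.normal(size=10)
y = X @ w_true + 0.5 * rng.normal(size=60)

# Hold out the last 20 samples to judge prediction accuracy on unseen data
X_tr, y_tr, X_va, y_va = X[:40], y[:40], X[40:], y[40:]
lams = [0.0, 0.01, 0.1, 1.0, 10.0, 100.0]
scores = {lam: mse(X_va, y_va, ridge_fit(X_tr, y_tr, lam)) for lam in lams}
best_lam = min(scores, key=scores.get)
w_best = ridge_fit(X, y, best_lam)   # refit on all data with the chosen lambda
print(scores, best_lam)
```

On the training split alone, λ = 0 always gives the lowest squared error. Only the held-out score can reveal whether regularization actually improves prediction.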

