Problem 1: Forward and Backward Propagation (Chegg)

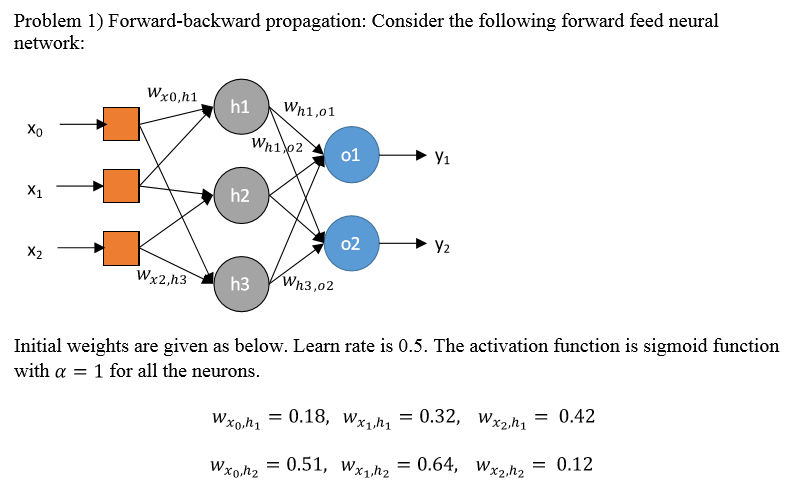

Problem 1) Forward and backward propagation: consider the following feed-forward neural network:

[Figure: feed-forward network with inputs $x_0, x_1, x_2$; hidden neurons $h_1, h_2, h_3$; output neurons $o_1, o_2$ (labeled $y_1, y_2$). Labeled weights include $w_{x_0,h_1}$, $w_{h_1,o_1}$, $w_{h_1,o_2}$, $w_{x_2,h_3}$ and $w_{h_3,o_2}$.]

The initial weights are given below. The learning rate is 0.5, and the activation function is the sigmoid function $\varphi(v) = 1/(1 + e^{-av})$ with $a = 1$ for all neurons.
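Every neuron in this problem uses the same sigmoid, so it helps to have the activation and its derivative at hand. The following is a minimal Python sketch, assuming the logistic form with slope parameter $a$. The function names and the single-neuron inputs and weights are hypothetical placeholders, not values from the problem.

```python
import math

def sigmoid(v, a=1.0):
    """Logistic activation with slope parameter a: 1 / (1 + exp(-a * v))."""
    return 1.0 / (1.0 + math.exp(-a * v))

def sigmoid_derivative(v, a=1.0):
    """Derivative of sigmoid(v, a) with respect to v, written via the output y: a * y * (1 - y)."""
    y = sigmoid(v, a)
    return a * y * (1.0 - y)

# One neuron with a = 1: the induced local field v is the weighted input sum.
# These inputs and weights are placeholders, not values from the problem.
inputs = [1.0, 0.5]
weights = [0.2, -0.4]
v = sum(w * x for w, x in zip(weights, inputs))
print(sigmoid(v), sigmoid_derivative(v))  # prints 0.5 0.25
```

Writing the derivative as $a\,y(1-y)$ lets the backward pass reuse the outputs already computed in the forward pass.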

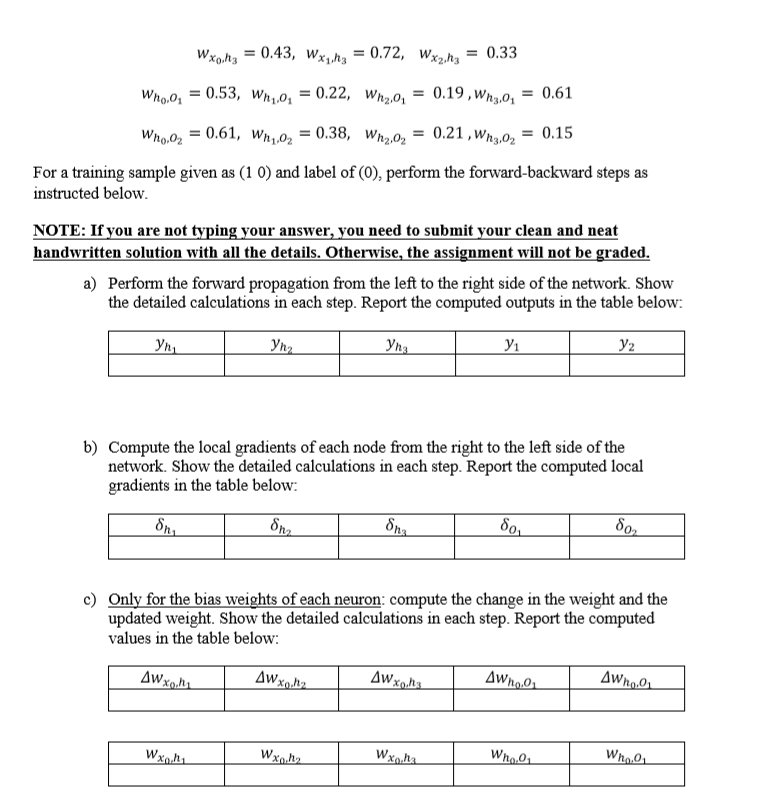

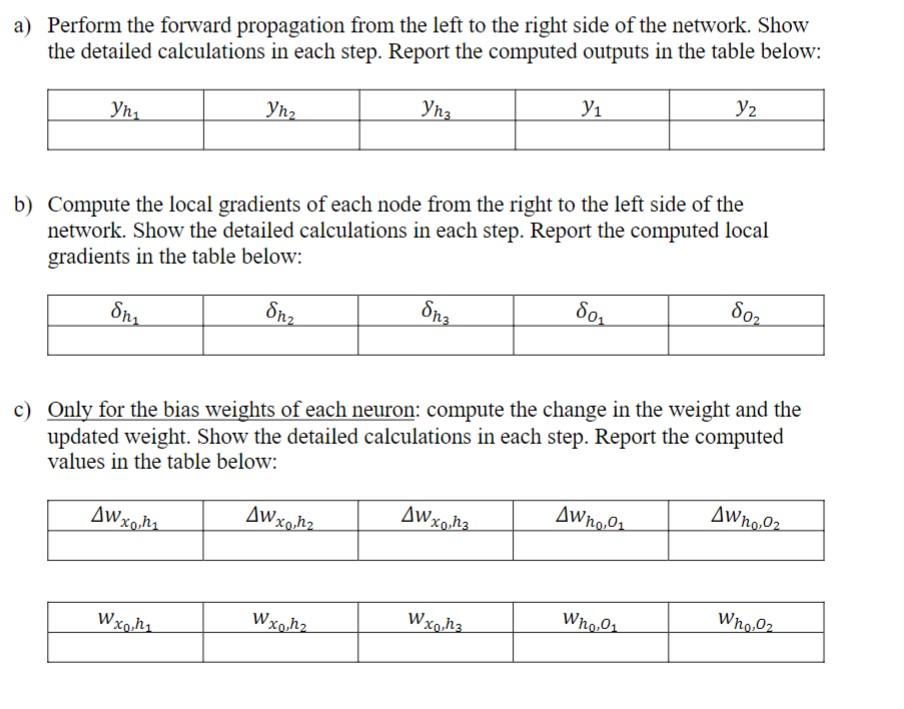

Forward and backward propagation, numerical example (machine learning). The standard backpropagation procedure for a single training pattern is:

1. Apply an input vector $x_n$ to the network and forward propagate it through the network using $a_j = \sum_i w_{ji} z_i$ and $z_j = h(a_j)$ to find the activations of all hidden and output units.
2. Evaluate $\delta_k$ for all output units using $\delta_k = y_k - t_k$. This form holds when the output activation is matched to the error function, e.g. linear outputs with a sum-of-squares error.
3. Backpropagate the $\delta$'s using $\delta_j = h'(a_j) \sum_k w_{kj} \delta_k$ to obtain $\delta_j$ for each hidden unit.
4. Use $\partial E_n / \partial w_{ji} = \delta_j z_i$ to evaluate the required derivatives.

To solve the forward and backward propagation problem, we break the solution into the three parts requested: forward propagation, computation of the local gradients, and updating of the bias weights.

Q. Consider the multilayer feed-forward neural network given below. Let the learning rate be 0.5. Assume the initial values of the weights and biases given in the table below.
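The three parts named above (forward propagation, local gradients, weight and bias updates) can be sketched for a small sigmoid network. This is a minimal sketch under several assumptions: a fully connected 3-3-2 topology with biases, sigmoid units with $a = 1$ everywhere, squared error $E = \tfrac12 \sum_k (t_k - o_k)^2$, and a learning rate of 0.5. The function name `train_step` and all numbers are hypothetical and are not the problem's table. Because the outputs are sigmoids, the output local gradient picks up the sigmoid derivative, $\delta_k = (t_k - o_k)\,o_k(1 - o_k)$. Here $\delta$ is defined as $-\partial E/\partial v$, so each weight moves by $+\eta\,\delta\,z$.

```python
import math

def sigmoid(v):
    # Logistic activation with slope a = 1.
    return 1.0 / (1.0 + math.exp(-v))

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass. w_hidden[j][i] connects input i to hidden unit j;
    w_out[k][j] connects hidden unit j to output unit k. Returns (h, o)."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(w_hidden, b_hidden)]
    o = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
         for row, b in zip(w_out, b_out)]
    return h, o

def train_step(x, t, w_hidden, b_hidden, w_out, b_out, eta=0.5):
    """One online backpropagation step. Updates the weight and bias lists in place
    and returns the squared error 0.5 * sum((t - o)^2) measured before the update."""
    h, o = forward(x, w_hidden, b_hidden, w_out, b_out)
    # Output local gradients: error (t - o) times the sigmoid derivative o(1 - o).
    delta_out = [(tk - ok) * ok * (1.0 - ok) for tk, ok in zip(t, o)]
    # Hidden local gradients, computed with the output weights BEFORE they change.
    delta_hid = [hj * (1.0 - hj) * sum(dk * w_out[k][j] for k, dk in enumerate(delta_out))
                 for j, hj in enumerate(h)]
    # Delta rule: w += eta * delta * (signal feeding that weight); biases see input 1.
    for k, dk in enumerate(delta_out):
        for j, hj in enumerate(h):
            w_out[k][j] += eta * dk * hj
        b_out[k] += eta * dk
    for j, dj in enumerate(delta_hid):
        for i, xi in enumerate(x):
            w_hidden[j][i] += eta * dj * xi
        b_hidden[j] += eta * dj
    return 0.5 * sum((tk - ok) ** 2 for tk, ok in zip(t, o))

# Hypothetical example values, NOT the problem's initial-weight table.
x, t = [1.0, 0.0, 1.0], [1.0, 0.0]
w_hidden = [[0.1, 0.2, -0.1], [0.3, -0.2, 0.1], [-0.3, 0.1, 0.2]]
b_hidden = [0.0, 0.0, 0.0]
w_out = [[0.2, -0.1, 0.3], [-0.2, 0.1, 0.1]]
b_out = [0.0, 0.0]
for epoch in range(3):
    print(epoch, train_step(x, t, w_hidden, b_hidden, w_out, b_out))
```

Filling these lists with the actual initial weights, biases and targets from the problem's table should reproduce a hand calculation of one update. The hidden deltas are computed before any weight changes so that the backward pass uses the same weights as the forward pass.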

Report the calculated values in the table below.

In the backward pass (backpropagation), the errors between the predicted and actual outputs are computed. The gradients are calculated using the derivative of the sigmoid function, and the weights and biases are updated accordingly.

[5p] Show that any classification problem where each class occupies a distinct region in space, given by a single convex polytope (cf. figure 2a), can be solved exactly by a neural network with 2 hidden layers.
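One constructive argument for the two-hidden-layer claim goes as follows. Each unit in the first hidden layer is a hard-threshold unit testing one face (half-space) of a polytope. Each unit in the second hidden layer ANDs the face units of one polytope, which is exactly membership in that convex region. Each output unit ORs the polytope units of its class, and with one convex polytope per class this reduces to copying a single unit. The following is a minimal sketch, assuming step activations rather than sigmoids and polytopes given as lists of `(w, b)` half-spaces. The name `classify` and the square and triangle regions are hypothetical.

```python
from typing import List, Sequence, Tuple

# A face of a convex polytope as a half-space: (w, b) means w . x + b >= 0.
HalfSpace = Tuple[Sequence[float], float]

def step(v: float) -> int:
    # Hard-threshold (Heaviside) unit: 1 if v >= 0, else 0.
    return 1 if v >= 0 else 0

def classify(x: Sequence[float], regions: List[List[HalfSpace]]) -> List[int]:
    """Return one 0/1 output per class; regions[c] lists the faces of class c's polytope."""
    outputs = []
    for faces in regions:
        # Hidden layer 1: one threshold unit per face, testing one half-space.
        layer1 = [step(sum(wi * xi for wi, xi in zip(w, x)) + b) for w, b in faces]
        # Hidden layer 2: AND unit, fires only when all len(faces) face units fire.
        and_unit = step(sum(layer1) - len(faces))
        # Output unit: OR over the class's AND units (a single one here), threshold 1.
        outputs.append(step(and_unit - 1))
    return outputs

# Hypothetical example: class 0 is the unit square, class 1 a triangle.
square = [((1.0, 0.0), 0.0), ((-1.0, 0.0), 1.0), ((0.0, 1.0), 0.0), ((0.0, -1.0), 1.0)]
triangle = [((1.0, 0.0), -2.0), ((0.0, 1.0), 0.0), ((-1.0, -1.0), 4.0)]
regions = [square, triangle]
print(classify((0.5, 0.5), regions))  # prints [1, 0]
print(classify((2.5, 0.5), regions))  # prints [0, 1]
```

With sigmoid units instead of steps, scaling every weight and bias by a large factor approximates these hard thresholds at points away from the region boundaries.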
