Performance Issue 16817 Microsoft Onnxruntime Github

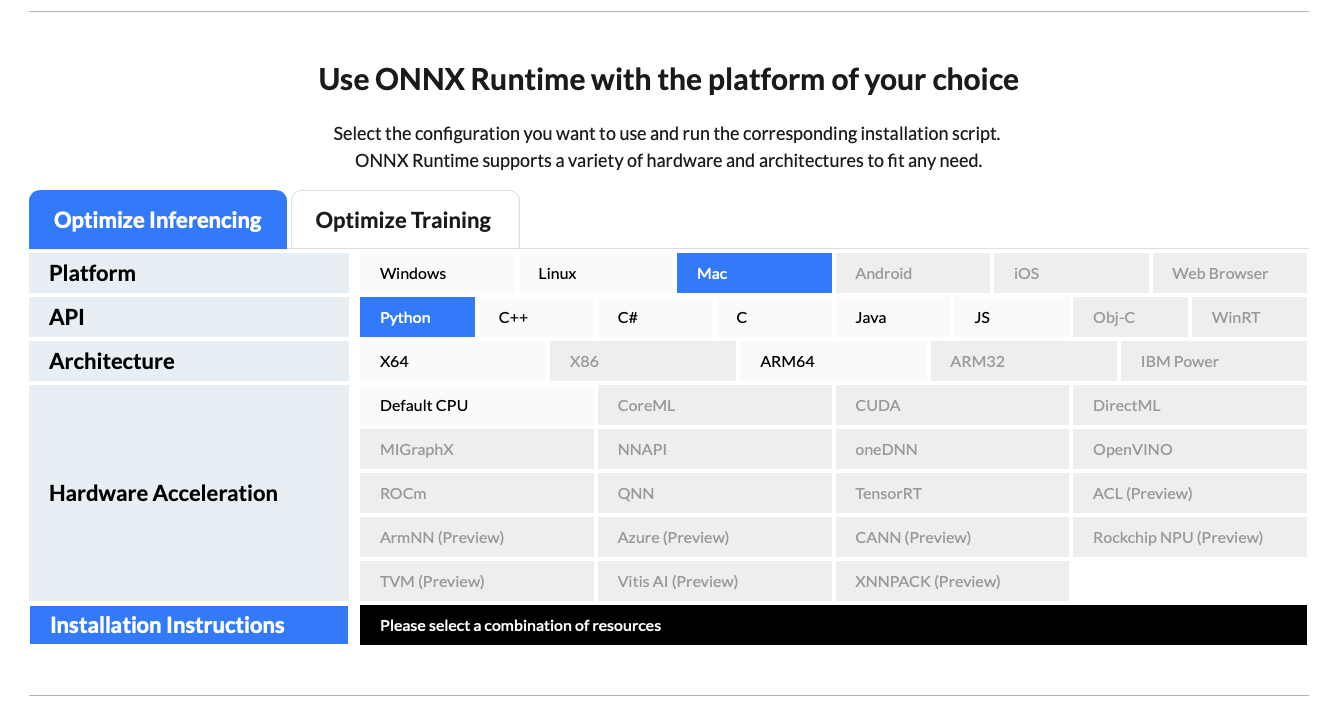

Describe the issue: the onnxruntime-gpu package appears to cover only Windows with CUDA. Why is the Mac M1 not supported? With only the onnxruntime package installed, ONNX model rendering performance is very slow, and I can't understand why. Is the Mac M1 currently not supported? ...

This issue has been automatically marked as stale due to inactivity and will be closed in 30 days if no further activity occurs. If further support is needed, please provide an update and/or more details.
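For the Mac M1 question above, a first check is which execution providers the installed build actually exposes, since a session that silently runs on the CPU provider will look slow. The following is a minimal sketch, not an official recipe: `pick_providers` and the preference order in `PREFERRED` are hypothetical names chosen for illustration, and `model.onnx` is a placeholder path. Only `onnxruntime.get_available_providers()`, `InferenceSession(..., providers=...)` and `session.get_providers()` are real ONNX Runtime calls.

```python
from typing import List, Sequence

# Assumed preference order for illustration only; adjust for your hardware.
PREFERRED = (
    "CUDAExecutionProvider",
    "CoreMLExecutionProvider",
    "DmlExecutionProvider",
    "CPUExecutionProvider",
)


def pick_providers(available: Sequence[str],
                   preferred: Sequence[str] = PREFERRED) -> List[str]:
    """Return the preferred providers that are present in `available`, in order."""
    chosen = [p for p in preferred if p in available]
    # Fall back to the CPU provider if nothing on the preference list is present.
    return chosen or ["CPUExecutionProvider"]


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed")
    else:
        providers = pick_providers(ort.get_available_providers())
        print("Providers to request:", providers)
        # "model.onnx" is a placeholder path, so the lines below stay commented out.
        # session = ort.InferenceSession("model.onnx", providers=providers)
        # print("Providers in use:", session.get_providers())
```

If the printed list contains only `CPUExecutionProvider`, the installed package was built without an accelerator provider for that platform, which would be consistent with the slowness described.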

Describe the issue: ONNX Runtime has very low performance with CoreML for models such as inswapper, among others, and does not use the GPU and ANE efficiently. To reproduce: inswapper. Urgency: no response. Platform: Mac. OS version: 15. ONNX Runtime ...

Describe the issue: Hi, I have a PyTorch model which I exported to ONNX with torch.onnx.export (after creating the torch model object with all parameters in half dtype) to generate an FP16 model. When I compare the performance of the ONNX FP16 mo...

Describe the issue: Hi all, I'm exporting a PyTorch model to ONNX (opset 14) using only float32, static input sizes, and no dynamic reshaping. Despite optimizing the model for GPU, I still see certain ops (Concat, Slice) falling back to CPU during inference in ONNX Runtime with CUDAExecutionProvider. Here's what I've already tried: ...

We have implemented a performance optimization in the MLAS backend of ONNX Runtime that improves CPU utilization in convolution workloads with multiple groups or large batch sizes.
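For the report above about Concat and Slice falling back to the CPU, one way to see where operators actually ran is ONNX Runtime's built-in profiler (`SessionOptions.enable_profiling` and `InferenceSession.end_profiling()`), which writes a JSON trace. The sketch below summarizes such a trace per provider. It assumes that node events carry `cat == "Node"` and an `args` object with `provider` and `op_name` keys; that layout may differ between versions, so treat it as an assumption. The helper name `count_ops_by_provider` and the sample events are hypothetical.

```python
import json
from collections import Counter
from typing import Dict, Iterable, Mapping


def count_ops_by_provider(events: Iterable[Mapping]) -> Dict[str, Counter]:
    """Count profiled node events per execution provider and operator type."""
    counts: Dict[str, Counter] = {}
    for event in events:
        if event.get("cat") != "Node":
            continue  # skip session-level events
        args = event.get("args") or {}
        provider = args.get("provider")
        op_name = args.get("op_name")
        if provider and op_name:
            counts.setdefault(provider, Counter())[op_name] += 1
    return counts


def load_profile(path: str) -> list:
    """Load a profile file written by the profiler (a JSON list of events)."""
    with open(path, "r", encoding="utf-8") as handle:
        return json.load(handle)


# Producing a real trace (not run here; "model.onnx" and `inputs` are placeholders):
#   import onnxruntime as ort
#   options = ort.SessionOptions()
#   options.enable_profiling = True
#   session = ort.InferenceSession("model.onnx", options,
#                                  providers=["CUDAExecutionProvider", "CPUExecutionProvider"])
#   session.run(None, inputs)
#   events = load_profile(session.end_profiling())

# Hand-written sample events in the assumed layout, for illustration only.
sample_events = [
    {"cat": "Session", "name": "model_run", "args": {}},
    {"cat": "Node", "name": "conv1_kernel_time",
     "args": {"provider": "CUDAExecutionProvider", "op_name": "Conv"}},
    {"cat": "Node", "name": "concat1_kernel_time",
     "args": {"provider": "CPUExecutionProvider", "op_name": "Concat"}},
    {"cat": "Node", "name": "slice1_kernel_time",
     "args": {"provider": "CPUExecutionProvider", "op_name": "Slice"}},
]

summary = count_ops_by_provider(sample_events)
for provider, ops in summary.items():
    print(provider, dict(ops))
```

The counts are per profiled event, so an operator that runs in several inference calls is counted once per call. Operators listed under `CPUExecutionProvider` are the ones to investigate.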

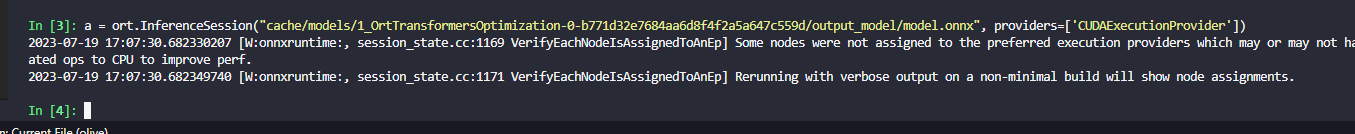

Onnxruntime Capi Onnxruntime Pybind11 State Fail Onnxruntimeerror

Checklist to troubleshoot ONNX Runtime performance tuning issues and frequently asked questions.

ONNX Runtime is a cross-platform inference and training machine learning accelerator.

Describe the issue: I use DirectML to run an Olive-optimized Stable Diffusion 1.5 model, which produces five JSON logs. I also converted them to the Value Change Dump file format as operator ...

