Transformationally Identical And Invariant Convolutional Neural

As part of the research direction concerned with the transformation process within the CNN, this study aims to demonstrate that a transformationally identical and invariant CNN can also be constructed by combining (in this study, averaging) symmetric operations or input vectors.
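The idea of averaging over a transformation family can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the four 90-degree rotations stand in for the transformation family, and `sub_process`, `ti_forward`, and the random weights are hypothetical names and values chosen only for illustration.

```python
import numpy as np

def c4_transforms(x):
    """Return the four 90-degree rotations of a square 2-D array."""
    return [np.rot90(x, k) for k in range(4)]

def sub_process(x, weights):
    """Hypothetical sub-process: a dense layer with tanh on the flattened input."""
    return np.tanh(weights @ x.ravel())

def ti_forward(x, weights):
    """Average the sub-process outputs over all rotations of the input."""
    outputs = [sub_process(t, weights) for t in c4_transforms(x)]
    return np.mean(outputs, axis=0)

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 5))          # toy input image (assumption)
weights = rng.normal(size=(3, 25))   # toy dense weights (assumption)

out_original = ti_forward(x, weights)
out_rotated = ti_forward(np.rot90(x), weights)

# Rotating the input only permutes the set of sub-processes,
# so the averaged output is numerically the same.
ti_holds = np.allclose(out_original, out_rotated)
print("averaged output identical under rotation:", ti_holds)
```

Rotating the input reorders the four transformed copies without changing the set, which is why the average is unchanged even though a single sub-process is not invariant by itself.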

In the CNN model, convolutional kernels are trained to be invariant to these transformations. Fig. 2 presents a side-by-side comparison of the overall structure of these. This study found that both types of transformationally identical CNN systems are mathematically equivalent, provided that either an averaging operation is applied to the corresponding elements of all sub-channels before the activation function, or no non-linear activation function is used. The paper is titled "Transformationally Identical and Invariant Convolutional Neural Networks through Symmetric Element Operators", by Shih-Chung B. Lo and 3 other authors. We propose a novel supervised feature learning approach, which efficiently extracts information from these constraints to produce interpretable, transformation-invariant features.
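The equivalence condition can be checked numerically on a toy case. The sketch below is an illustration under stated assumptions, not the paper's code: a single linear map `W` stands in for a sub-channel, the four 90-degree rotations stand in for the transformation family, and ReLU is an assumed activation.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.normal(size=(4, 4))    # toy input (assumption)
W = rng.normal(size=(2, 16))   # toy linear sub-channel weights (assumption)

views = [np.rot90(x, k) for k in range(4)]

def linear(v):
    """Linear sub-channel applied to the flattened view."""
    return W @ v.ravel()

# No activation: averaging outputs at the end equals averaging inputs at the start.
avg_at_end = np.mean([linear(v) for v in views], axis=0)
avg_at_start = linear(np.mean(views, axis=0))
linear_equal = np.allclose(avg_at_end, avg_at_start)

# With an activation: averaging the sub-channels BEFORE the activation
# still matches the input-averaged system.
relu_pre = np.maximum(avg_at_end, 0.0)
relu_start = np.maximum(avg_at_start, 0.0)
pre_activation_equal = np.allclose(relu_pre, relu_start)

# Averaging AFTER the activation is a different quantity in general;
# for ReLU it is never smaller than the pre-activation average.
relu_end = np.mean([np.maximum(linear(v), 0.0) for v in views], axis=0)

print("linear case equal:", linear_equal)
print("average-before-activation equal:", pre_activation_equal)
```

The linear case works because averaging commutes with a linear map. A non-linear activation does not generally commute with averaging, which is why the position of the averaging step matters once an activation is present.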

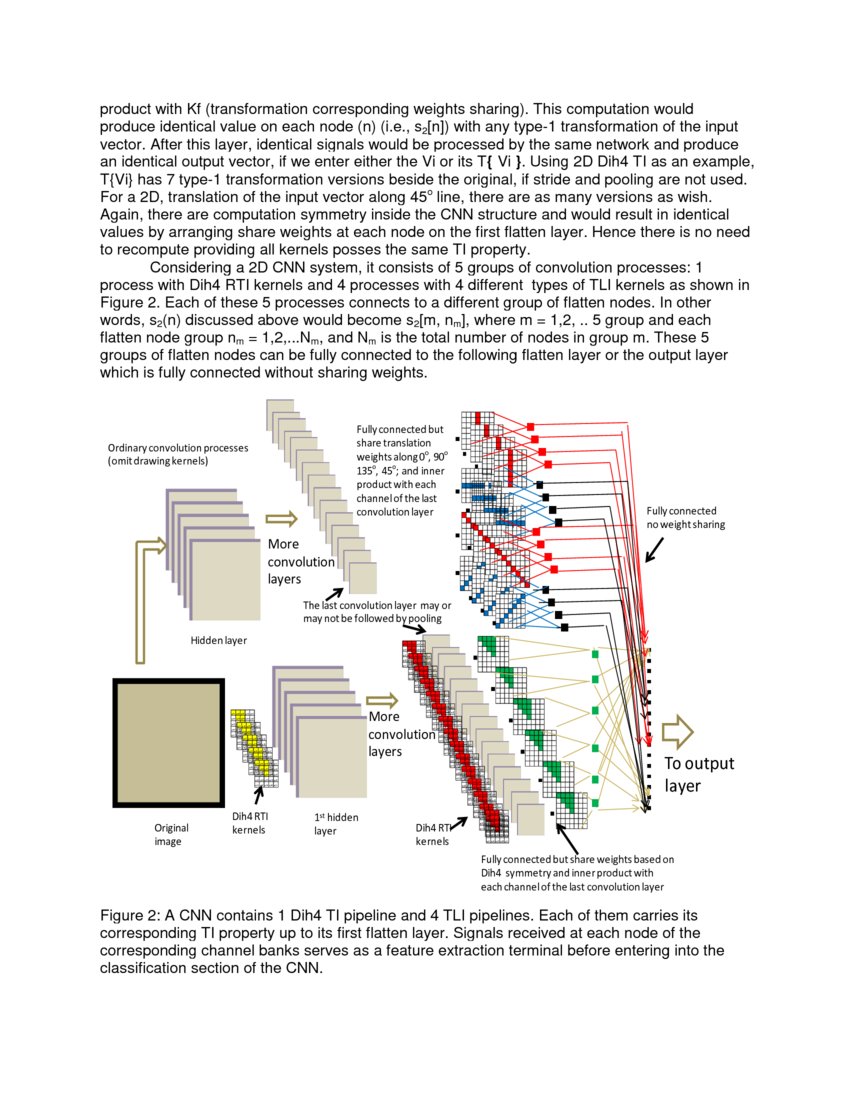

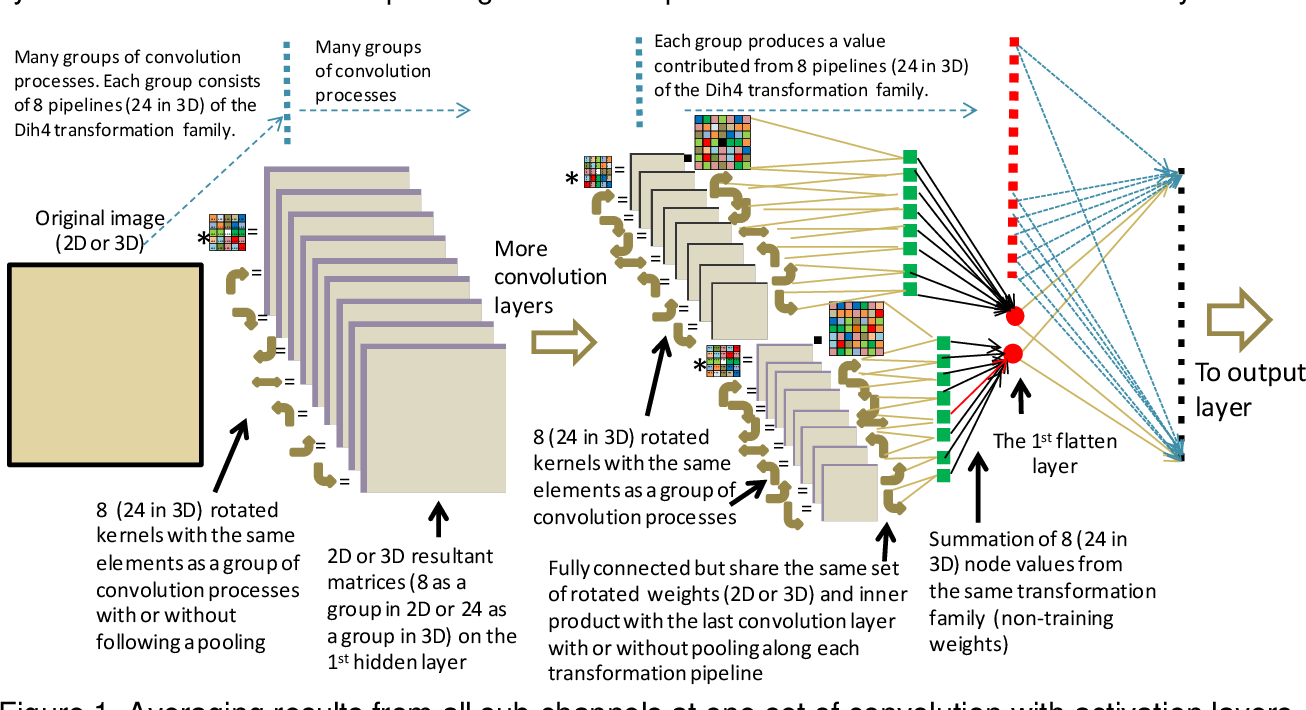

We introduce group equivariant convolutional neural networks (G-CNNs), a natural generalization of convolutional neural networks that reduces sample complexity by exploiting [...]. Specifically, a transformationally identical CNN can be constructed by arranging internally symmetric operations in parallel with the same transformation family, including a flatten layer whose weights are shared among their corresponding transformation elements. In this study, transformationally identical processing that combines the results of all sub-processes with their corresponding transformations, either at the final processing step or at the beginning step, was found to be equivalent through a special algebraic operation property. We call this property quantitative transformational invariance, or transformationally identical (TI). This quantitatively identical property under a predefined transformation, and its applications to CNNs, is the main focus of this paper.
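Weight sharing among corresponding transformation elements can be sketched for a single flatten-layer unit. This is a simplified illustration, not the paper's architecture: `symmetrize_c4` is a hypothetical helper that ties weights across the four 90-degree rotations by averaging, and the weight map is laid out on the same grid as the input.

```python
import numpy as np

def symmetrize_c4(w):
    """Tie weights across the four 90-degree rotations by averaging them."""
    return sum(np.rot90(w, k) for k in range(4)) / 4.0

rng = np.random.default_rng(2)
w = symmetrize_c4(rng.normal(size=(6, 6)))   # shared (symmetric) weights
x = rng.normal(size=(6, 6))                  # toy feature map (assumption)

# Response of one flatten-layer unit to each rotated copy of the input.
responses = [float(np.sum(w * np.rot90(x, k))) for k in range(4)]

# Because w equals each of its own rotations, every response is the same value.
unit_is_ti = np.allclose(responses, responses[0])
print("unit response identical under rotation:", unit_is_ti)
```

Here a single symmetric operator gives the identical response, so no explicit averaging over transformed inputs is needed at run time.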
