Pandas Apply Function To Single & Multiple Column(s) Spark By

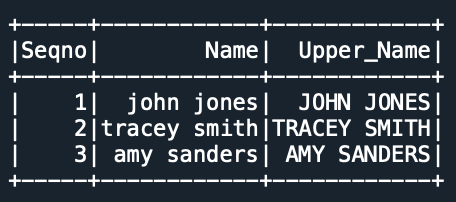

PySpark Apply Function To Column Spark By Examples

The pandas apply() method applies a function along an axis of the DataFrame. The objects passed to the function are Series objects whose index is either the DataFrame's index (axis=0) or the DataFrame's columns (axis=1).

In this section, I will explain how to create a custom PySpark UDF and apply it to a column. A PySpark UDF (user-defined function) is one of the most useful features of Spark SQL and the DataFrame API, and it is used to extend PySpark's built-in capabilities.
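As a rough illustration of the UDF idea described above, here is a minimal sketch. The function name capitalize_words, the helper add_capitalized_column, and the column names "name" and "name_cap" are hypothetical and chosen only for this example. The PySpark imports are deferred into the helper so that the plain Python logic can be tried without a Spark installation; the helper assumes you already have a Spark DataFrame with a string column.

```python
def capitalize_words(text):
    """Plain Python logic: capitalize each space-separated word."""
    if text is None:
        # Spark passes SQL NULL values to a Python UDF as None.
        return None
    return " ".join(word.capitalize() for word in text.split(" "))


def add_capitalized_column(df, source="name", target="name_cap"):
    """Wrap capitalize_words as a PySpark UDF and apply it to one column.

    `df` is assumed to be an existing pyspark.sql.DataFrame; the column
    names are hypothetical defaults for this sketch.
    """
    # Imported here so the function above stays usable without PySpark.
    from pyspark.sql.functions import col, udf
    from pyspark.sql.types import StringType

    capitalize_udf = udf(capitalize_words, StringType())
    return df.withColumn(target, capitalize_udf(col(source)))


# The plain function can be checked on its own before it is used as a UDF.
print(capitalize_words("john doe"))
```

Keeping the logic in an ordinary function and wrapping it separately makes it easier to unit-test, since row-at-a-time Python UDFs are only evaluated when Spark runs an action.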

My guess was that they would perform the same (discounting the Python overhead, of course), because until an action (such as write() or count()) is executed, the Spark engine just keeps building the plan without executing anything.

In this article, we are going to learn how to apply a transformation to multiple columns in a DataFrame using PySpark in Python. PySpark is the API that was introduced to support Spark from Python, and it offers features similar to those of Python's scikit-learn and pandas libraries.

In this article, I have explained how the pandas apply() function provides a powerful tool for returning multiple columns based on custom transformations applied to DataFrame rows or columns.

In this article, I will cover how to apply() a function to the values of a single selected column, multiple columns, or all columns. For example, say we have three columns and would like to apply a function to a single column without touching the other two, returning a DataFrame that still has three columns.
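The three-column scenario above could look something like the following pandas sketch. The column names A, B, and C, the helper add_ten, and the row-wise function sum_and_product are made up for illustration and do not come from the original article.

```python
import pandas as pd

# Hypothetical three-column DataFrame.
df = pd.DataFrame({"A": [1, 2, 3], "B": [10, 20, 30], "C": [100, 200, 300]})


def add_ten(x):
    """Works on a scalar or on a whole Series."""
    return x + 10


# Single column: only A changes, B and C are left untouched.
single = df.copy()
single["A"] = single["A"].apply(add_ten)

# Multiple columns: the function receives each selected column as a Series.
multi = df.copy()
multi[["A", "B"]] = multi[["A", "B"]].apply(add_ten)

# All columns: DataFrame.apply defaults to axis=0 (one call per column).
all_cols = df.apply(add_ten)


def sum_and_product(row):
    """Row-wise function that returns two new values as a Series."""
    return pd.Series({"sum_ab": row["A"] + row["B"],
                      "prod_ab": row["A"] * row["B"]})


# axis=1 passes each row as a Series; returning a Series yields new columns.
extra = df.apply(sum_and_product, axis=1)
result = pd.concat([df, extra], axis=1)
print(result)
```

For simple arithmetic like this, a vectorized expression such as df["A"] + 10 is usually faster than apply(); apply() is mainly worthwhile when the transformation cannot be expressed with built-in column operations.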

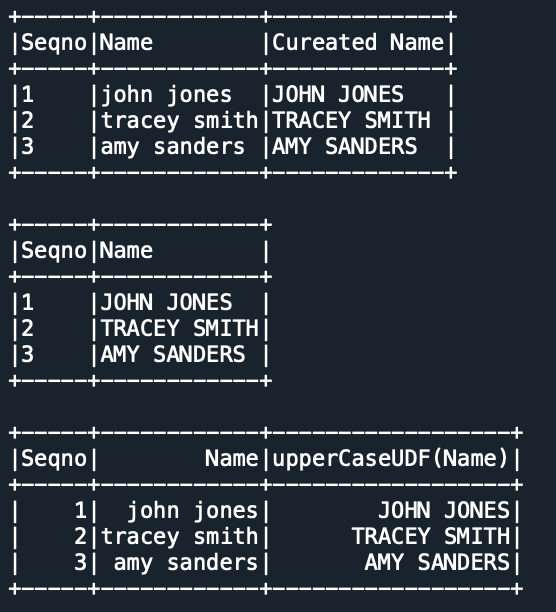

At these times, you'll want to combine the distributed processing power of Spark with the flexibility of pandas by using pandas UDFs, applyInPandas, or mapInPandas. Each of these approaches allows you to operate on standard pandas objects (DataFrames and Series) in a custom Python function.

In this article, we will discuss how to apply a function to each row of a Spark DataFrame. This is a common operation that is required when performing data cleaning, data transformation, or data analysis tasks.

The APIs slice the pandas-on-Spark DataFrame or Series, and then apply the given function with a pandas DataFrame or Series as input and output.

pyspark.pandas allows you to work with pandas-like syntax while running on Spark, which makes the code scalable. The apply function takes a custom function and applies it to either rows or columns.
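To make the pandas UDF approach mentioned above more concrete, here is a minimal sketch. The function fahrenheit_to_celsius, the helper add_celsius_column, and the column names "temp_f" and "temp_c" are hypothetical. The Spark-specific part is kept inside a helper with a deferred import, and it assumes PySpark with PyArrow installed plus an existing Spark DataFrame containing a numeric column.

```python
import pandas as pd


def fahrenheit_to_celsius(temps: pd.Series) -> pd.Series:
    """Vectorized logic on a standard pandas Series."""
    return (temps - 32) * 5.0 / 9.0


def add_celsius_column(sdf, source="temp_f", target="temp_c"):
    """Apply the function above as a Series-to-Series pandas UDF.

    `sdf` is assumed to be an existing pyspark.sql.DataFrame; the column
    names are hypothetical defaults for this sketch.
    """
    # Deferred import: pandas_udf needs PySpark and PyArrow at call time.
    from pyspark.sql.functions import pandas_udf

    # The pd.Series -> pd.Series type hints mark this as a scalar pandas UDF.
    to_celsius = pandas_udf(fahrenheit_to_celsius, returnType="double")
    return sdf.withColumn(target, to_celsius(sdf[source]))


# Because the function takes and returns pandas objects, it can be
# checked in plain pandas before it is handed to Spark.
print(fahrenheit_to_celsius(pd.Series([32.0, 212.0])).tolist())
```

Spark feeds the function batches of rows as pandas Series rather than one value at a time, which is the main reason this style tends to be faster than a row-at-a-time Python UDF.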

