Orchestrating an Application Process with AWS Batch Using AWS CDK

In this blog, we will leverage the capabilities and features of the AWS CDK (with Microsoft .NET, using C#) instead of writing CloudFormation templates by hand. Let's get started! This post provides a file processing implementation using Docker images and Amazon S3, AWS Lambda, Amazon DynamoDB, and AWS Batch. In this scenario, the user uploads a CSV file into an Amazon S3 bucket, and the file is processed by AWS Batch as a job.
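One common way to connect the upload to AWS Batch, assumed here rather than taken from the post, is an S3 event notification that invokes a Lambda function, which then calls the Batch `SubmitJob` API. The minimal Python sketch below shows such a handler. The job queue name, job definition name, and the `INPUT_BUCKET`/`INPUT_KEY` environment variable names are hypothetical placeholders, and the Batch client can be injected so the logic runs without AWS credentials.

```python
"""Hypothetical Lambda handler: submit one AWS Batch job per CSV uploaded to S3.

JOB_QUEUE, JOB_DEFINITION and the environment variable names are placeholders,
not values from the post.
"""
import re
from urllib.parse import unquote_plus

JOB_QUEUE = "csv-processing-queue"     # hypothetical job queue name
JOB_DEFINITION = "csv-processing-job"  # hypothetical job definition name


def build_submit_job_request(bucket: str, key: str) -> dict:
    """Build the keyword arguments for the Batch SubmitJob call."""
    # Batch job names may only contain letters, numbers, hyphens and
    # underscores (up to 128 characters), so replace everything else.
    safe_name = re.sub(r"[^A-Za-z0-9_-]", "-", key)[:100]
    return {
        "jobName": f"process-{safe_name}",
        "jobQueue": JOB_QUEUE,
        "jobDefinition": JOB_DEFINITION,
        # Hand the object location to the container as environment variables.
        "containerOverrides": {
            "environment": [
                {"name": "INPUT_BUCKET", "value": bucket},
                {"name": "INPUT_KEY", "value": key},
            ]
        },
    }


def handler(event: dict, context=None, batch_client=None) -> list:
    """Submit a Batch job for each .csv object in an S3 event notification."""
    if batch_client is None:
        import boto3  # bundled with the Lambda Python runtime
        batch_client = boto3.client("batch")
    job_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded ('+' for spaces).
        key = unquote_plus(record["s3"]["object"]["key"])
        if not key.lower().endswith(".csv"):
            continue  # ignore uploads that are not CSV files
        response = batch_client.submit_job(**build_submit_job_request(bucket, key))
        job_ids.append(response["jobId"])
    return job_ids
```

Keeping the request construction in its own function makes it easy to check the job parameters without calling AWS at all.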

Although newer services such as Amazon ECS might be more appealing, we are going to take a deeper look at what AWS Batch provides, and along the way we will deploy a sample batch example using AWS CDK. For context, consider a daily batch job built with AWS CDK and a CI/CD pipeline on Amazon ECS, where an AWS Fargate task runs the job once a day. Because the job can take more than 15 minutes to finish, AWS Lambda, whose maximum timeout is 15 minutes, is not suitable; the job runs for only around 2 hours a day.

To demonstrate this working, the prototype uses AWS CDK to define the infrastructure, which is then deployed through CloudFormation. In this example, some code does work on a data set and stores the result. AWS Batch is a batch processing service for efficiently running hundreds of thousands of computing jobs on AWS. Batch can dynamically provision Amazon EC2 instances to meet the resource requirements of submitted jobs, and it simplifies the planning, scheduling, and execution of your batch workloads.
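Inside the Batch job, the container runs the code that works on the data set and stores the result, with no 15-minute cap. The Python sketch below is a hypothetical entry point, not the post's implementation. It assumes the S3 object location arrives in the `INPUT_BUCKET` and `INPUT_KEY` environment variables, the destination DynamoDB table name in `TABLE_NAME`, and that the CSV has a header row whose `id` column is used as the partition key.

```python
"""Hypothetical AWS Batch job: read a CSV from S3 and store its rows in DynamoDB.

Assumptions (not from the post): INPUT_BUCKET, INPUT_KEY and TABLE_NAME are set
as environment variables, and the CSV has a header row with an "id" column.
"""
import csv
import io
import os


def rows_to_items(csv_text: str, source_key: str) -> list:
    """Turn CSV rows into DynamoDB items, tagging each with the file it came from."""
    items = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if not row.get("id"):
            continue  # skip rows with no partition key value
        # Drop overflow values that DictReader files under the key None.
        item = {k: v for k, v in row.items() if k is not None}
        item["source_key"] = source_key
        items.append(item)
    return items


def main(s3=None, table=None) -> int:
    """Download the input CSV, write one item per row, return the item count."""
    bucket = os.environ["INPUT_BUCKET"]
    key = os.environ["INPUT_KEY"]
    if s3 is None or table is None:
        import boto3  # must be installed in the job's container image
        s3 = s3 or boto3.client("s3")
        table = table or boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"])
    body = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
    items = rows_to_items(body, key)
    # batch_writer() buffers put_item calls into BatchWriteItem requests.
    with table.batch_writer() as writer:
        for item in items:
            writer.put_item(Item=item)
    return len(items)
```

The container's entry point would call `main()`; passing fake `s3` and `table` objects instead lets the parsing and storage logic be exercised locally.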

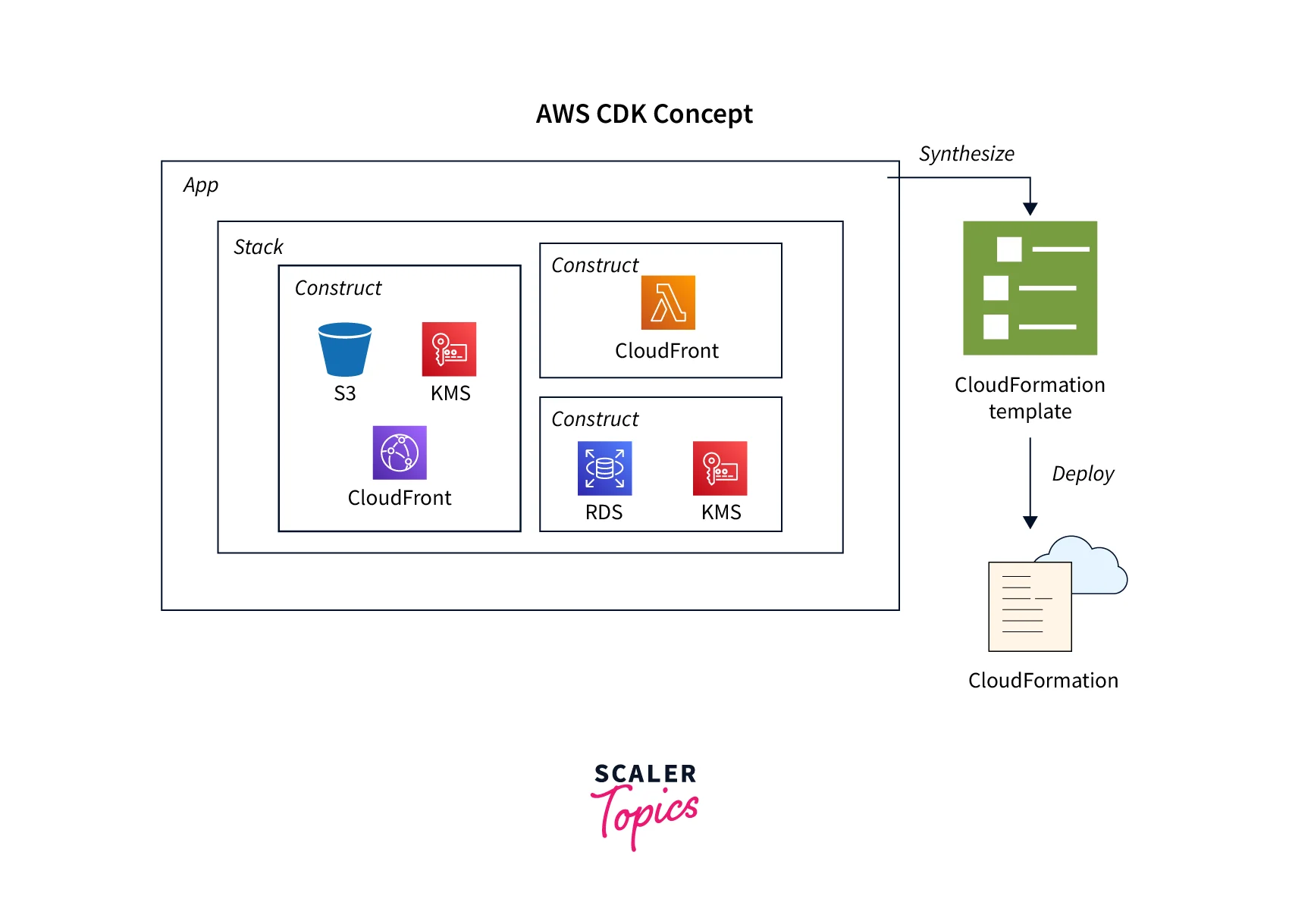

AWS Batch lets us run batch workloads without having to manage the underlying compute resources ourselves. We'll walk through the core concepts, build a simple CDK app, automate its deployment with GitHub Actions, and highlight the ways this process can scale with your projects.
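One core AWS Batch concept is the job lifecycle: a submitted job moves through `SUBMITTED`, `PENDING`, `RUNNABLE`, `STARTING`, and `RUNNING` before ending in `SUCCEEDED` or `FAILED`. A caller such as a scheduled workflow or a post-deployment check can poll the `DescribeJobs` API until one of those terminal states is reached. The Python sketch below assumes a boto3-style client with a `describe_jobs(jobs=[...])` method; the 30-second polling interval and three-hour default timeout are arbitrary choices, picked to sit comfortably above a roughly two-hour daily run.

```python
"""Sketch: poll an AWS Batch job until it reaches a terminal state.

batch_client is any object with describe_jobs(jobs=[...]), e.g.
boto3.client("batch"). Interval and timeout defaults are arbitrary.
"""
import time

# Jobs pass through SUBMITTED, PENDING, RUNNABLE, STARTING and RUNNING
# before finishing in one of these two states.
TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def wait_for_job(batch_client, job_id: str, poll_seconds: float = 30.0,
                 timeout_seconds: float = 3 * 60 * 60, sleep=time.sleep) -> str:
    """Return the job's final status, or raise TimeoutError if it never finishes."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        jobs = batch_client.describe_jobs(jobs=[job_id])["jobs"]
        if not jobs:
            raise ValueError(f"job {job_id} not found")
        status = jobs[0]["status"]
        if status in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {job_id} still {status} after {timeout_seconds}s")
        sleep(poll_seconds)  # injectable so tests do not actually wait
```

Returning the status rather than raising on `FAILED` leaves the caller free to decide whether a failed job should retry, alert, or fail a pipeline step.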
