Optimal Approximation Complexity Of High Dimensional Functions With

We investigate optimal algorithms for optimizing and approximating a general high-dimensional smooth and sparse function from the perspective of information-based complexity. Our algorithms and analyses reveal several interesting characteristics of these tasks. We then show how to leverage low local dimensionality in some contexts to overcome the curse of dimensionality, obtaining approximation rates that are optimal for unknown lower-dimensional subspaces.
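Information-based complexity measures cost in function evaluations, so one way to see why sparsity helps is to count queries. The sketch below assumes "sparse" means that f depends on only a few of its d coordinates, and finds those coordinates by naive finite-difference probing from random base points. The function name `find_active_coordinates` and its parameters (`n_probes`, `h`, `tol`) are hypothetical. This is a simple illustration, not the algorithm analysed in the source, and adaptive schemes in the literature need far fewer queries.

```python
import numpy as np


def find_active_coordinates(f, d, n_probes=20, h=0.1, tol=1e-8, seed=0):
    """Return the sorted list of coordinates along which f visibly changes.

    Hypothetical helper: for each random base point x in [0, 1 - h]^d and each
    coordinate i, compare f(x) with f(x + h * e_i). Coordinate i is declared
    active if any such difference exceeds tol. Uses at most
    n_probes * (d + 1) evaluations of f, i.e. linear rather than
    exponential in d.
    """
    rng = np.random.default_rng(seed)
    active = set()
    for _ in range(n_probes):
        # Keep x + h * e_i inside the unit cube [0, 1]^d.
        x = rng.uniform(0.0, 1.0 - h, size=d)
        fx = f(x)
        for i in range(d):
            if i in active:
                continue  # already detected, no need to query again
            xi = x.copy()
            xi[i] += h
            if abs(f(xi) - fx) > tol:
                active.add(i)
    return sorted(active)
```

For example, `find_active_coordinates(lambda x: np.sin(x[3]) + x[17] ** 2, d=100)` should report coordinates 3 and 17. Once the active set is known, any standard low-dimensional approximation method can be applied to those coordinates alone.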

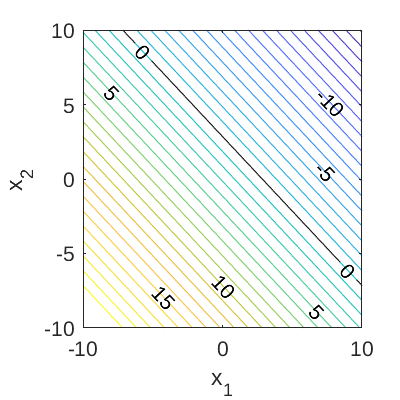

Efficient Approximation Of High Dimensional Functions With Deep

The number of parameters $c_m$ needed to compute an $\epsilon$-approximation of $f(x)$ over one or all canonical subspaces is given by $c_m = c_5(d, d_{\mathrm{eff}}, n)\,\epsilon^{-d_{\mathrm{eff}}/n}$ (see Lemma 2.2 and Theorem 1.5). A related article, "A comparison study of supervised learning techniques for the approximation of high dimensional functions and feedback control", notes that the approximation of high-dimensional functions is a central topic in machine learning and data-based scientific computing. In this article, we develop a framework for showing that neural networks can overcome the curse of dimensionality in different high-dimensional approximation problems. In this section, we provide different examples of approximable catalogs that will be used in Section 6 to show that certain high-dimensional functions are approximable without the curse of dimensionality.
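To make the parameter-count formula concrete, the sketch below evaluates a count of the form $c\,\epsilon^{-\text{dim}/n}$, assuming (as a reconstruction of the garbled original) that the rate is $\epsilon^{-d_{\mathrm{eff}}/n}$ with smoothness $n$. The constant $c_5$ is unknown here, so the hypothetical parameter `c` defaults to 1; only the exponent matters for the comparison. Plugging in the full dimension $d$ instead of $d_{\mathrm{eff}}$ gives the classical curse-of-dimensionality rate for comparison.

```python
def param_count(eps: float, dim: int, smoothness: int, c: float = 1.0) -> float:
    """Hypothetical parameter count c * eps**(-dim / smoothness).

    Assumes the rate eps**(-d_eff / n) from the text; `c` stands in for the
    unknown constant c_5(d, d_eff, n) and defaults to 1.
    """
    if not 0.0 < eps < 1.0:
        raise ValueError("eps must lie in (0, 1)")
    return c * eps ** (-dim / smoothness)


# Accuracy 1e-2, smoothness n = 2, ambient dimension d = 100, d_eff = 4.
full = param_count(0.01, dim=100, smoothness=2)      # roughly 1e100
effective = param_count(0.01, dim=4, smoothness=2)   # roughly 1e4
```

Under these assumptions the exponent, not the constant, decides feasibility: replacing $d = 100$ by $d_{\mathrm{eff}} = 4$ turns an astronomically large count into a modest one.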

Hardness Of Approximation Complexity

Dynamical systems, iterated function systems, fractals, and refinement equations give functions that can be approximated with exponential accuracy even though the functions are not smooth. This study introduces a deep active optimization pipeline that effectively tackles high-dimensional, complex problems with limited data; the approach minimizes sample size and surpasses existing … We illustrate these results with practical neural network approximations of a set of functions defined on high-dimensional data, including real-world data.
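A classic instance of the claim about fractal functions is the Cantor function, which is continuous but not even Lipschitz, yet satisfies a self-similar functional equation from an iterated function system: $C(x) = C(3x)/2$ on $[0, 1/3]$, $C(x) = 1/2$ on $[1/3, 2/3]$, and $C(x) = 1/2 + C(3x - 2)/2$ on $[2/3, 1]$. The sketch below is an illustration chosen for this page, not an example taken from the source: it unrolls that equation `depth` times, and since each level halves the remaining weight, the error is at most $2^{-(\text{depth}+1)}$, i.e. exponential accuracy in the number of levels.

```python
def cantor(x: float, depth: int) -> float:
    """Approximate the Cantor function C on [0, 1] by unrolling its
    self-similar functional equation `depth` times.

    Invariant: C(original x) = offset + scale * C(current x).
    The returned value differs from C(x) by at most 2 ** -(depth + 1).
    """
    offset = 0.0  # value accumulated from right-hand branches
    scale = 1.0   # weight of the still-unresolved sub-problem
    for _ in range(depth):
        if x <= 1.0 / 3.0:
            # C(x) = C(3x) / 2
            x = 3.0 * x
            scale /= 2.0
        elif x < 2.0 / 3.0:
            # Middle third: C is constant 1/2 there, so the answer is exact.
            return offset + scale / 2.0
        else:
            # C(x) = 1/2 + C(3x - 2) / 2
            x = 3.0 * x - 2.0
            offset += scale / 2.0
            scale /= 2.0
    # C(x) lies in [0, 1]; guessing 1/2 errs by at most scale / 2.
    return offset + scale / 2.0
```

For instance, $C(1/4) = 1/3$, and `cantor(0.25, k)` approaches $1/3$ with error shrinking by a factor of two per level, despite the function having no smoothness that a polynomial or spline approximation could exploit.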
