OpenAI's New Process-Supervised Reward Modeling Improves AI Reasoning

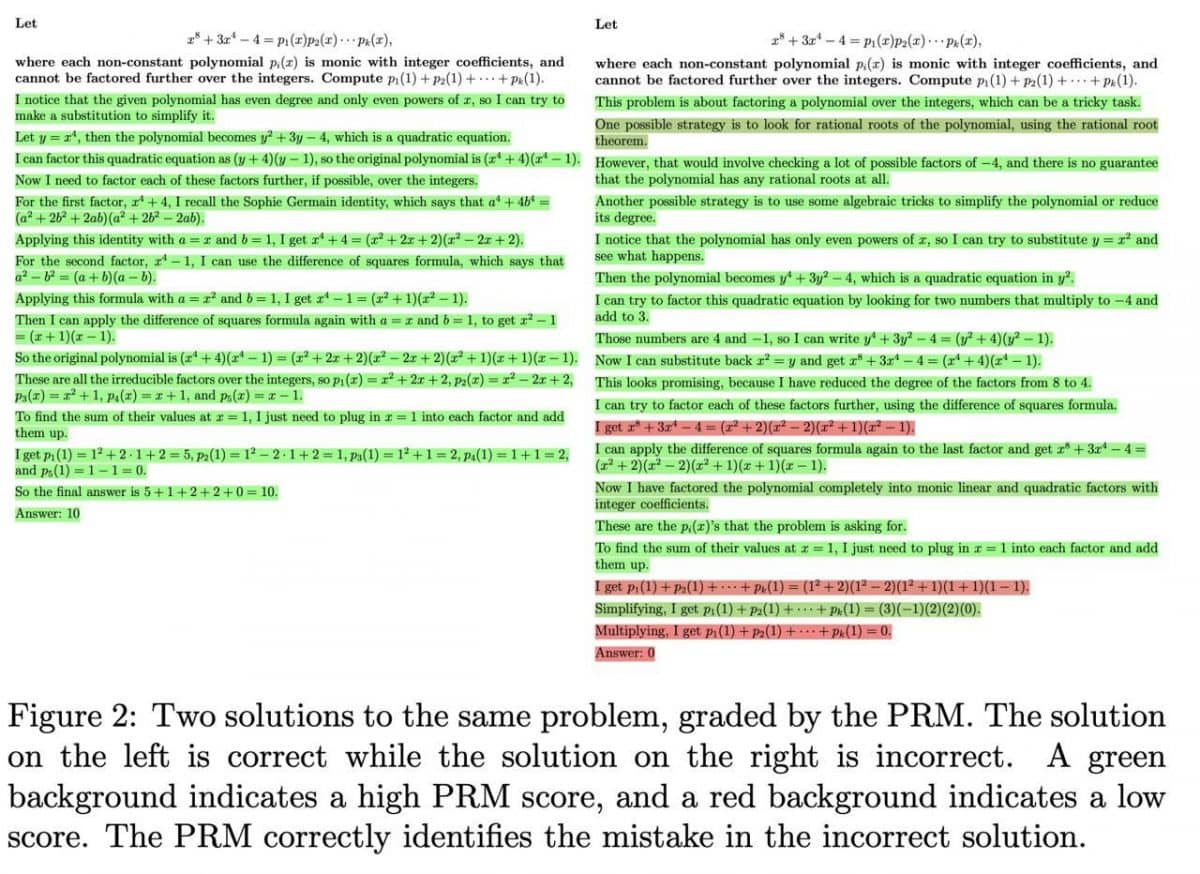

OpenAI has once again captured the attention of the AI community with its groundbreaking work on process-supervised reward models (PRMs). This approach evaluates the intermediate steps of an AI model's reasoning, not only its final answer, and leads to improved performance. OpenAI reports that the process-supervised reward model is much more reliable than its outcome-supervised counterpart. Its write-up showcases 10 problems and solutions, along with commentary about the reward model’s strengths and weaknesses.

Process supervision is a training technique that rewards an AI model for each correct step of reasoning, rather than evaluating only the final answer. This lets the model learn from specific mistakes, encourages more logical step-by-step reasoning, and makes that reasoning more transparent. OpenAI's researchers show that process supervision outperforms outcome supervision: in mathematical reasoning, reward models trained with process supervision are far more trustworthy than those trained with outcome supervision and perform significantly better on challenging reasoning tasks.

PRMs can also guide search at inference time. One proposed approach is a heuristic greedy search algorithm that uses a PRM's step-level feedback to optimize the reasoning paths explored by LLMs; this tailored PRM improved on chain-of-thought (CoT) prompting on mathematical benchmarks such as GSM8K and MATH.
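A simple greedy variant of PRM-guided search can be sketched as follows. At each step the generator proposes candidate next steps, the PRM scores each candidate using only the problem and the steps so far, and the highest-scoring step is kept. This is a minimal sketch, not necessarily the exact algorithm described above: `propose`, `prm`, the `ANSWER` stop marker and the toy stand-ins are hypothetical placeholders for a real LLM and a trained PRM, and the published method may differ in its details.

```python
from typing import Callable, List

# Hypothetical interfaces (assumptions, not a real library API):
#   propose(question, steps) -> candidate next steps, e.g. sampled from an LLM
#   prm(question, steps)     -> estimated probability that the LAST step is correct,
#                               judged only from the question and the steps so far
ProposeFn = Callable[[str, List[str]], List[str]]
PrmFn = Callable[[str, List[str]], float]


def prm_greedy_search(question: str, propose: ProposeFn, prm: PrmFn,
                      max_steps: int = 10, stop_token: str = "ANSWER") -> List[str]:
    """Build a solution one step at a time, keeping the step the PRM scores highest."""
    steps: List[str] = []
    for _ in range(max_steps):
        candidates = propose(question, steps)
        if not candidates:
            break
        # Score each candidate by the PRM's judgment of the extended prefix.
        best = max(candidates, key=lambda c: prm(question, steps + [c]))
        steps.append(best)
        if best.startswith(stop_token):  # hypothetical end-of-solution marker
            break
    return steps


# Toy stand-ins so the sketch runs without a real model (made-up data).
TOY_CANDIDATES = [
    ["3 * 4 = 12", "2 + 3 = 5"],    # options for step 1
    ["2 + 12 = 14", "5 * 4 = 20"],  # options for step 2
    ["ANSWER: 14", "ANSWER: 20"],   # options for step 3
]
CORRECT_STEPS = {"3 * 4 = 12", "2 + 12 = 14", "ANSWER: 14"}


def toy_propose(question, steps):
    i = len(steps)
    return TOY_CANDIDATES[i] if i < len(TOY_CANDIDATES) else []


def toy_prm(question, steps):
    # Pretend the PRM is confident only about steps we know are correct.
    return 0.9 if steps[-1] in CORRECT_STEPS else 0.1


print(prm_greedy_search("What is 2 + 3 * 4?", toy_propose, toy_prm))
# ['3 * 4 = 12', '2 + 12 = 14', 'ANSWER: 14']
```

Because each choice is final, greedy search is cheap but can commit to an early mistake that the PRM misjudges; beam search over partial solutions is a common, more expensive alternative.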

Process-supervised reward models (PRMs) offer fine-grained, step-wise feedback on model responses, which helps select effective reasoning paths for complex tasks. A process reward model, or process-supervised RM (PRM), [21] gives the reward for a step based only on the steps so far, $(x, y_1, \dots, y_i) \mapsto r_i$, where $x$ is the problem and $y_1, \dots, y_i$ are the first $i$ reasoning steps. Given a partial thinking trace $x, y_1, \dots, y_m$, a human can judge whether the steps so far are correct without looking at the final answer.

The OpenAI team of researchers and engineers (Alex Wei, Sheryl Hsu and Noam Brown) used a general-purpose reasoning model: an AI designed to “think” through challenging problems by breaking them down.
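The PRM definition above can be made concrete with a small sketch. Each step reward is computed from a prefix of the solution only, never from later steps or the final answer, and the per-step scores are then combined to rank whole candidate solutions (best-of-N selection). Multiplying the per-step correctness probabilities, i.e. the probability that every step is correct if the judgments are treated as independent, is an assumption of this sketch, as are the hypothetical `toy_scorer` and its made-up probabilities, which stand in for a trained PRM. An outcome-supervised reward model would instead produce a single score per complete solution.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical step scorer: estimated probability that the last step of the
# prefix (question, step_1, ..., step_i) is correct. It never sees later steps.
StepScorer = Callable[[str, Sequence[str]], float]


def prm_step_rewards(question: str, steps: Sequence[str], scorer: StepScorer) -> List[float]:
    """Return r_1..r_n, where r_i depends only on the question and steps 1..i."""
    return [scorer(question, steps[: i + 1]) for i in range(len(steps))]


def solution_score(step_probs: Sequence[float]) -> float:
    """Combine per-step probabilities into P(every step is correct), assuming independence."""
    return math.prod(step_probs)


def best_of_n(question: str, solutions: Sequence[Sequence[str]], scorer: StepScorer) -> int:
    """Return the index of the candidate solution with the highest PRM score."""
    scores = [solution_score(prm_step_rewards(question, s, scorer)) for s in solutions]
    return max(range(len(solutions)), key=scores.__getitem__)


# Toy scorer with made-up probabilities; a real PRM would condition on the whole prefix.
TOY_STEP_PROBS = {"3 * 4 = 12": 0.95, "2 + 12 = 14": 0.9, "2 + 3 = 5": 0.3, "5 * 4 = 20": 0.8}


def toy_scorer(question, prefix):
    return TOY_STEP_PROBS.get(prefix[-1], 0.5)


solutions = [["2 + 3 = 5", "5 * 4 = 20"], ["3 * 4 = 12", "2 + 12 = 14"]]
print(best_of_n("What is 2 + 3 * 4?", solutions, toy_scorer))  # 1 (0.855 beats 0.24)
```

A single early mistake (the 0.3 step) drags down the whole first solution's score even though its later step looks plausible, which is the kind of signal an outcome-only score cannot localize.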
