Neural Networks Can Learn Features That Are Invariant Or Equivariant

Motivation: deep neural networks can learn invariances (e.g., to translation, scale, and rotation) given enough data. In unconstrained architectures, this can be achieved through data. We explain equivariant neural networks, a notion underlying breakthroughs in machine learning from deep convolutional neural networks for computer vision to AlphaFold 2 for protein structure prediction, without assuming knowledge of equivariance or neural networks.
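To make the two terms concrete, here is a minimal NumPy sketch, not taken from any of the works quoted here. It assumes a 1-D signal with wrap-around (circular) boundaries; the helper name `circular_conv` is hypothetical. A convolution layer is translation equivariant (shifting the input shifts the output by the same amount), and a global max over that output is translation invariant (shifting the input leaves it unchanged).

```python
import numpy as np

def circular_conv(signal, kernel):
    """Cross-correlate a 1-D signal with a kernel, wrapping around at the edges."""
    n = len(signal)
    return np.array([
        sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel)))
        for i in range(n)
    ])

rng = np.random.default_rng(0)
x = rng.normal(size=8)   # toy input signal (assumed data)
k = rng.normal(size=3)   # toy filter weights (assumed data)
shift = 3

# Equivariance: convolving a shifted input equals shifting the convolved input.
shift_then_conv = circular_conv(np.roll(x, shift), k)
conv_then_shift = np.roll(circular_conv(x, k), shift)
is_equivariant = np.allclose(shift_then_conv, conv_then_shift)

# Invariance: global max pooling discards position, so the shift has no effect.
is_invariant = np.isclose(circular_conv(x, k).max(), shift_then_conv.max())

print(is_equivariant, is_invariant)
```

An unconstrained (fully connected) layer has no such guarantee built in, which is why it would have to pick the symmetry up from the data instead.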

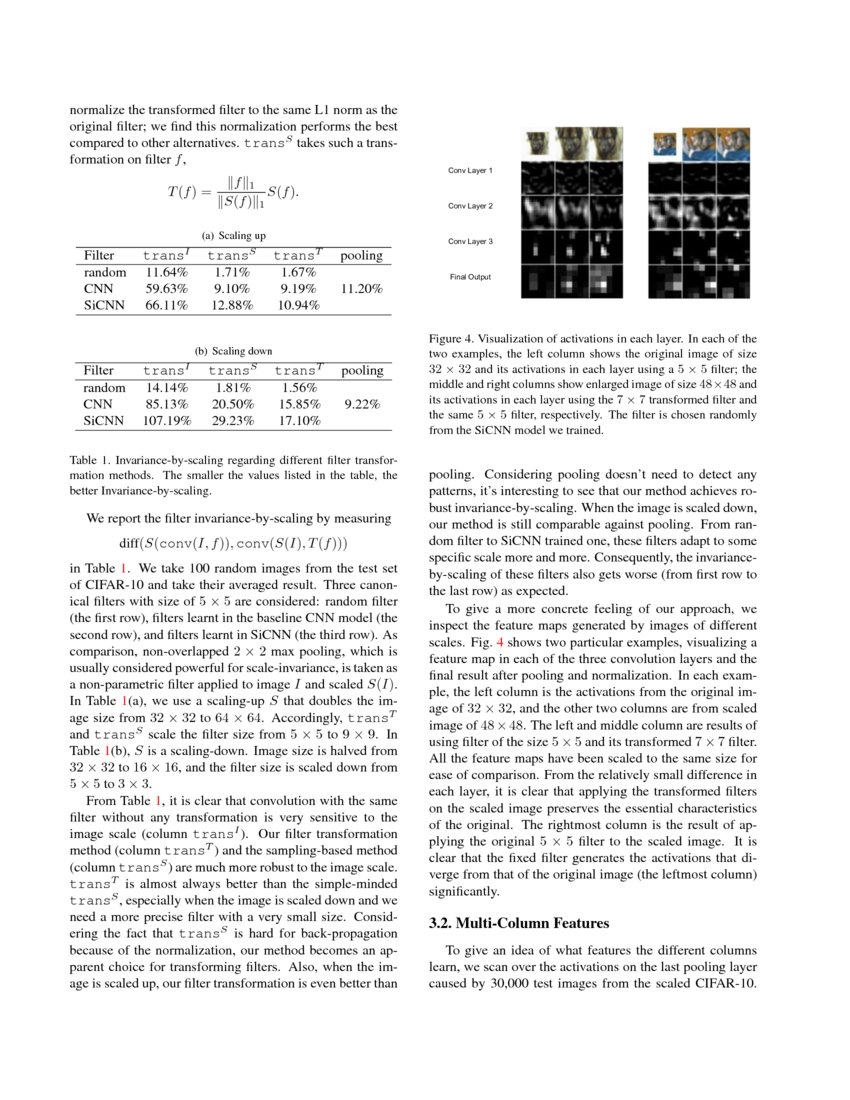

Neural networks naturally learn many transformed copies of the same feature, connected by symmetric weights. To the best of our knowledge, Augerino is the first work to learn invariances and equivariances in neural networks from training data alone. The ability to automatically discover symmetries enables us to uncover interpretable salient structure in data and provides better generalization. In particular, group equivariant neural networks are able to learn features under rotations and vertical, horizontal, and diagonal reflections (Engelenburg, 2020). In this step-by-step tutorial we introduce the mathematical foundations of the most common type of group invariant and group equivariant representation learning.
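The rotations and reflections mentioned above form the symmetry group of the square, which has eight elements. The following sketch is an illustration under assumptions, not the construction used in the cited work: it matches an image against all eight transformed copies of one template. The helper names `d4_orbit` and `d4_responses` are hypothetical. Transforming the image only permutes the eight responses (equivariance), so pooling over them gives a feature that does not change (invariance).

```python
import numpy as np

def d4_orbit(a):
    """Return the 8 copies of a square array under 90-degree rotations and reflections."""
    rotations = [np.rot90(a, k) for k in range(4)]
    return rotations + [np.fliplr(r) for r in rotations]

def d4_responses(image, template):
    """Inner product of the image with each transformed copy of the template."""
    return np.array([np.sum(image * t) for t in d4_orbit(template)])

rng = np.random.default_rng(0)
image = rng.normal(size=(5, 5))     # toy input (assumed data)
template = rng.normal(size=(5, 5))  # toy filter (assumed data)

base = d4_responses(image, template)

responses_permute = True  # equivariance: same 8 values, in a different order
pooled_is_constant = True  # invariance: max over the 8 responses is unchanged
for transformed in d4_orbit(image):
    moved = d4_responses(transformed, template)
    responses_permute &= bool(np.allclose(np.sort(moved), np.sort(base)))
    pooled_is_constant &= bool(np.isclose(moved.max(), base.max()))

print(responses_permute, pooled_is_constant)
```

This is the "many transformed copies of the same feature" idea in its simplest form: one set of weights, reused under every group element.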

As you aimlessly gaze out the window of a moving train, the hills in the distance gently flow across your visual field. We introduce the first flow equivariant models that respect these motion symmetries, leading to significantly improved generalization and sequence modeling. Having developed the framework for gauge equivariant neural network layers, we will now describe how this can be used to construct concrete gauge equivariant convolutions. Invariant and equivariant networks have been successfully used for learning images, sets, point clouds, and graphs. A basic challenge in developing such networks is finding the maximal collection of invariant and equivariant linear layers.
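For sets, the simplest of the data types listed above, the collection of permutation equivariant linear layers is small. With one scalar feature per element, such a layer is a combination of the identity and an averaging term, as in the Deep Sets construction. The sketch below assumes that form applied per feature channel, with arbitrary coefficients `lam` and `gamma`; the function names are hypothetical, and graphs and point clouds need richer layers than this.

```python
import numpy as np

def set_equivariant_layer(x, lam, gamma):
    """Map a set x of shape (n, d) to lam * x + gamma * (mean over the n elements)."""
    return lam * x + gamma * x.mean(axis=0, keepdims=True)

def set_invariant_readout(x):
    """Sum over the elements of the set; the order of elements cannot matter."""
    return x.sum(axis=0)

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 4))   # a set of 6 elements with 4 features (assumed data)
perm = rng.permutation(6)     # an arbitrary reordering of the elements

out = set_equivariant_layer(x, lam=0.7, gamma=-1.3)
out_perm = set_equivariant_layer(x[perm], lam=0.7, gamma=-1.3)

# Equivariance: permuting the input permutes the output in the same way.
layer_is_equivariant = np.allclose(out_perm, out[perm])

# Invariance: the readout ignores the permutation entirely.
readout_is_invariant = np.allclose(set_invariant_readout(x[perm]),
                                   set_invariant_readout(x))

print(layer_is_equivariant, readout_is_invariant)
```

Stacking equivariant layers and finishing with an invariant readout is the usual way such networks are assembled.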
