Near Real-Time Data Streaming With Kafka and Azure Cosmos DB: A No-Code Pipeline

HDInsight, Spark, Scala, Kafka, Cosmos DB: Stream Data From Kafka to Cosmos

In this video, I continue my learning journey into the Azure ecosystem, this time focusing on building a near real-time data pipeline using Confluent Kafka and Azure Cosmos DB. Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems. Using Kafka Connect, you can define connectors that move large data sets into and out of Kafka. Kafka Connect for Azure Cosmos DB is a connector that reads data from and writes data to Azure Cosmos DB.
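As a rough illustration of what "defining a connector" means, the sketch below builds a sink connector definition as a Python dictionary and prints it as JSON. The property names follow the Azure Cosmos DB sink connector documentation as I recall it and may differ between connector versions, so check them against the version you install. The connector name, topic, database, container, endpoint, and key values are placeholders, not values from this article.

```python
import json


def build_cosmos_sink_config(name, topic, endpoint, key, database, container):
    """Return a Kafka Connect connector definition for a Cosmos DB sink.

    Assumption: property names match the Cosmos DB sink connector docs
    for the version in use; verify before deploying.
    """
    return {
        "name": name,
        "config": {
            "connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",
            "tasks.max": "1",
            "topics": topic,
            # Plain JSON records without an embedded schema envelope.
            "key.converter": "org.apache.kafka.connect.json.JsonConverter",
            "key.converter.schemas.enable": "false",
            "value.converter": "org.apache.kafka.connect.json.JsonConverter",
            "value.converter.schemas.enable": "false",
            "connect.cosmos.connection.endpoint": endpoint,
            "connect.cosmos.master.key": key,
            "connect.cosmos.databasename": database,
            # Maps a Kafka topic to a Cosmos DB container as "topic#container".
            "connect.cosmos.containers.topicmap": f"{topic}#{container}",
        },
    }


# Placeholder values for illustration only.
sink = build_cosmos_sink_config(
    name="cosmosdb-sink",
    topic="hotels",
    endpoint="https://<account-name>.documents.azure.com:443/",
    key="<primary-key>",
    database="kafkaconnect",
    container="kafka",
)
print(json.dumps(sink, indent=2))
```

Keeping the definition in code rather than a hand-edited JSON file makes it easier to generate one connector per topic, but a static JSON file works just as well.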

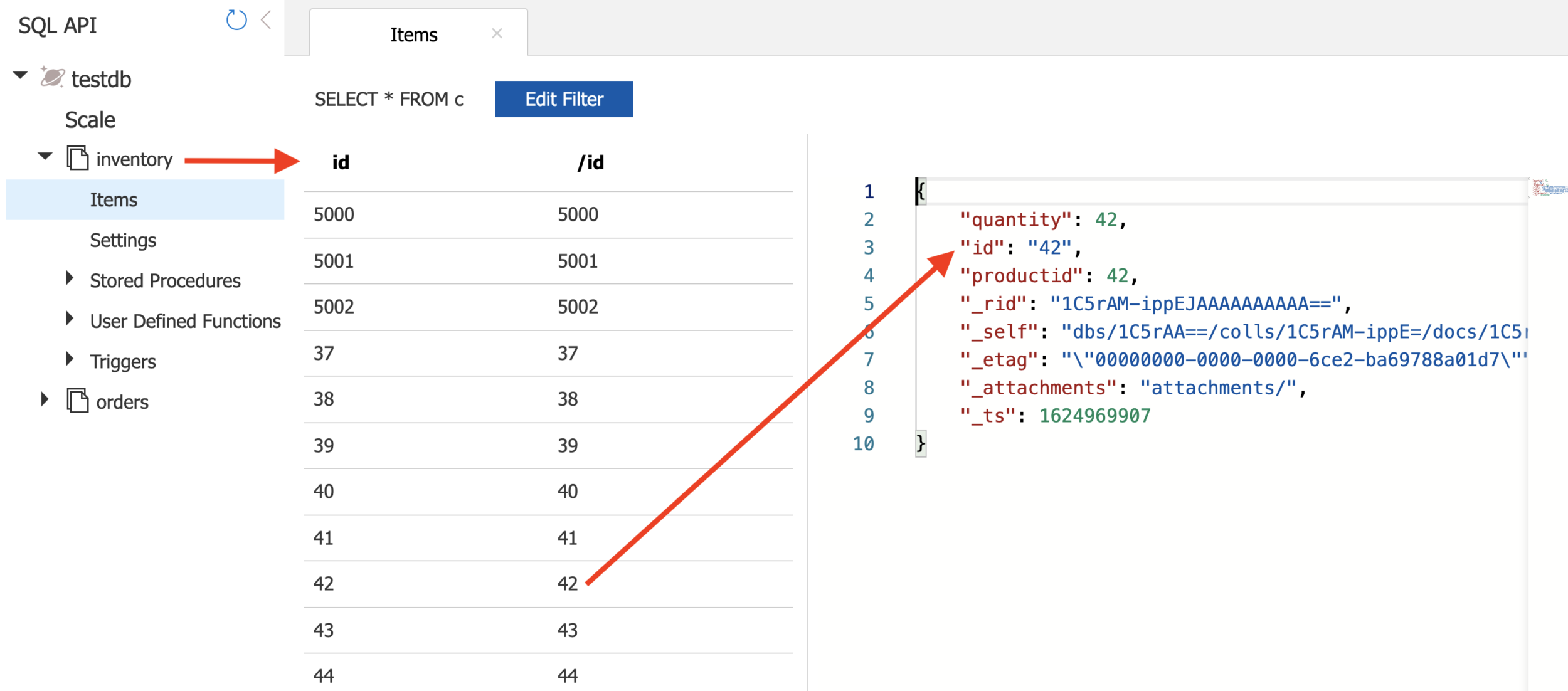

Getting Started With Kafka Connector for Azure Cosmos DB Using Docker

Azure Cosmos DB is a globally distributed, multi-model database service designed for real-time applications. In this section, we will walk through the process of configuring Azure Cosmos DB to store the streaming data from Kafka. The example use case below shows, step by step, how to do that in the context of building a real-time streaming data pipeline between Apache Kafka and Azure Cosmos DB. Whether you're building a real-time analytics pipeline, syncing operational data between services, or enabling event-driven microservices, this connector lets you use change data capture (CDC) and low-latency writes across your Azure Cosmos DB containers.

Stream Data From Apache Kafka to Azure Cosmos DB

Sync your Apache Kafka data with Azure Cosmos DB in minutes using Estuary Flow for real-time, no-code integration and seamless data pipelines.
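One practical detail when storing Kafka records in Azure Cosmos DB is that every item needs a string `id`. The sketch below shows one possible way to shape a record into an item before it is written. The helper name `to_cosmos_item` and the choice to derive the `id` from topic, partition, and offset are assumptions made for illustration; they are not how the connector itself behaves.

```python
import json


def to_cosmos_item(topic, partition, offset, value_bytes):
    """Turn a JSON-encoded Kafka record value into a Cosmos DB item.

    Hypothetical helper: the id scheme below is an illustrative choice.
    """
    doc = json.loads(value_bytes.decode("utf-8"))
    if not isinstance(doc, dict):
        # Wrap scalars and arrays so the item is always a JSON object.
        doc = {"value": doc}
    # A deterministic id means a replayed record overwrites the same item
    # on upsert instead of creating a duplicate.
    doc.setdefault("id", f"{topic}-{partition}-{offset}")
    # Cosmos DB expects the id to be a string.
    doc["id"] = str(doc["id"])
    return doc


item = to_cosmos_item("hotels", 0, 42, b'{"name": "Contoso Inn", "city": "Seattle"}')
print(item)
```

If the records already carry a natural key, using that as the `id` is usually the simpler option.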

Use the following steps to deploy an Azure virtual network, Kafka, and Spark clusters to your Azure subscription. Use the following button to sign in to Azure and open the template in the Azure portal.

In modern cloud-based architectures, real-time data streaming is essential for processing large-scale, event-driven data. Apache Kafka, Azure Functions, and Azure Cosmos DB together provide a powerful combination for achieving low-latency, scalable, and highly available data streaming solutions.

Start with the Confluent Platform setup because it gives you a complete environment to work with. If you don't wish to use Confluent Platform, you need to install and configure ZooKeeper, Apache Kafka, and Kafka Connect yourself. You also need to install and configure the Azure Cosmos DB connectors manually.

Set up a real-time source connector for Azure Cosmos DB in minutes. Estuary captures change data (CDC), events, or snapshots, with no custom pipelines, agents, or manual configs needed. Choose Apache Kafka as your target system. Estuary intelligently maps schemas, supports both batch and streaming loads, and adapts to schema changes automatically.
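For the self-managed route described above, where you run Kafka Connect and install the Cosmos DB connector yourself, a connector is typically registered by sending its definition to the Kafka Connect REST API with a `POST` to `/connectors`. The sketch below only builds that request so it can be inspected without a running cluster. The worker address `http://localhost:8083` is the usual default and is assumed here, and the connector definition is a trimmed placeholder rather than a complete configuration.

```python
import json
import urllib.request


def build_register_request(connect_url, connector):
    """Build (but do not send) a POST /connectors request for Kafka Connect."""
    body = json.dumps(connector).encode("utf-8")
    return urllib.request.Request(
        url=f"{connect_url.rstrip('/')}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Trimmed placeholder definition; a real one needs the full connector config.
connector = {
    "name": "cosmosdb-sink",
    "config": {
        "connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",
        "topics": "hotels",
    },
}

request = build_register_request("http://localhost:8083", connector)
print(request.get_method(), request.full_url)

# To submit it against a running Kafka Connect worker:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Separating request construction from sending keeps the example safe to run anywhere and makes the payload easy to log or review before it reaches the cluster.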
