Min New Tokens Argument In Generate Implementation Issue 20814

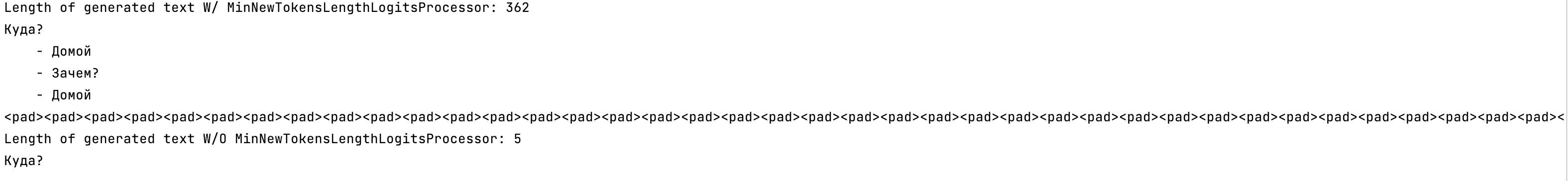

Similarly to `max_new_tokens`, a `min_new_tokens` option would count only the newly generated tokens, ignoring the tokens of the input sequence (the prompt) in decoder-only models. The `min_length` option of the `generate()` method can be ambiguous for decoder-only models, because it also counts the prompt.

For anyone it may concern: I found out the issue was with the `max_length` argument of the generation method. It limits the maximum number of tokens including the input tokens.
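The difference between the two families of options is plain arithmetic on the prompt length. The sketch below is not taken from the library; `new_token_bounds` and `total_length_bounds` are hypothetical helper names used only to illustrate how total-length limits (`min_length`, `max_length`) and new-token limits (`min_new_tokens`, `max_new_tokens`) relate for a decoder-only model, assuming the prompt counts toward the total.

```python
def new_token_bounds(prompt_len, min_length, max_length):
    """Convert total-length limits into limits on newly generated tokens.

    Hypothetical helper: assumes min_length/max_length count prompt + new tokens.
    """
    min_new = max(min_length - prompt_len, 0)
    max_new = max(max_length - prompt_len, 0)
    return min_new, max_new


def total_length_bounds(prompt_len, min_new_tokens, max_new_tokens):
    """Convert new-token limits into limits on the total sequence length."""
    return prompt_len + min_new_tokens, prompt_len + max_new_tokens


prompt_len = 12

# With a 12-token prompt, min_length=10 is already satisfied by the prompt,
# so it forces no new tokens at all; max_length=20 leaves room for only 8.
print(new_token_bounds(prompt_len, min_length=10, max_length=20))  # (0, 8)

# The new-token options are independent of the prompt length.
print(total_length_bounds(prompt_len, min_new_tokens=10, max_new_tokens=20))  # (22, 32)
```

This is why a `min_length` smaller than the prompt has no visible effect, while `min_new_tokens` always applies to the continuation.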

Can we add a new parameter `min_new_tokens` to the `generate` function to limit the length of newly generated tokens? The current parameter `min_length` limits the length of the prompt plus the newly generated tokens, not the length of the newly generated tokens alone.

Pipeline already supports the option `max_new_tokens`. I'm requesting that the existing `min_new_tokens` be usable with pipeline the same way as `max_new_tokens`.

Please note that if the bug-related issue you submitted lacks corresponding environment info and a minimal reproducible demo, it will be challenging for us to reproduce and resolve the issue, reducing the likelihood of receiving feedback. Checklist: 1. If the issue you raised is not a feature but a question, please raise a discussion at github sgl project sglang discussions new choose; otherwise, it will be closed. 2. Please use English, otherwise it will be clo.

How To Set Max New Tokens Parameter In Model Prediction Component

Controlling `max_length` via the config is deprecated, and `max_length` will be removed from the config in v5 of Transformers. We recommend using `max_new_tokens` to control the maximum length of the generation.

I want to use the `generate()` function with the compiled model, but it appears that the compiled model (an instance of the class `OptimizedModule`) does not have the `generate()` function. Instead, calling `generate()` triggers `__getattr__()`, and the function is executed by the original `BertLMHeadModel`.

However, I get a warning: "This is a friendly reminder - the current text generation call will exceed the model's predefined maximum length (1024). Depending on the model, you may observe exceptions, performance degradation, or nothing at all." CUDA also throws a `RuntimeError`. Why?
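One plausible reading of the warning above is that the prompt length plus the requested new tokens exceeds the model's maximum number of positions (1024 in the quoted message), which on some models can lead to out-of-range position indices and a CUDA error. The sketch below only shows the budget check; `check_generation_budget` and its parameter names are hypothetical and are not part of any library.

```python
def check_generation_budget(prompt_len, max_new_tokens, max_positions=1024):
    """Report whether prompt + new tokens fits in the model's position limit.

    Hypothetical helper: max_positions=1024 is taken from the quoted warning,
    not read from a real model config.
    """
    total = prompt_len + max_new_tokens
    overflow = max(total - max_positions, 0)
    # Largest max_new_tokens that still fits after the prompt.
    room = max(max_positions - prompt_len, 0)
    return {
        "total": total,
        "fits": overflow == 0,
        "overflow": overflow,
        "safe_max_new_tokens": min(max_new_tokens, room),
    }


# A 1000-token prompt with 100 requested new tokens overshoots by 76 tokens.
report = check_generation_budget(prompt_len=1000, max_new_tokens=100)
print(report)
# {'total': 1100, 'fits': False, 'overflow': 76, 'safe_max_new_tokens': 24}
```

Under this assumption, either shortening the prompt or lowering `max_new_tokens` to the reported safe value keeps the call within the limit.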
