Markov Chains and Linear Algebra

This game is an example of a Markov chain, named for A. A. Markov, who worked in the first half of the 1900s. Each vector of state probabilities is a probability vector (its entries are nonnegative and sum to 1), and the matrix is a transition matrix. In this appendix, we present an application to those probabilistic systems known as Markov chains.
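A minimal sketch of these two definitions in Python, assuming the row-vector convention: a distribution is a row vector multiplied on the left of the transition matrix, so each row of the matrix sums to 1. Some texts use column vectors and column-stochastic matrices instead. The 3-state matrix `T` and the helper names are hypothetical, chosen only for illustration. Exact `Fraction` arithmetic is used so that the "sums to 1" checks are not disturbed by floating-point round-off.

```python
from fractions import Fraction

# Hypothetical 3-state transition matrix: row i lists the probabilities of
# moving from state i to each state j, so every row sums to 1.
T = [
    [Fraction(1, 2), Fraction(1, 2), Fraction(0)],
    [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)],
    [Fraction(0), Fraction(1, 2), Fraction(1, 2)],
]


def is_probability_vector(v):
    """True if all entries are nonnegative and they sum to exactly 1."""
    return all(x >= 0 for x in v) and sum(v) == 1


def is_transition_matrix(m):
    """True if m is square and every row is a probability vector."""
    return all(len(row) == len(m) for row in m) and all(
        is_probability_vector(row) for row in m
    )


def step(v, m):
    """One step of the chain: the row vector v times the matrix m."""
    n = len(m)
    return [sum(v[i] * m[i][j] for i in range(n)) for j in range(n)]


v0 = [Fraction(1), Fraction(0), Fraction(0)]  # start in state 0 with certainty
v1 = step(v0, T)  # one step later
v2 = step(v1, T)  # two steps later
```

Starting from state 0, two steps give the distribution (3/8, 1/2, 1/8), which is again a probability vector, as the product of a probability vector with a transition matrix always is.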

Math 224, 11 December 2007

1. Introduction

Markov chains are named after the Russian mathematician Andrei Markov and provide a way of dealing with a sequence of events based on the probabilities dictating the motion of a population among various states (Fraleigh 1 5). Consider a situation where a population can exist in two or more states. A Markov chain is a series of discrete time intervals over …

Generalizations of Markov chains, including continuous-time Markov processes and infinite-dimensional Markov processes, are widely studied, but we will not discuss them in these notes. This queuing model is an example of a so-called birth-death chain (a Markov chain in which, at each step, the state of the system can change by at most 1), which we will introduce in more detail in Section 1.5. Below you will find an example of a Markov chain on a countably infinite state space, but first we want to discuss what kind of restrictions are put on a model by assuming that it is a Markov chain.
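The queuing model itself is not specified in this section, so the following is a hedged sketch of a hypothetical discrete-time queue holding at most `capacity` customers: at each step one customer arrives with probability `p_arrive` or one leaves with probability `p_depart`. The resulting transition matrix is tridiagonal, which is exactly the birth-death property that the state changes by at most 1 per step. The simulator chooses each next state using only the current state's row of the matrix, which is the restriction that the Markov assumption places on the model. All function names and parameter values are assumptions for illustration.

```python
import random


def birth_death_matrix(capacity, p_arrive, p_depart):
    """Transition matrix for a hypothetical queue holding 0..capacity customers.

    Assumes p_arrive + p_depart <= 1. From state i the queue grows by one with
    probability p_arrive (unless full), shrinks by one with probability
    p_depart (unless empty), and otherwise stays put.
    """
    n = capacity + 1
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        if i < capacity:
            P[i][i + 1] = p_arrive  # birth: one arrival
        if i > 0:
            P[i][i - 1] = p_depart  # death: one departure
        P[i][i] = 1.0 - sum(P[i])  # remaining probability: no change
    return P


def simulate(P, start, steps, rng):
    """Sample a path; each move looks only at the current state's row of P."""
    path = [start]
    state = start
    for _ in range(steps):
        state = rng.choices(range(len(P)), weights=P[state])[0]
        path.append(state)
    return path


P = birth_death_matrix(capacity=5, p_arrive=0.3, p_depart=0.4)
path = simulate(P, start=0, steps=50, rng=random.Random(0))
```

Capping the queue at `capacity` keeps the state space finite so the matrix can be written out; the countably infinite version of the same chain simply drops the cap.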

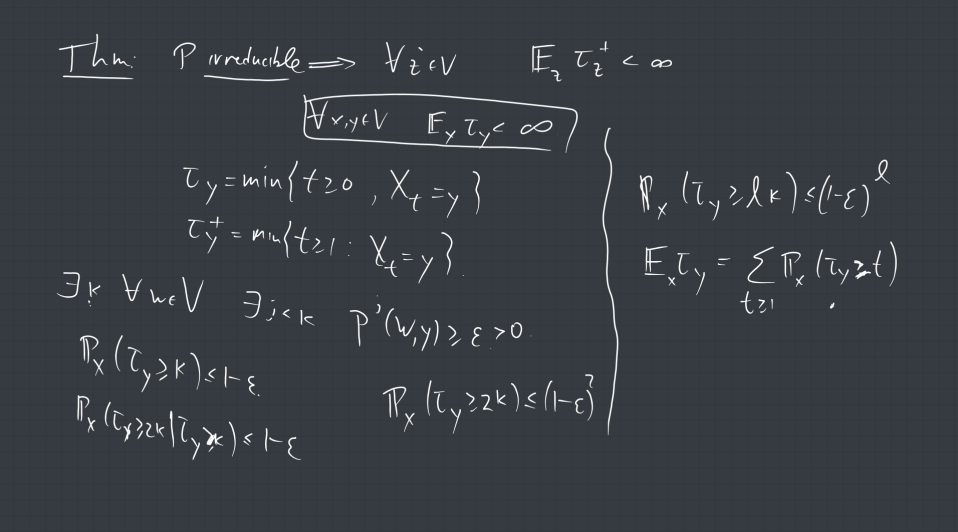

We will see in the mathematical introduction that Markov chains can be described with matrices; a central aim of this paper is to use the tools of linear algebra to understand the different properties of Markov chains, illustrating them with examples simulated in MATLAB.

In previous lectures we have seen that any aperiodic and irreducible finite Markov chain (e.g., a lazy random walk) converges to a unique stationary distribution, and that the mixing time captures how long it takes for this convergence to happen.

Theorem 16.9.3 (Convergence to the invariant distribution). For a Markov chain with finite state space and transition matrix $P$, if the chain is irreducible and aperiodic, then the invariant distribution $\pi$ exists and is unique, and for any initial distribution $\lambda$, the sequence $\lambda P^n$ converges to $\pi$.

Before we proceed to the general definition of a Markov chain, let's play around with this model for a bit and see what it can do. For example, suppose that it's sunny on Monday. What is the probability that it's rainy on Friday, according to this model?
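The weather model's actual probabilities are not given in this section, so the following sketch answers the Monday-to-Friday question for a hypothetical two-state chain with made-up numbers. Friday is four transitions after Monday, so the answer is the "rainy" entry of $\lambda P^4$, where $\lambda$ puts all its mass on "sunny". The same code then checks the convergence theorem numerically: it takes the invariant distribution from the standard closed form for a two-state chain and confirms that $\lambda P^n$ gets very close to it. `STATES`, `P`, and the helper names are assumptions for illustration.

```python
from fractions import Fraction

STATES = ["sunny", "rainy"]

# Hypothetical weather chain (made-up numbers):
# row = today's weather, column = tomorrow's weather.
P = [
    [Fraction(4, 5), Fraction(1, 5)],  # sunny -> sunny, sunny -> rainy
    [Fraction(1, 2), Fraction(1, 2)],  # rainy -> sunny, rainy -> rainy
]


def step(dist, P):
    """Advance a row-vector distribution by one day: dist -> dist P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_after(dist, P, n_steps):
    """Compute lambda P^n by repeated vector-matrix products."""
    for _ in range(n_steps):
        dist = step(dist, P)
    return dist


# Sunny on Monday; Friday is 4 transitions later.
monday = [Fraction(1), Fraction(0)]
friday = distribution_after(monday, P, 4)
p_rain_friday = friday[STATES.index("rainy")]

# For a two-state chain P = [[1-a, a], [b, 1-b]], solving pi P = pi
# gives pi = (b/(a+b), a/(a+b)).
a, b = P[0][1], P[1][0]
pi = [b / (a + b), a / (a + b)]
assert step(pi, P) == pi  # pi is invariant

# All entries of P are positive, so the chain is irreducible and aperiodic
# and lambda P^n should approach pi.
far = distribution_after(monday, P, 60)
gap = max(abs(x - y) for x, y in zip(far, pi))
```

With these made-up numbers, the probability of rain on Friday is 9919/35000 ≈ 0.2834, already close to the stationary value $\pi_{\text{rainy}} = 2/7 \approx 0.2857$. For a two-state chain the distance to $\pi$ shrinks by the factor $|1 - a - b| = 0.3$ at every step, which gives one concrete picture of a short mixing time.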
