Managing Your AI Workloads at Run:ai: A Path to Improved Productivity

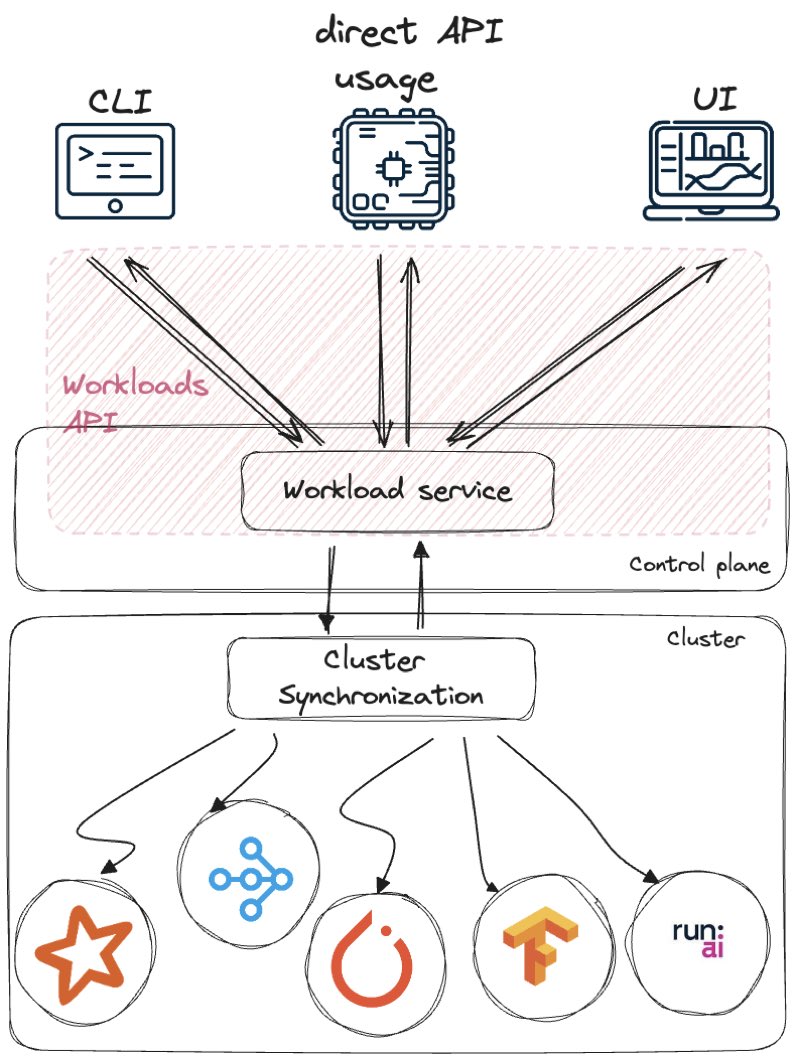

NVIDIA Run:ai is a GPU orchestration and optimization platform that helps organizations maximize compute utilization for AI workloads. By optimizing the use of expensive compute resources, NVIDIA Run:ai accelerates AI development cycles and drives faster time to market for AI-powered innovations. Run:ai enhances visibility and simplifies management by monitoring, presenting, and orchestrating all AI workloads in the clusters it is installed on.

Run:ai is a comprehensive platform designed to optimize and orchestrate AI workloads, with a focus on GPU utilization. It features dynamic workload management, GPU optimization, and extensive visibility into AI infrastructure, which makes it suitable for a range of industries. In this guide, we’ll explore what Run:ai is, why it matters, its features and benefits, and how businesses and developers can use it to run large-scale AI workloads smoothly.

Run:ai serves as an AI optimization platform that improves productivity by managing AI workloads effectively. Its key features include a workload scheduler that organizes resources throughout the AI lifecycle, and GPU fractioning, which lets several workloads share a single GPU for more cost-effective use of GPUs across environments.

When you create a new workload, some fields and assets may have limitations or defaults. These rules and defaults are derived from a policy set by your administrator. Policies let you control, standardize, and simplify the workload submission process. For additional information, see Policies and Rules.
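To make the defaults-and-rules idea concrete, here is a minimal Python sketch of how a submission could be checked against a policy: defaults fill in any fields the user leaves empty, and rules (here a numeric range and a locked field) reject values outside what the administrator allows. The `FieldRule` class, the `apply_policy` function, and the field names are hypothetical illustrations, not Run:ai's policy API or schema.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class FieldRule:
    """Hypothetical policy entry for one workload field (not Run:ai's schema)."""
    default: Any = None          # value filled in when the user leaves the field empty
    min: Optional[float] = None  # lower bound for numeric fields
    max: Optional[float] = None  # upper bound for numeric fields
    can_edit: bool = True        # False locks the field to its default


def apply_policy(request: Dict[str, Any], policy: Dict[str, FieldRule]) -> Dict[str, Any]:
    """Fill in policy defaults, then raise ValueError if any rule is violated."""
    spec = dict(request)  # copy so the caller's request is not mutated
    for name, rule in policy.items():
        supplied = spec.get(name)
        if supplied is None:
            if rule.default is None:
                continue  # no value and no default: leave the field unset
            spec[name] = rule.default
        elif not rule.can_edit and supplied != rule.default:
            raise ValueError(f"{name} is locked by policy to {rule.default!r}")
        value = spec[name]
        if rule.min is not None and value < rule.min:
            raise ValueError(f"{name}={value} is below the policy minimum {rule.min}")
        if rule.max is not None and value > rule.max:
            raise ValueError(f"{name}={value} exceeds the policy maximum {rule.max}")
    return spec


# Hypothetical policy: field names and values are illustrative only.
policy = {
    "gpu_devices": FieldRule(default=1, min=0, max=4),
    "image": FieldRule(default="registry.example.com/train:latest", can_edit=False),
}

print(apply_policy({"gpu_devices": 2}, policy))
# {'gpu_devices': 2, 'image': 'registry.example.com/train:latest'}
```

Applying defaults before validation means a field the user never touched is still checked against the same limits as one they filled in by hand.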

The workloads table can be found under Workloads in the Run:ai platform. It lists all the workloads scheduled on the Run:ai Scheduler and allows you to manage them.

With the rapid growth of AI-driven applications, efficient and dynamic resource management has become essential. Run:ai addresses this challenge with a Kubernetes-based solution that allocates and optimizes GPU resources.

Run:ai manages resources for machine learning by dynamically allocating computing power across AI workloads. With dynamic workload allocation, Run:ai adjusts compute resources based on workload demands, so that hardware is used efficiently.

Each stage of the AI lifecycle requires different tools, resources, and frameworks to perform well. NVIDIA Run:ai simplifies this by offering specialized workload types tailored to each phase, which eases the transition between the stages of an ML workflow.
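As a rough illustration of demand-based allocation, the sketch below divides a fixed pool of GPUs among workloads using max-min fairness (water-filling), with fractional demands such as 0.5 standing in for GPU fractions. This is a generic textbook algorithm chosen for illustration, not the Run:ai Scheduler's actual logic; the function name and workload names are hypothetical.

```python
from typing import Dict


def max_min_fair_share(capacity: float, demands: Dict[str, float]) -> Dict[str, float]:
    """Split `capacity` GPUs across workloads with max-min fairness (water-filling).

    Generic illustration only; not the Run:ai Scheduler's algorithm.
    """
    allocation = {name: 0.0 for name in demands}
    remaining = float(capacity)
    # Outstanding demand of workloads that have not been fully served yet.
    unsatisfied = {name: d for name, d in demands.items() if d > 0}
    while unsatisfied and remaining > 1e-9:
        share = remaining / len(unsatisfied)  # equal split of what is left
        # Workloads whose whole demand fits in an equal share are fully served.
        satisfied = {n: d for n, d in unsatisfied.items() if d <= share}
        if not satisfied:
            # Everyone left wants more than an equal share: split evenly and stop.
            for n in unsatisfied:
                allocation[n] += share
            remaining = 0.0
            break
        for n, d in satisfied.items():
            allocation[n] += d
            remaining -= d
            del unsatisfied[n]
    return allocation


# Hypothetical example: 8 GPUs shared by four workloads with uneven demands.
print(max_min_fair_share(8, {"a": 1, "b": 2, "c": 6, "d": 6}))
# {'a': 1.0, 'b': 2.0, 'c': 2.5, 'd': 2.5}
```

Workloads that ask for less than an equal share keep their full request, and the leftover capacity is redistributed evenly among the workloads that still want more, so no GPU time sits idle while demand remains.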

